Here are several typical deployment examples, for reference only.

1 - Vagrant Sandbox Environment

Overview

This is the configuration file used by the sandbox environment that ships with Pigsty.

Original on GitHub: https://github.com/Vonng/pigsty/blob/master/pigsty.yml

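This configuration file can serve as a standard learning example. When deploying to a set of virtual machines with the same specifications, it usually needs only a few changes: replace 10.10.10.10 with the IP of your meta node, replace 10.10.10.* with the IPs of your database nodes, and modify or remove the ansible_host connection parameters so that they provide the correct connection information. After that, Pigsty can be deployed to that set of virtual machines.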
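As a rough illustration of the changes described above, the fragment below sketches only the host definitions after adaptation. The 192.168.1.x addresses are hypothetical placeholders, not values from the Pigsty repository, and the `ansible_host` aliases are simply dropped on the assumption that Ansible can reach each node directly by its IP. Other occurrences of 10.10.10.10 in the file (for example `node_dns_hosts`, `node_dns_servers`, `dns_records`, `dcs_servers`, `grafana_url`, and the meta-node rule in `pg_hba_rules`), as well as the `vip_address` values, would presumably need the same treatment.

```yaml
# Sketch only: host sections of pigsty.yml adapted to a hypothetical 192.168.1.0/24 network.
# All `vars` blocks are assumed to stay as in the sandbox file unless noted.
all:
  children:
    meta:
      vars:
        meta_node: true
        ansible_group_priority: 99
      hosts:
        192.168.1.10: {}          # was 10.10.10.10 with ansible_host: meta

    pg-meta:
      hosts:
        192.168.1.10: {pg_seq: 1, pg_role: primary}
      vars:
        pg_cluster: pg-meta       # remaining vars unchanged

    pg-test:
      hosts:
        192.168.1.11: {pg_seq: 1, pg_role: primary}   # was 10.10.10.11 (node-1)
        192.168.1.12: {pg_seq: 2, pg_role: replica}   # was 10.10.10.12 (node-2)
        192.168.1.13: {pg_seq: 3, pg_role: offline}   # was 10.10.10.13 (node-3)
      vars:
        pg_cluster: pg-test       # remaining vars unchanged
```

If the nodes are only reachable through SSH aliases, keeping `ansible_host` and pointing it at the right alias is the alternative to removing it.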

Configuration File

---

######################################################################

# File : pigsty.yml

# Path : pigsty.yml

# Desc : Pigsty Configuration file

# Note : follow ansible inventory file format

# Ctime : 2020-05-22

# Mtime : 2021-03-16

# Copyright (C) 2018-2021 Ruohang Feng

######################################################################

######################################################################

# Development Environment Inventory #

######################################################################

all: # top-level namespace, match all hosts

#==================================================================#

# Clusters #

#==================================================================#

# postgres database clusters are defined as kv pairs in `all.children`

# where the key is the cluster name and the value is an object consisting

# of cluster members (hosts) and ad-hoc variables (vars)

# meta nodes are defined in the special group "meta" with `meta_node=true`

children:

#-----------------------------

# meta controller

#-----------------------------

meta: # special group 'meta' defines the main controller machine

vars:

meta_node: true # mark node as meta controller

ansible_group_priority: 99 # meta group is top priority

# nodes in meta group

hosts: {10.10.10.10: {ansible_host: meta}}

#-----------------------------

# cluster: pg-meta

#-----------------------------

pg-meta:

# - cluster members - #

hosts:

10.10.10.10: {pg_seq: 1, pg_role: primary, ansible_host: meta}

# - cluster configs - #

vars:

pg_cluster: pg-meta # define actual cluster name

pg_version: 13 # define installed pgsql version

node_tune: tiny # tune node into oltp|olap|crit|tiny mode

pg_conf: tiny.yml # tune pgsql into oltp/olap/crit/tiny mode

patroni_mode: pause # enter maintenance mode, {default|pause|remove}

patroni_watchdog_mode: off # disable watchdog (off|automatic|required)

pg_lc_ctype: en_US.UTF8 # enabled pg_trgm i18n char support

pg_users:

# complete example of user/role definition for production user

- name: dbuser_meta # example production user have read-write access

password: DBUser.Meta # example user's password, can be encrypted

login: true # can login, true by default (should be false for role)

superuser: false # is superuser? false by default

createdb: false # can create database? false by default

createrole: false # can create role? false by default

inherit: true # can this role use inherited privileges?

replication: false # can this role do replication? false by default

bypassrls: false # can this role bypass row level security? false by default

connlimit: -1 # connection limit, -1 disable limit

expire_at: '2030-12-31' # 'timestamp' when this role is expired

expire_in: 365 # now + n days when this role is expired (OVERWRITE expire_at)

roles: [dbrole_readwrite] # dbrole_admin|dbrole_readwrite|dbrole_readonly

pgbouncer: true # add this user to pgbouncer? false by default (true for production user)

parameters: # user's default search path

search_path: public

comment: test user

# simple example for personal user definition

- name: dbuser_vonng2 # personal user example with limited access: offline instances only,

password: DBUser.Vonng # or instances explicitly marked with `pg_offline_query = true`

roles: [dbrole_offline] # personal/stats/ETL users should be granted dbrole_offline

expire_in: 365 # expire in 365 days since creation

pgbouncer: false # personal user should NOT be allowed to login with pgbouncer

comment: example personal user for interactive queries

pg_databases:

- name: meta # name is the only required field for a database

# owner: postgres # optional, database owner

# template: template1 # optional, template1 by default

# encoding: UTF8 # optional, UTF8 by default, must be the same as the template database, leave blank to use the db default

# locale: C # optional, C by default, must be the same as the template database, leave blank to use the db default

# lc_collate: C # optional, C by default, must be the same as the template database, leave blank to use the db default

# lc_ctype: C # optional, C by default, must be the same as the template database, leave blank to use the db default

allowconn: true # optional, true by default, false disable connect at all

revokeconn: false # optional, false by default, true revoke connect from public # (only default user and owner have connect privilege on database)

# tablespace: pg_default # optional, 'pg_default' is the default tablespace

connlimit: -1 # optional, connection limit, -1 or none disable limit (default)

extensions: # optional, extension name and where to create

- {name: postgis, schema: public}

parameters: # optional, extra parameters with ALTER DATABASE

enable_partitionwise_join: true

pgbouncer: true # optional, add this database to pgbouncer list? true by default

comment: pigsty meta database # optional, comment string for database

pg_default_database: meta # default database will be used as primary monitor target

# proxy settings

vip_mode: l2 # enable/disable vip (require members in same LAN)

vip_address: 10.10.10.2 # virtual ip address

vip_cidrmask: 8 # cidr network mask length

vip_interface: eth1 # interface to add virtual ip

#-----------------------------

# cluster: pg-test

#-----------------------------

pg-test: # define cluster named 'pg-test'

# - cluster members - #

hosts:

10.10.10.11: {pg_seq: 1, pg_role: primary, ansible_host: node-1}

10.10.10.12: {pg_seq: 2, pg_role: replica, ansible_host: node-2}

10.10.10.13: {pg_seq: 3, pg_role: offline, ansible_host: node-3}

# - cluster configs - #

vars:

# basic settings

pg_cluster: pg-test # define actual cluster name

pg_version: 13 # define installed pgsql version

node_tune: tiny # tune node into oltp|olap|crit|tiny mode

pg_conf: tiny.yml # tune pgsql into oltp/olap/crit/tiny mode

# business users, adjust on your own needs

pg_users:

- name: test # example production user have read-write access

password: test # example user's password

roles: [dbrole_readwrite] # dbrole_admin|dbrole_readwrite|dbrole_readonly|dbrole_offline

pgbouncer: true # production user that access via pgbouncer

comment: default test user for production usage

pg_databases: # create a business database 'test'

- name: test # use the simplest form

pg_default_database: test # default database will be used as primary monitor target

# proxy settings

vip_mode: l2 # enable/disable vip (require members in same LAN)

vip_address: 10.10.10.3 # virtual ip address

vip_cidrmask: 8 # cidr network mask length

vip_interface: eth1 # interface to add virtual ip

#==================================================================#

# Globals #

#==================================================================#

vars:

#------------------------------------------------------------------------------

# CONNECTION PARAMETERS

#------------------------------------------------------------------------------

# this section defines connection parameters

# ansible_user: vagrant # admin user with ssh access and sudo privilege

proxy_env: # global proxy env when downloading packages

no_proxy: "localhost,127.0.0.1,10.0.0.0/8,192.168.0.0/16,*.pigsty,*.aliyun.com,mirrors.aliyuncs.com,mirrors.tuna.tsinghua.edu.cn,mirrors.zju.edu.cn"

# http_proxy: ''

# https_proxy: ''

# all_proxy: ''

#------------------------------------------------------------------------------

# REPO PROVISION

#------------------------------------------------------------------------------

# this section defines how to build a local repo

# - repo basic - #

repo_enabled: true # build local yum repo on meta nodes?

repo_name: pigsty # local repo name

repo_address: yum.pigsty # repo external address (ip:port or url)

repo_port: 80 # listen port, must be the same as the port in repo_address

repo_home: /www # default repo dir location

repo_rebuild: false # force re-download packages

repo_remove: true # remove existing repos

# - where to download - #

repo_upstreams:

- name: base

description: CentOS-$releasever - Base - Aliyun Mirror

baseurl:

- http://mirrors.aliyun.com/centos/$releasever/os/$basearch/

- http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/

- http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/

gpgcheck: no

failovermethod: priority

- name: updates

description: CentOS-$releasever - Updates - Aliyun Mirror

baseurl:

- http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/

- http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/

- http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/

gpgcheck: no

failovermethod: priority

- name: extras

description: CentOS-$releasever - Extras - Aliyun Mirror

baseurl:

- http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/

- http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/

- http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/

gpgcheck: no

failovermethod: priority

- name: epel

description: CentOS $releasever - EPEL - Aliyun Mirror

baseurl: http://mirrors.aliyun.com/epel/$releasever/$basearch

gpgcheck: no

failovermethod: priority

- name: grafana

description: Grafana - TsingHua Mirror

gpgcheck: no

baseurl: https://mirrors.tuna.tsinghua.edu.cn/grafana/yum/rpm

- name: prometheus

description: Prometheus and exporters

gpgcheck: no

baseurl: https://packagecloud.io/prometheus-rpm/release/el/$releasever/$basearch

# consider using ZJU PostgreSQL mirror in mainland china

- name: pgdg-common

description: PostgreSQL common RPMs for RHEL/CentOS $releasever - $basearch

gpgcheck: no

# baseurl: https://download.postgresql.org/pub/repos/yum/common/redhat/rhel-$releasever-$basearch

baseurl: http://mirrors.zju.edu.cn/postgresql/repos/yum/common/redhat/rhel-$releasever-$basearch

- name: pgdg13

description: PostgreSQL 13 for RHEL/CentOS $releasever - $basearch

gpgcheck: no

# baseurl: https://download.postgresql.org/pub/repos/yum/13/redhat/rhel-$releasever-$basearch

baseurl: http://mirrors.zju.edu.cn/postgresql/repos/yum/13/redhat/rhel-$releasever-$basearch

- name: centos-sclo

description: CentOS-$releasever - SCLo

gpgcheck: no

mirrorlist: http://mirrorlist.centos.org?arch=$basearch&release=7&repo=sclo-sclo

- name: centos-sclo-rh

description: CentOS-$releasever - SCLo rh

gpgcheck: no

mirrorlist: http://mirrorlist.centos.org?arch=$basearch&release=7&repo=sclo-rh

- name: nginx

description: Nginx Official Yum Repo

skip_if_unavailable: true

gpgcheck: no

baseurl: http://nginx.org/packages/centos/$releasever/$basearch/

- name: haproxy

description: Copr repo for haproxy

skip_if_unavailable: true

gpgcheck: no

baseurl: https://download.copr.fedorainfracloud.org/results/roidelapluie/haproxy/epel-$releasever-$basearch/

# for latest consul & kubernetes

- name: harbottle

description: Copr repo for main owned by harbottle

skip_if_unavailable: true

gpgcheck: no

baseurl: https://download.copr.fedorainfracloud.org/results/harbottle/main/epel-$releasever-$basearch/

# - what to download - #

repo_packages:

# repo bootstrap packages

- epel-release nginx wget yum-utils yum createrepo # bootstrap packages

# node basic packages

- ntp chrony uuid lz4 nc pv jq vim-enhanced make patch bash lsof wget unzip git tuned # basic system util

- readline zlib openssl libyaml libxml2 libxslt perl-ExtUtils-Embed ca-certificates # basic pg dependency

- numactl grubby sysstat dstat iotop bind-utils net-tools tcpdump socat ipvsadm telnet # system utils

# dcs & monitor packages

- grafana prometheus2 pushgateway alertmanager # monitor and ui

- node_exporter postgres_exporter nginx_exporter blackbox_exporter # exporter

- consul consul_exporter consul-template etcd # dcs

# python3 dependencies

- ansible python python-pip python-psycopg2 audit # ansible & python

- python3 python3-psycopg2 python36-requests python3-etcd python3-consul # python3

- python36-urllib3 python36-idna python36-pyOpenSSL python36-cryptography # python3 patroni extra deps

# proxy and load balancer

- haproxy keepalived dnsmasq # proxy and dns

# postgres common Packages

- patroni patroni-consul patroni-etcd pgbouncer pg_cli pgbadger pg_activity # major components

- pgcenter boxinfo check_postgres emaj pgbconsole pg_bloat_check pgquarrel # other common utils

- barman barman-cli pgloader pgFormatter pitrery pspg pgxnclient PyGreSQL pgadmin4 tail_n_mail

# postgres 13 packages

- postgresql13* postgis31* citus_13 timescaledb_13 # pgrouting_13 # postgres 13 and postgis 31

- pg_repack13 pg_squeeze13 # maintenance extensions

- pg_qualstats13 pg_stat_kcache13 system_stats_13 bgw_replstatus13 # stats extensions

- plr13 plsh13 plpgsql_check_13 plproxy13 pldebugger13 # PL extensions

- hdfs_fdw_13 mongo_fdw13 mysql_fdw_13 ogr_fdw13 redis_fdw_13 pgbouncer_fdw13 # FDW extensions

- wal2json13 count_distinct13 ddlx_13 geoip13 orafce13 # MISC extensions

- rum_13 hypopg_13 ip4r13 jsquery_13 logerrors_13 periods_13 pg_auto_failover_13 pg_catcheck13

- pg_fkpart13 pg_jobmon13 pg_partman13 pg_prioritize_13 pg_track_settings13 pgaudit15_13

- pgcryptokey13 pgexportdoc13 pgimportdoc13 pgmemcache-13 pgmp13 pgq-13

- pguint13 pguri13 prefix13 safeupdate_13 semver13 table_version13 tdigest13

repo_url_packages:

- https://github.com/Vonng/pg_exporter/releases/download/v0.3.2/pg_exporter-0.3.2-1.el7.x86_64.rpm

- https://github.com/cybertec-postgresql/vip-manager/releases/download/v0.6/vip-manager_0.6-1_amd64.rpm

- http://guichaz.free.fr/polysh/files/polysh-0.4-1.noarch.rpm

#------------------------------------------------------------------------------

# NODE PROVISION

#------------------------------------------------------------------------------

# this section defines how to provision nodes

# nodename: # if defined, node's hostname will be overwritten

# - node dns - #

node_dns_hosts: # static dns records in /etc/hosts

- 10.10.10.10 yum.pigsty

node_dns_server: add # add (default) | none (skip) | overwrite (remove old settings)

node_dns_servers: # dynamic nameserver in /etc/resolv.conf

- 10.10.10.10

node_dns_options: # dns resolv options

- options single-request-reopen timeout:1 rotate

- domain service.consul

# - node repo - #

node_repo_method: local # none|local|public (use local repo for production env)

node_repo_remove: true # whether remove existing repo

node_local_repo_url: # local repo url (if method=local, make sure firewall is configured or disabled)

- http://yum.pigsty/pigsty.repo

# - node packages - #

node_packages: # common packages for all nodes

- wget,yum-utils,ntp,chrony,tuned,uuid,lz4,vim-minimal,make,patch,bash,lsof,wget,unzip,git,readline,zlib,openssl

- numactl,grubby,sysstat,dstat,iotop,bind-utils,net-tools,tcpdump,socat,ipvsadm,telnet,tuned,pv,jq

- python3,python3-psycopg2,python36-requests,python3-etcd,python3-consul

- python36-urllib3,python36-idna,python36-pyOpenSSL,python36-cryptography

- node_exporter,consul,consul-template,etcd,haproxy,keepalived,vip-manager

node_extra_packages: # extra packages for all nodes

- patroni,patroni-consul,patroni-etcd,pgbouncer,pgbadger,pg_activity

node_meta_packages: # packages for meta nodes only

- grafana,prometheus2,alertmanager,nginx_exporter,blackbox_exporter,pushgateway

- dnsmasq,nginx,ansible,pgbadger,polysh

# - node features - #

node_disable_numa: false # disable numa, important for production database, reboot required

node_disable_swap: false # disable swap, important for production database

node_disable_firewall: true # disable firewall (required if using kubernetes)

node_disable_selinux: true # disable selinux (required if using kubernetes)

node_static_network: true # keep dns resolver settings after reboot

node_disk_prefetch: false # setup disk prefetch on HDD to increase performance

# - node kernel modules - #

node_kernel_modules:

- softdog

- br_netfilter

- ip_vs

- ip_vs_rr

- ip_vs_wrr

- ip_vs_sh

- nf_conntrack_ipv4

# - node tuned - #

node_tune: tiny # install and activate tuned profile: none|oltp|olap|crit|tiny

node_sysctl_params: # set additional sysctl parameters, k:v format

net.bridge.bridge-nf-call-iptables: 1 # for kubernetes

# - node user - #

node_admin_setup: true # set up a default admin user?

node_admin_uid: 88 # uid and gid for admin user

node_admin_username: admin # default admin user

node_admin_ssh_exchange: true # exchange ssh key among cluster ?

node_admin_pks: # public key list that will be installed

- 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQC7IMAMNavYtWwzAJajKqwdn3ar5BhvcwCnBTxxEkXhGlCO2vfgosSAQMEflfgvkiI5nM1HIFQ8KINlx1XLO7SdL5KdInG5LIJjAFh0pujS4kNCT9a5IGvSq1BrzGqhbEcwWYdju1ZPYBcJm/MG+JD0dYCh8vfrYB/cYMD0SOmNkQ== vagrant@pigsty.com'

# - node ntp - #

node_ntp_service: ntp # ntp or chrony

node_ntp_config: true # overwrite existing ntp config?

node_timezone: Asia/Shanghai # default node timezone

node_ntp_servers: # default NTP servers

- pool cn.pool.ntp.org iburst

- pool pool.ntp.org iburst

- pool time.pool.aliyun.com iburst

- server 10.10.10.10 iburst

#------------------------------------------------------------------------------

# META PROVISION

#------------------------------------------------------------------------------

# - ca - #

ca_method: create # create|copy|recreate

ca_subject: "/CN=root-ca" # self-signed CA subject

ca_homedir: /ca # ca cert directory

ca_cert: ca.crt # ca public key/cert

ca_key: ca.key # ca private key

# - nginx - #

nginx_upstream:

- { name: home, host: pigsty, url: "127.0.0.1:3000"}

- { name: consul, host: c.pigsty, url: "127.0.0.1:8500" }

- { name: grafana, host: g.pigsty, url: "127.0.0.1:3000" }

- { name: prometheus, host: p.pigsty, url: "127.0.0.1:9090" }

- { name: alertmanager, host: a.pigsty, url: "127.0.0.1:9093" }

- { name: haproxy, host: h.pigsty, url: "127.0.0.1:9091" }

# - nameserver - #

dns_records: # dynamic dns record resolved by dnsmasq

- 10.10.10.2 pg-meta # sandbox vip for pg-meta

- 10.10.10.3 pg-test # sandbox vip for pg-test

- 10.10.10.10 meta-1 # sandbox node meta-1 (node-0)

- 10.10.10.11 node-1 # sandbox node node-1

- 10.10.10.12 node-2 # sandbox node node-2

- 10.10.10.13 node-3 # sandbox node node-3

- 10.10.10.10 pigsty

- 10.10.10.10 y.pigsty yum.pigsty

- 10.10.10.10 c.pigsty consul.pigsty

- 10.10.10.10 g.pigsty grafana.pigsty

- 10.10.10.10 p.pigsty prometheus.pigsty

- 10.10.10.10 a.pigsty alertmanager.pigsty

- 10.10.10.10 n.pigsty ntp.pigsty

- 10.10.10.10 h.pigsty haproxy.pigsty

# - prometheus - #

prometheus_data_dir: /export/prometheus/data # prometheus data dir

prometheus_options: '--storage.tsdb.retention=30d'

prometheus_reload: false # reload prometheus instead of recreate it

prometheus_sd_method: consul # service discovery method: static|consul|etcd

prometheus_scrape_interval: 2s # global scrape & evaluation interval

prometheus_scrape_timeout: 1s # scrape timeout

prometheus_sd_interval: 2s # service discovery refresh interval

# - grafana - #

grafana_url: http://admin:admin@10.10.10.10:3000 # grafana url

grafana_admin_password: admin # default grafana admin user password

grafana_plugin: install # none|install|reinstall

grafana_cache: /www/pigsty/grafana/plugins.tar.gz # path to grafana plugins tarball

grafana_customize: true # customize grafana resources

grafana_plugins: # default grafana plugins list

- redis-datasource

- simpod-json-datasource

- fifemon-graphql-datasource

- sbueringer-consul-datasource

- camptocamp-prometheus-alertmanager-datasource

- ryantxu-ajax-panel

- marcusolsson-hourly-heatmap-panel

- michaeldmoore-multistat-panel

- marcusolsson-treemap-panel

- pr0ps-trackmap-panel

- dalvany-image-panel

- magnesium-wordcloud-panel

- cloudspout-button-panel

- speakyourcode-button-panel

- jdbranham-diagram-panel

- grafana-piechart-panel

- snuids-radar-panel

- digrich-bubblechart-panel

grafana_git_plugins:

- https://github.com/Vonng/grafana-echarts

#------------------------------------------------------------------------------

# DCS PROVISION

#------------------------------------------------------------------------------

service_registry: consul # where to register services: none | consul | etcd | both

dcs_type: consul # consul | etcd | both

dcs_name: pigsty # consul dc name | etcd initial cluster token

dcs_servers: # dcs server dict in name:ip format

meta-1: 10.10.10.10 # you could use existing dcs cluster

# meta-2: 10.10.10.11 # host which have their IP listed here will be init as server

# meta-3: 10.10.10.12 # 3 or 5 dcs nodes are recommended for production environment

dcs_exists_action: clean # abort|skip|clean if dcs server already exists

dcs_disable_purge: false # set to true to disable purge functionality for good (force dcs_exists_action = abort)

consul_data_dir: /var/lib/consul # consul data dir (/var/lib/consul by default)

etcd_data_dir: /var/lib/etcd # etcd data dir (/var/lib/etcd by default)

#------------------------------------------------------------------------------

# POSTGRES INSTALLATION

#------------------------------------------------------------------------------

# - dbsu - #

pg_dbsu: postgres # os user for database, postgres by default (change it is not recommended!)

pg_dbsu_uid: 26 # os dbsu uid and gid, 26 for default postgres users and groups

pg_dbsu_sudo: limit # none|limit|all|nopass (Privilege for dbsu, limit is recommended)

pg_dbsu_home: /var/lib/pgsql # home directory of the dbsu

pg_dbsu_ssh_exchange: false # exchange ssh key among same cluster

# - postgres packages - #

pg_version: 13 # default postgresql version

pgdg_repo: false # use official pgdg yum repo (disable if you have local mirror)

pg_add_repo: false # add postgres related repo before install (useful if you want a simple install)

pg_bin_dir: /usr/pgsql/bin # postgres binary dir

pg_packages:

- postgresql${pg_version}*

- postgis31_${pg_version}*

- pgbouncer patroni pg_exporter pgbadger

- patroni patroni-consul patroni-etcd pgbouncer pgbadger pg_activity

- python3 python3-psycopg2 python36-requests python3-etcd python3-consul

- python36-urllib3 python36-idna python36-pyOpenSSL python36-cryptography

pg_extensions:

- pg_repack${pg_version} pg_qualstats${pg_version} pg_stat_kcache${pg_version} wal2json${pg_version}

# - ogr_fdw${pg_version} mysql_fdw_${pg_version} redis_fdw_${pg_version} mongo_fdw${pg_version} hdfs_fdw_${pg_version}

# - count_distinct${pg_version} ddlx_${pg_version} geoip${pg_version} orafce${pg_version} # popular features

# - hypopg_${pg_version} ip4r${pg_version} jsquery_${pg_version} logerrors_${pg_version} periods_${pg_version} pg_auto_failover_${pg_version} pg_catcheck${pg_version}

# - pg_fkpart${pg_version} pg_jobmon${pg_version} pg_partman${pg_version} pg_prioritize_${pg_version} pg_track_settings${pg_version} pgaudit15_${pg_version}

# - pgcryptokey${pg_version} pgexportdoc${pg_version} pgimportdoc${pg_version} pgmemcache-${pg_version} pgmp${pg_version} pgq-${pg_version} pgquarrel pgrouting_${pg_version}

# - pguint${pg_version} pguri${pg_version} prefix${pg_version} safeupdate_${pg_version} semver${pg_version} table_version${pg_version} tdigest${pg_version}

#------------------------------------------------------------------------------

# POSTGRES PROVISION

#------------------------------------------------------------------------------

# - identity - #

# pg_cluster: # [REQUIRED] cluster name (validated during pg_preflight)

# pg_seq: 0 # [REQUIRED] instance seq (validated during pg_preflight)

# pg_role: replica # [REQUIRED] service role (validated during pg_preflight)

pg_hostname: false # overwrite node hostname with pg instance name

pg_nodename: true # overwrite consul nodename with pg instance name

# - retention - #

# pg_exists_action, available options: abort|clean|skip

# - abort: abort entire play's execution (default)

# - clean: remove existing cluster (dangerous)

# - skip: end current play for this host

# pg_exists: false # auxiliary flag variable (DO NOT SET THIS)

pg_exists_action: clean

pg_disable_purge: false # set to true to disable pg purge functionality for good (force pg_exists_action = abort)

# - storage - #

pg_data: /pg/data # postgres data directory

pg_fs_main: /export # data disk mount point /pg -> {{ pg_fs_main }}/postgres/{{ pg_instance }}

pg_fs_bkup: /var/backups # backup disk mount point /pg/* -> {{ pg_fs_bkup }}/postgres/{{ pg_instance }}/*

# - connection - #

pg_listen: '0.0.0.0' # postgres listen address, '0.0.0.0' by default (all ipv4 addr)

pg_port: 5432 # postgres port (5432 by default)

pg_localhost: /var/run/postgresql # localhost unix socket dir for connection

# - patroni - #

# patroni_mode, available options: default|pause|remove

# - default: default ha mode

# - pause: into maintenance mode

# - remove: remove patroni after bootstrap

patroni_mode: default # pause|default|remove

pg_namespace: /pg # top level key namespace in dcs

patroni_port: 8008 # default patroni port

patroni_watchdog_mode: automatic # watchdog mode: off|automatic|required

pg_conf: tiny.yml # user provided patroni config template path

# - localization - #

pg_encoding: UTF8 # default to UTF8

pg_locale: C # default to C

pg_lc_collate: C # default to C

pg_lc_ctype: en_US.UTF8 # default to en_US.UTF8

# - pgbouncer - #

pgbouncer_port: 6432 # pgbouncer port (6432 by default)

pgbouncer_poolmode: transaction # pooling mode: (transaction pooling by default)

pgbouncer_max_db_conn: 100 # important! do not set this larger than postgres max conn or conn limit

#------------------------------------------------------------------------------

# POSTGRES TEMPLATE

#------------------------------------------------------------------------------

# - template - #

pg_init: pg-init # init script for cluster template

# - system roles - #

pg_replication_username: replicator # system replication user

pg_replication_password: DBUser.Replicator # system replication password

pg_monitor_username: dbuser_monitor # system monitor user

pg_monitor_password: DBUser.Monitor # system monitor password

pg_admin_username: dbuser_admin # system admin user

pg_admin_password: DBUser.Admin # system admin password

# - default roles - #

# check http://pigsty.cc/zh/docs/concepts/provision/acl/ for more detail

pg_default_roles:

# common production readonly user

- name: dbrole_readonly # production read-only roles

login: false

comment: role for global readonly access

# common production read-write user

- name: dbrole_readwrite # production read-write roles

login: false

roles: [dbrole_readonly] # read-write includes read-only access

comment: role for global read-write access

# offline have same privileges as readonly, but with limited hba access on offline instance only

# for the purpose of running slow queries, interactive queries and perform ETL tasks

- name: dbrole_offline

login: false

comment: role for restricted read-only access (offline instance)

# admin have the privileges to issue DDL changes

- name: dbrole_admin

login: false

bypassrls: true

comment: role for object creation

roles: [dbrole_readwrite,pg_monitor,pg_signal_backend]

# dbsu, name is designated by `pg_dbsu`. It's not recommended to set a password for dbsu

- name: postgres

superuser: true

comment: system superuser

# default replication user, name is designated by `pg_replication_username`, and password is set by `pg_replication_password`

- name: replicator

replication: true # for replication user

bypassrls: true # logical replication require bypassrls

roles: [pg_monitor, dbrole_readonly] # logical replication require select privileges

comment: system replicator

# default monitor user, name is designated by `pg_monitor_username`, and password is set by `pg_monitor_password`

- name: dbuser_monitor

connlimit: 16

comment: system monitor user

roles: [pg_monitor, dbrole_readonly]

# default admin user, name is designated by `pg_admin_username`, and password is set by `pg_admin_password`

- name: dbuser_admin

bypassrls: true

superuser: true

comment: system admin user

roles: [dbrole_admin]

# default stats user, for ETL and slow queries

- name: dbuser_stats

password: DBUser.Stats

comment: business offline user for offline queries and ETL

roles: [dbrole_offline]

# - privileges - #

# object created by dbsu and admin will have their privileges properly set

pg_default_privileges:

- GRANT USAGE ON SCHEMAS TO dbrole_readonly

- GRANT SELECT ON TABLES TO dbrole_readonly

- GRANT SELECT ON SEQUENCES TO dbrole_readonly

- GRANT EXECUTE ON FUNCTIONS TO dbrole_readonly

- GRANT USAGE ON SCHEMAS TO dbrole_offline

- GRANT SELECT ON TABLES TO dbrole_offline

- GRANT SELECT ON SEQUENCES TO dbrole_offline

- GRANT EXECUTE ON FUNCTIONS TO dbrole_offline

- GRANT INSERT, UPDATE, DELETE ON TABLES TO dbrole_readwrite

- GRANT USAGE, UPDATE ON SEQUENCES TO dbrole_readwrite

- GRANT TRUNCATE, REFERENCES, TRIGGER ON TABLES TO dbrole_admin

- GRANT CREATE ON SCHEMAS TO dbrole_admin

# - schemas - #

pg_default_schemas: [monitor] # default schemas to be created

# - extension - #

pg_default_extensions: # default extensions to be created

- { name: 'pg_stat_statements', schema: 'monitor' }

- { name: 'pgstattuple', schema: 'monitor' }

- { name: 'pg_qualstats', schema: 'monitor' }

- { name: 'pg_buffercache', schema: 'monitor' }

- { name: 'pageinspect', schema: 'monitor' }

- { name: 'pg_prewarm', schema: 'monitor' }

- { name: 'pg_visibility', schema: 'monitor' }

- { name: 'pg_freespacemap', schema: 'monitor' }

- { name: 'pg_repack', schema: 'monitor' }

- name: postgres_fdw

- name: file_fdw

- name: btree_gist

- name: btree_gin

- name: pg_trgm

- name: intagg

- name: intarray

# - hba - #

pg_offline_query: false # set to true to enable offline query on instance

pg_reload: true # reload postgres after hba changes

pg_hba_rules: # postgres host-based authentication rules

- title: allow meta node password access

role: common

rules:

- host all all 10.10.10.10/32 md5

- title: allow intranet admin password access

role: common

rules:

- host all +dbrole_admin 10.0.0.0/8 md5

- host all +dbrole_admin 172.16.0.0/12 md5

- host all +dbrole_admin 192.168.0.0/16 md5

- title: allow intranet password access

role: common

rules:

- host all all 10.0.0.0/8 md5

- host all all 172.16.0.0/12 md5

- host all all 192.168.0.0/16 md5

- title: allow local read/write (local production user via pgbouncer)

role: common

rules:

- local all +dbrole_readonly md5

- host all +dbrole_readonly 127.0.0.1/32 md5

- title: allow offline query (ETL,SAGA,Interactive) on offline instance

role: offline

rules:

- host all +dbrole_offline 10.0.0.0/8 md5

- host all +dbrole_offline 172.16.0.0/12 md5

- host all +dbrole_offline 192.168.0.0/16 md5

pg_hba_rules_extra: [] # extra hba rules (for cluster/instance overwrite)

pgbouncer_hba_rules: # pgbouncer host-based authentication rules

- title: local password access

role: common

rules:

- local all all md5

- host all all 127.0.0.1/32 md5

- title: intranet password access

role: common

rules:

- host all all 10.0.0.0/8 md5

- host all all 172.16.0.0/12 md5

- host all all 192.168.0.0/16 md5

pgbouncer_hba_rules_extra: [] # extra pgbouncer hba rules (for cluster/instance overwrite)

# pg_users: [] # business users

# pg_databases: [] # business databases

#------------------------------------------------------------------------------

# MONITOR PROVISION

#------------------------------------------------------------------------------

# - install - #

exporter_install: none # none|yum|binary, none by default

exporter_repo_url: '' # if set, repo will be added to /etc/yum.repos.d/ before yum installation

# - collect - #

exporter_metrics_path: /metrics # default metric path for pg related exporter

# - node exporter - #

node_exporter_enabled: true # setup node_exporter on instance

node_exporter_port: 9100 # default port for node exporter

node_exporter_options: '--no-collector.softnet --collector.systemd --collector.ntp --collector.tcpstat --collector.processes'

# - pg exporter - #

pg_exporter_config: pg_exporter-demo.yaml # default config files for pg_exporter

pg_exporter_enabled: true # setup pg_exporter on instance

pg_exporter_port: 9630 # default port for pg exporter

pg_exporter_url: '' # optional, if not set, generate from reference parameters

# - pgbouncer exporter - #

pgbouncer_exporter_enabled: true # setup pgbouncer_exporter on instance (if you don't have pgbouncer, disable it)

pgbouncer_exporter_port: 9631 # default port for pgbouncer exporter

pgbouncer_exporter_url: '' # optional, if not set, generate from reference parameters

#------------------------------------------------------------------------------

# SERVICE PROVISION

#------------------------------------------------------------------------------

pg_weight: 100 # default load balance weight (instance level)

# - service - #

pg_services: # how to expose postgres service in cluster?

# primary service will route {ip|name}:5433 to primary pgbouncer (5433->6432 rw)

- name: primary # service name {{ pg_cluster }}_primary

src_ip: "*"

src_port: 5433

dst_port: pgbouncer # 5433 route to pgbouncer

check_url: /primary # primary health check, success when instance is primary

selector: "[]" # select all instance as primary service candidate

# replica service will route {ip|name}:5434 to replica pgbouncer (5434->6432 ro)

- name: replica # service name {{ pg_cluster }}_replica

src_ip: "*"

src_port: 5434

dst_port: pgbouncer

check_url: /read-only # read-only health check. (including primary)

selector: "[]" # select all instance as replica service candidate

selector_backup: "[? pg_role == `primary`]" # primary are used as backup server in replica service

# default service will route {ip|name}:5436 to primary postgres (5436->5432 primary)

- name: default # service's actual name is {{ pg_cluster }}-{{ service.name }}

src_ip: "*" # service bind ip address, * for all, vip for cluster virtual ip address

src_port: 5436 # bind port, mandatory

dst_port: postgres # target port: postgres|pgbouncer|port_number , pgbouncer(6432) by default

check_method: http # health check method: only http is available for now

check_port: patroni # health check port: patroni|pg_exporter|port_number , patroni by default

check_url: /primary # health check url path, / as default

check_code: 200 # health check http code, 200 as default

selector: "[]" # instance selector

haproxy: # haproxy specific fields

maxconn: 3000 # default front-end connection

balance: roundrobin # load balance algorithm (roundrobin by default)

default_server_options: 'inter 3s fastinter 1s downinter 5s rise 3 fall 3 on-marked-down shutdown-sessions slowstart 30s maxconn 3000 maxqueue 128 weight 100'

# offline service will route {ip|name}:5438 to offline postgres (5438->5432 offline)

- name: offline # service name {{ pg_cluster }}_offline

src_ip: "*"

src_port: 5438

dst_port: postgres

check_url: /replica # offline MUST be a replica

selector: "[? pg_role == `offline` || pg_offline_query ]" # instances with pg_role == 'offline' or instance marked with 'pg_offline_query == true'

selector_backup: "[? pg_role == `replica` && !pg_offline_query]" # replica are used as backup server in offline service

pg_services_extra: [] # extra services to be added

# - haproxy - #

haproxy_enabled: true # enable haproxy among every cluster members

haproxy_reload: true # reload haproxy after config

haproxy_admin_auth_enabled: false # enable authentication for haproxy admin?

haproxy_admin_username: admin # default haproxy admin username

haproxy_admin_password: admin # default haproxy admin password

haproxy_exporter_port: 9101 # default admin/exporter port

haproxy_client_timeout: 3h # client side connection timeout

haproxy_server_timeout: 3h # server side connection timeout

# - vip - #

vip_mode: none # none | l2 | l4

vip_reload: true # whether reload service after config

# vip_address: 127.0.0.1 # virtual ip address ip (l2 or l4)

# vip_cidrmask: 24 # virtual ip address cidr mask (l2 only)

# vip_interface: eth0 # virtual ip network interface (l2 only)

...

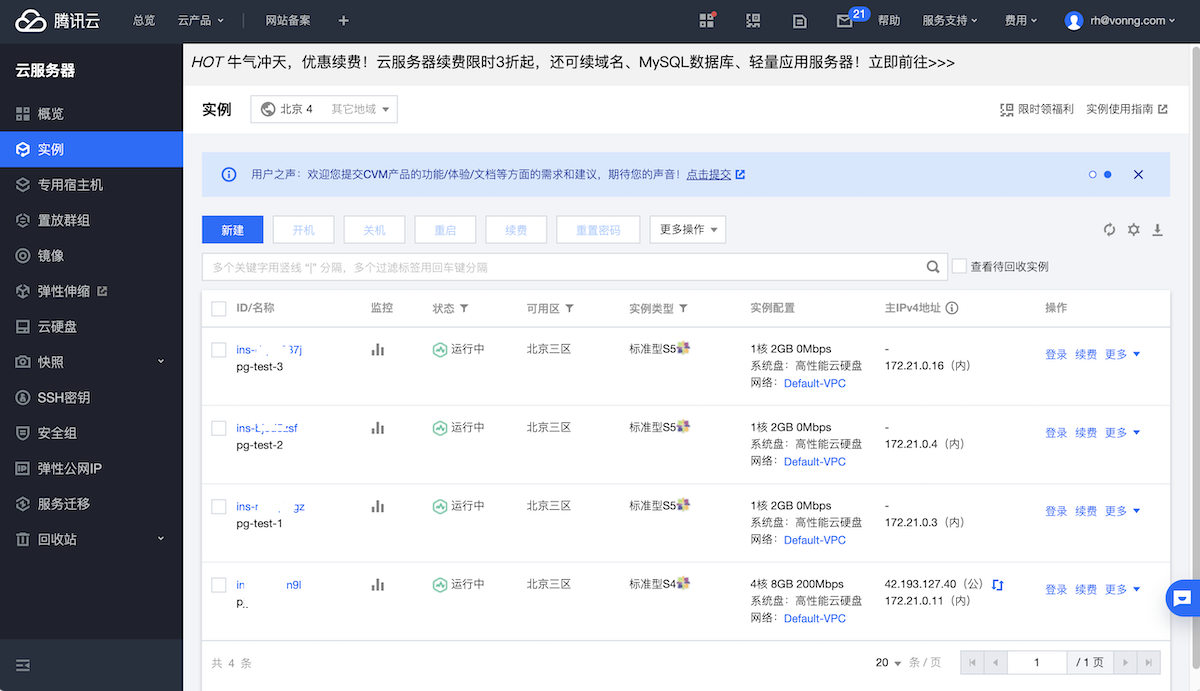

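2 - Tencent Cloud VPC Deployment

This example deploys Pigsty in a Tencent Cloud VPC.

Prepare Resources

Provision Virtual Machines

Purchase a few virtual machines, as shown in the figure below. The machine at 172.21.0.11 serves as the meta node and has a public IP; the three database nodes can be ordinary 1-core, 1 GB instances.

Configure SSH Remote Login

Assume the admin user is named vonng (that's me!). First, set up passwordless SSH access from the meta node to the other three nodes.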

# vonng@172.21.0.11 # meta

ssh-copy-id root@172.21.0.3 # pg-test-1

ssh-copy-id root@172.21.0.4 # pg-test-2

ssh-copy-id root@172.21.0.16 # pg-test-3

scp ~/.ssh/id_rsa.pub root@172.21.0.3:/tmp/

scp ~/.ssh/id_rsa.pub root@172.21.0.4:/tmp/

scp ~/.ssh/id_rsa.pub root@172.21.0.16:/tmp/

ssh root@172.21.0.3 'useradd vonng; mkdir -m 700 -p /home/vonng/.ssh; mv /tmp/id_rsa.pub /home/vonng/.ssh/authorized_keys; chown -R vonng /home/vonng; chmod 0600 /home/vonng/.ssh/authorized_keys;'

ssh root@172.21.0.4 'useradd vonng; mkdir -m 700 -p /home/vonng/.ssh; mv /tmp/id_rsa.pub /home/vonng/.ssh/authorized_keys; chown -R vonng /home/vonng; chmod 0600 /home/vonng/.ssh/authorized_keys;'

ssh root@172.21.0.16 'useradd vonng; mkdir -m 700 -p /home/vonng/.ssh; mv /tmp/id_rsa.pub /home/vonng/.ssh/authorized_keys; chown -R vonng /home/vonng; chmod 0600 /home/vonng/.ssh/authorized_keys;'

Then grant this user passwordless sudo privileges:

ssh root@172.21.0.3 "echo '%vonng ALL=(ALL) NOPASSWD: ALL' > /etc/sudoers.d/vonng"

ssh root@172.21.0.4 "echo '%vonng ALL=(ALL) NOPASSWD: ALL' > /etc/sudoers.d/vonng"

ssh root@172.21.0.16 "echo '%vonng ALL=(ALL) NOPASSWD: ALL' > /etc/sudoers.d/vonng"

# Verify that the configuration works

ssh 172.21.0.3 'sudo ls'

ssh 172.21.0.4 'sudo ls'

ssh 172.21.0.16 'sudo ls'

Download the Project

# Clone the code from GitHub

git clone https://github.com/Vonng/pigsty

# If you cannot access GitHub, download the source tarball from the Pigsty CDN instead

curl http://pigsty-1304147732.cos.accelerate.myqcloud.com/latest/pigsty.tar.gz -o pigsty.tgz && tar -xf pigsty.tgz && cd pigsty

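Download the Offline Installation Package

# Download from the GitHub Releases page

# https://github.com/Vonng/pigsty

# If you cannot access GitHub, download the offline package from the Pigsty CDN instead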

curl http://pigsty-1304147732.cos.accelerate.myqcloud.com/latest/pkg.tgz -o files/pkg.tgz

# Extract the offline package to the designated location on the meta node (may require sudo)

mv -f /www/pigsty /www/pigsty-backup && mkdir -p /www/pigsty

tar -xf files/pkg.tgz --strip-components=1 -C /www/pigsty/

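Adjust the Configuration

We can start from the Pigsty sandbox configuration file. Because these are all ordinary low-spec virtual machines, no substantive configuration changes are needed; only the connection parameters and node information must be updated. In short, you just need to change the IP addresses!

Now replace every sandbox IP address with the actual IP address in the cloud environment. (If you use an L2 VIP, replace the VIP with a valid address as well.)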

| Description | Sandbox IP | VM IP |
|---|---|---|
| Meta node | 10.10.10.10 | 172.21.0.11 |
| Database node 1 | 10.10.10.11 | 172.21.0.3 |
| Database node 2 | 10.10.10.12 | 172.21.0.4 |
| Database node 3 | 10.10.10.13 | 172.21.0.16 |
| pg-meta VIP | 10.10.10.2 | 172.21.0.8 |
| pg-test VIP | 10.10.10.3 | 172.21.0.9 |

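Edit the configuration file pigsty.yml. If the virtual machines have similar specs, you usually only need to change the IP addresses. Note in particular that the sandbox connects through SSH aliases (such as meta and node-1), so remember to remove every ansible_host setting; we will connect to the target nodes directly by IP address.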

cat pigsty.yml | \

sed 's/10.10.10.10/172.21.0.11/g' |\

sed 's/10.10.10.11/172.21.0.3/g' |\

sed 's/10.10.10.12/172.21.0.4/g' |\

sed 's/10.10.10.13/172.21.0.16/g' |\

sed 's/10.10.10.2/172.21.0.8/g' |\

sed 's/10.10.10.3/172.21.0.9/g' |\

sed 's/, ansible_host: meta//g' |\

sed 's/ansible_host: meta//g' |\

sed 's/, ansible_host: node-[123]//g' |\

sed 's/vip_interface: eth1/vip_interface: eth0/g' |\

sed 's/vip_cidrmask: 8/vip_cidrmask: 24/g' > pigsty2.yml

mv pigsty.yml pigsty-backup.yml; mv pigsty2.yml pigsty.yml
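The pipeline above works for the sandbox file, but its patterns use unescaped dots and depend on the order of the substitutions. As a hedged alternative, the sketch below rewrites only whole addresses. The helper names `build_sed_script` and `rewrite_ips` are hypothetical and not part of Pigsty; the mapping is copied from the table above. It covers only the address rewrite, so the `ansible_host`, `vip_interface`, and `vip_cidrmask` substitutions are still needed.

```shell
#!/bin/sh
set -eu

# Sandbox-to-cloud address mapping from the table above, one "old=new" per line.
MAPPING="10.10.10.10=172.21.0.11
10.10.10.11=172.21.0.3
10.10.10.12=172.21.0.4
10.10.10.13=172.21.0.16
10.10.10.2=172.21.0.8
10.10.10.3=172.21.0.9"

# Hypothetical helper: emit one sed substitution per mapping entry.
# Dots are escaped and the address must not be preceded by a digit or dot,
# nor followed by a digit, so 10.10.10.2 never matches inside 10.10.10.20.
build_sed_script() {
  printf '%s\n' "$MAPPING" | while IFS='=' read -r old new; do
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    expr="s/(^|[^0-9.])${old_re}([^0-9]|\$)/\\1${new}\\2/g"
    # Each command is emitted twice: a match consumes its trailing separator,
    # so a second pass is needed when the same address repeats back to back.
    printf '%s\n%s\n' "$expr" "$expr"
  done
}

# Hypothetical helper: filter stdin to stdout, rewriting sandbox addresses.
rewrite_ips() {
  script=$(build_sed_script)
  sed -E "$script"
}

# Small demonstration on three lines taken from the sandbox configuration.
printf '%s\n' \
  'hosts: {10.10.10.10: {ansible_host: meta}}' \
  'vip_address: 10.10.10.2' \
  '- host all all 10.10.10.10/32 md5' | rewrite_ips
```

To apply it to a real file, something like `rewrite_ips < pigsty.yml > pigsty2.yml` should work, after which the two files can be swapped as shown above.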

That's it?

Yes, the configuration file is now fully updated! Let's look at what actually changed:

$ diff pigsty.yml pigsty-backup.yml

38c38

< hosts: {172.21.0.11: {}}

---

> hosts: {10.10.10.10: {ansible_host: meta}}

46c46

< 172.21.0.11: {pg_seq: 1, pg_role: primary}

---

> 10.10.10.10: {pg_seq: 1, pg_role: primary, ansible_host: meta}

109,111c109,111

< vip_address: 172.21.0.8 # virtual ip address

< vip_cidrmask: 24 # cidr network mask length

< vip_interface: eth0 # interface to add virtual ip

---

> vip_address: 10.10.10.2 # virtual ip address

> vip_cidrmask: 8 # cidr network mask length

> vip_interface: eth1 # interface to add virtual ip

120,122c120,122

< 172.21.0.3: {pg_seq: 1, pg_role: primary}

< 172.21.0.4: {pg_seq: 2, pg_role: replica}

< 172.21.0.16: {pg_seq: 3, pg_role: offline}

---

> 10.10.10.11: {pg_seq: 1, pg_role: primary, ansible_host: node-1}

> 10.10.10.12: {pg_seq: 2, pg_role: replica, ansible_host: node-2}

> 10.10.10.13: {pg_seq: 3, pg_role: offline, ansible_host: node-3}

147,149c147,149

< vip_address: 172.21.0.9 # virtual ip address

< vip_cidrmask: 24 # cidr network mask length

< vip_interface: eth0 # interface to add virtual ip

---

> vip_address: 10.10.10.3 # virtual ip address

> vip_cidrmask: 8 # cidr network mask length

> vip_interface: eth1 # interface to add virtual ip

326c326

< - 172.21.0.11 yum.pigsty

---

> - 10.10.10.10 yum.pigsty

329c329

< - 172.21.0.11

---

> - 10.10.10.10

393c393

< - server 172.21.0.11 iburst

---

> - server 10.10.10.10 iburst

417,430c417,430

< - 172.21.0.8 pg-meta # sandbox vip for pg-meta

< - 172.21.0.9 pg-test # sandbox vip for pg-test

< - 172.21.0.11 meta-1 # sandbox node meta-1 (node-0)

< - 172.21.0.3 node-1 # sandbox node node-1

< - 172.21.0.4 node-2 # sandbox node node-2

< - 172.21.0.16 node-3 # sandbox node node-3

< - 172.21.0.11 pigsty

< - 172.21.0.11 y.pigsty yum.pigsty

< - 172.21.0.11 c.pigsty consul.pigsty

< - 172.21.0.11 g.pigsty grafana.pigsty

< - 172.21.0.11 p.pigsty prometheus.pigsty

< - 172.21.0.11 a.pigsty alertmanager.pigsty

< - 172.21.0.11 n.pigsty ntp.pigsty

< - 172.21.0.11 h.pigsty haproxy.pigsty

---

> - 10.10.10.2 pg-meta # sandbox vip for pg-meta

> - 10.10.10.3 pg-test # sandbox vip for pg-test

> - 10.10.10.10 meta-1 # sandbox node meta-1 (node-0)

> - 10.10.10.11 node-1 # sandbox node node-1

> - 10.10.10.12 node-2 # sandbox node node-2

> - 10.10.10.13 node-3 # sandbox node node-3

> - 10.10.10.10 pigsty

> - 10.10.10.10 y.pigsty yum.pigsty

> - 10.10.10.10 c.pigsty consul.pigsty

> - 10.10.10.10 g.pigsty grafana.pigsty

> - 10.10.10.10 p.pigsty prometheus.pigsty

> - 10.10.10.10 a.pigsty alertmanager.pigsty

> - 10.10.10.10 n.pigsty ntp.pigsty

> - 10.10.10.10 h.pigsty haproxy.pigsty

442c442

< grafana_url: http://admin:admin@172.21.0.11:3000 # grafana url

---

> grafana_url: http://admin:admin@10.10.10.10:3000 # grafana url

478,480c478,480

< meta-1: 172.21.0.11 # you could use existing dcs cluster

< # meta-2: 172.21.0.3 # host which have their IP listed here will be init as server

< # meta-3: 172.21.0.4 # 3 or 5 dcs nodes are recommend for production environment

---

> meta-1: 10.10.10.10 # you could use existing dcs cluster

> # meta-2: 10.10.10.11 # host which have their IP listed here will be init as server

> # meta-3: 10.10.10.12 # 3 or 5 dcs nodes are recommend for production environment

692c692

< - host all all 172.21.0.11/32 md5

---

> - host all all 10.10.10.10/32 md5
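Besides reading the diff, it can be useful to confirm that no sandbox address survived the rewrite. The following is a minimal sketch under the assumption that every sandbox address lies in 10.10.10.x, as in the table above; `find_sandbox_ips` is a hypothetical helper, not something Pigsty ships.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: print every line that still contains a 10.10.10.x
# address. Returns 0 when the file is clean, 1 when leftovers were found,
# and 2 when the file cannot be read.
find_sandbox_ips() {
  file="$1"
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
  if grep -nE '(^|[^0-9.])10\.10\.10\.[0-9]+' "$file"; then
    return 1
  fi
  return 0
}

# Demonstration on a temporary file with one leftover sandbox address.
tmp=$(mktemp)
printf '%s\n' 'meta-1: 172.21.0.11' '- host all all 10.10.10.10/32 md5' > "$tmp"
if find_sandbox_ips "$tmp"; then
  echo "no sandbox addresses left"
else
  echo "sandbox addresses remain (see the lines printed above)"
fi
rm -f "$tmp"
```

Against the real file, the call would be `find_sandbox_ips pigsty.yml`.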

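Run the Playbooks

You can follow the same sandbox initialization procedure to initialize the infrastructure and the database clusters.

Apart from the IP addresses, the output is identical to that of the sandbox. See the reference output.

Access the Demo

You can now reach the services on the meta node through its public IP! Be sure to take appropriate security precautions.

Unlike the sandbox environment, if you want to access the Pigsty management UI from the public network, you must add the configured domain names to /etc/hosts yourself, or use a domain name you have actually registered.

Otherwise, you can only connect directly by IP and port, for example: http://<meta_node_public_ip>:3000.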
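As an illustration of the /etc/hosts approach, the sketch below prints the lines you would add on your own workstation. It assumes the sandbox-style domain names that appear in the configuration diff (pigsty, g.pigsty, p.pigsty, a.pigsty, c.pigsty, h.pigsty); `pigsty_hosts_entries` is a hypothetical helper, and 203.0.113.10 is a documentation-only placeholder for the meta node's public IP. If you changed the domain names in your configuration, adjust the list accordingly.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: print /etc/hosts lines that map the Pigsty web UI
# domain names (assumed to be the sandbox defaults) to the given IP address.
pigsty_hosts_entries() {
  ip="$1"
  for name in pigsty g.pigsty p.pigsty a.pigsty c.pigsty h.pigsty; do
    printf '%s %s\n' "$ip" "$name"
  done
}

# Print the entries for a placeholder public IP; nothing is written to disk.
pigsty_hosts_entries 203.0.113.10
```

After reviewing the output, it could be appended with something like `pigsty_hosts_entries <meta_node_public_ip> | sudo tee -a /etc/hosts`.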

The domain names that Nginx listens on can be configured through the nginx_upstream option.

nginx_upstream:

- { name: home, host: pigsty.cc, url: "127.0.0.1:3000"}

- { name: consul, host: c.pigsty.cc, url: "127.0.0.1:8500" }

- { name: grafana, host: g.pigsty.cc, url: "127.0.0.1:3000" }

- { name: prometheus, host: p.pigsty.cc, url: "127.0.0.1:9090" }

- { name: alertmanager, host: a.pigsty.cc, url: "127.0.0.1:9093" }

- { name: haproxy, host: h.pigsty.cc, url: "127.0.0.1:9091" }

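3 - Production Deployment

This example is based on a real production environment.

The environment consists of 200 high-spec x86 physical servers: Dell R740, 64 CPU cores / 400 GB memory / 4 TB PCIe SSD / dual 10 GbE NICs.

Prepare Resources

Adjust the Configuration

Run the Playbooks

Access the Services

4 - Integrating with Alibaba Cloud MyBase

Pigsty ships with a built-in database provisioning solution, but it can also serve purely as a monitoring system integrated with an external provisioning solution, such as Alibaba Cloud MyBase for PostgreSQL.

When integrating with an external system, you only need to deploy a single meta node to host the monitoring infrastructure. Node Exporter and PG Exporter must also be installed on the monitored machines to collect metrics.

Pigsty provides a static service discovery mechanism and a binary deployment mode for exporters to minimize intrusion into the external system.

The following walks through a real example of using Pigsty to monitor Alibaba Cloud MyBase.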