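Pigsty is designed for monitoring and managing large-scale database clusters. It offers a simple, convenient solution for provisioning and managing highly available databases, along with a first-class graphical monitoring and management interface. Pigsty aims to lower the barrier to using and managing databases and to raise the baseline of PostgreSQL operations.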

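Pigsty has been tested over a long period in real production environments. It is open source under the Apache 2.0 license and free to use for both testing and production. The author accepts no liability for any loss caused by using this project, but optional commercial support is available.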


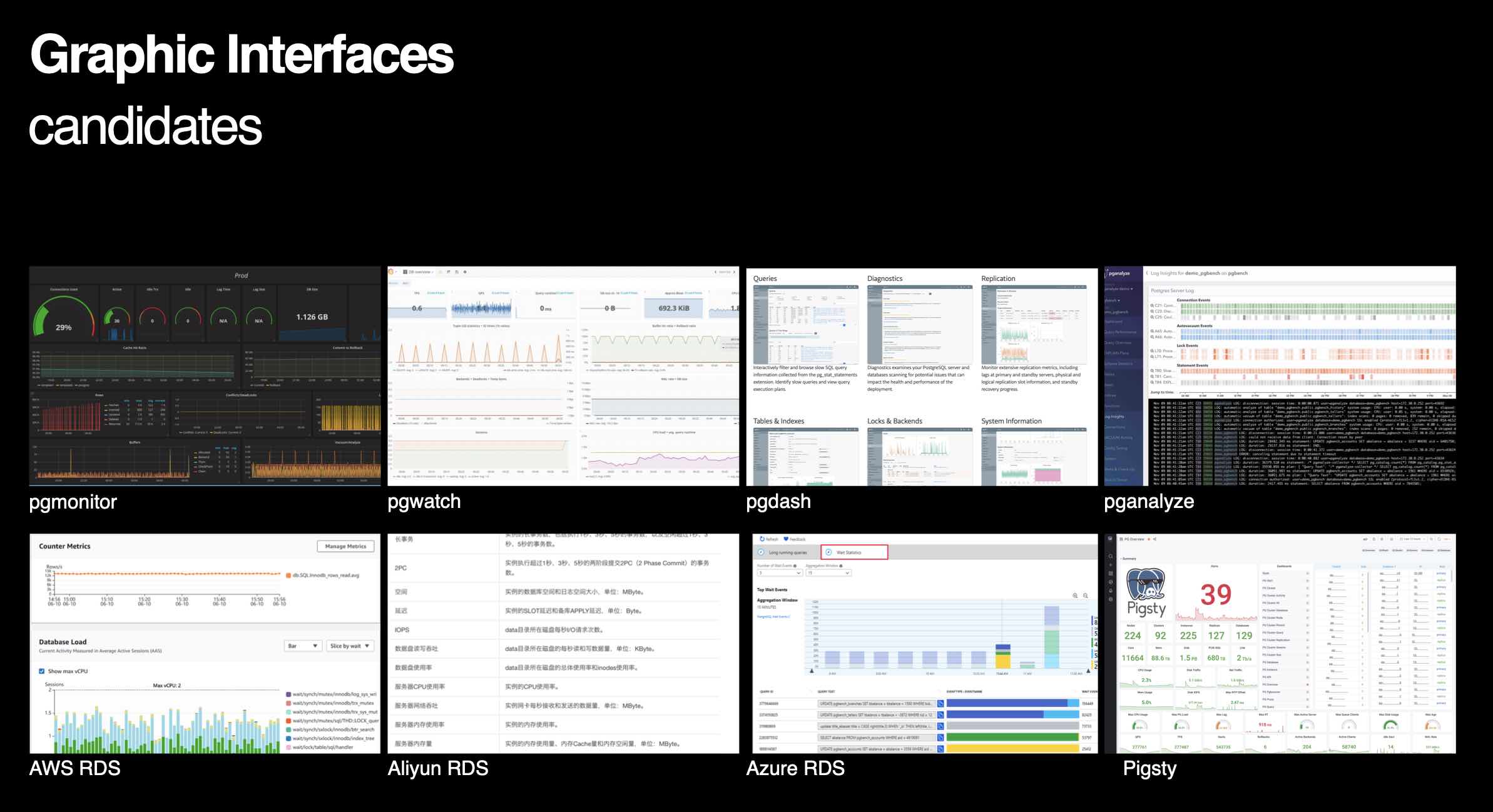

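Pigsty aims to provide the best monitoring system for PostgreSQL. PostgreSQL is the best open-source relational database in the world, yet its ecosystem lacks a good enough monitoring system. Pigsty is meant to fill that gap.

Pigsty was created because the author had to manage a large-scale PostgreSQL cluster and, after going through all the open-source and commercial monitoring solutions on the market, found none of them "good enough", so he built this system himself.

As its developer, I see a great deal of room for improvement in this system. As an end user, though, I believe it has already become the best PostgreSQL monitoring system in the world.

Pigsty is also a provisioning solution for highly available database clusters.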

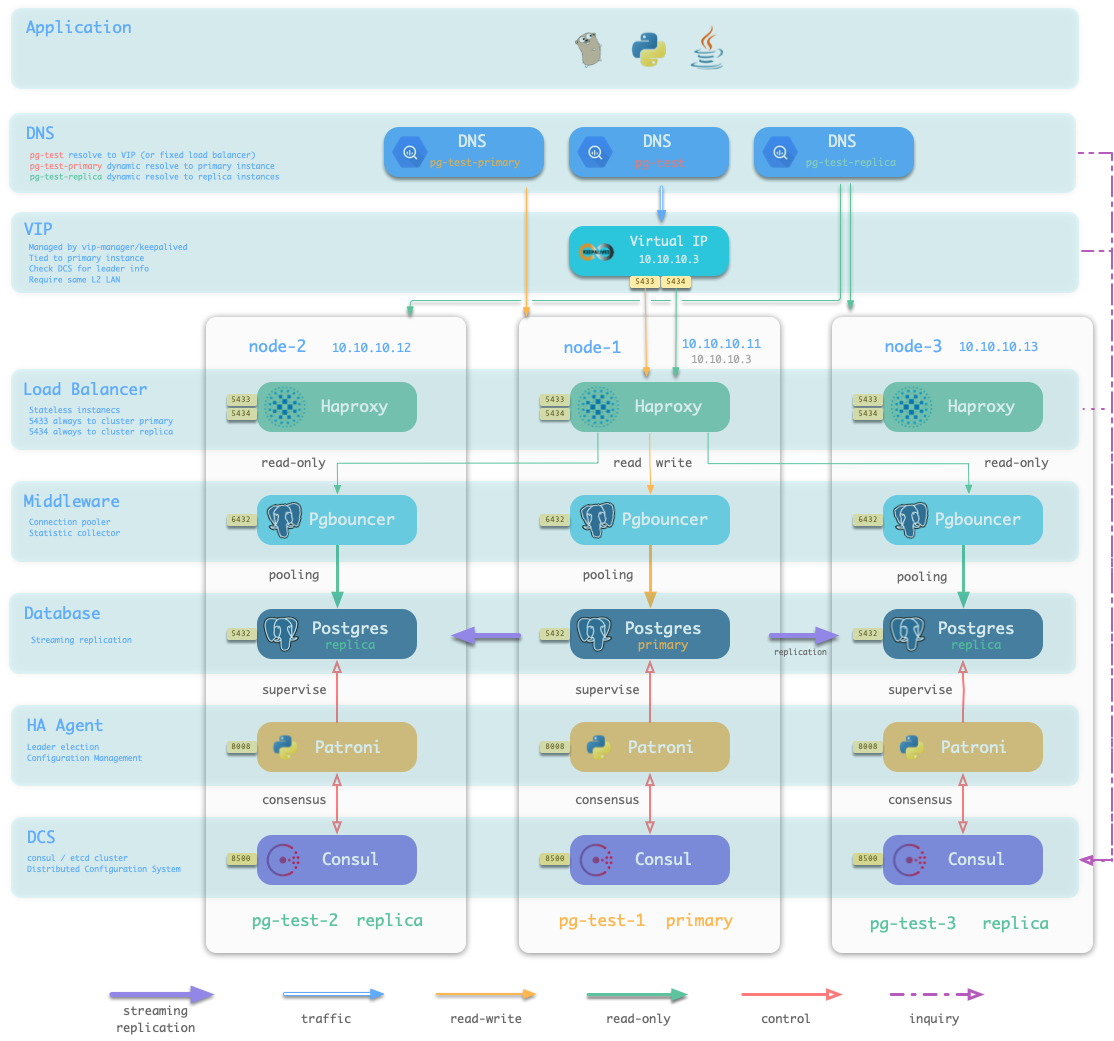

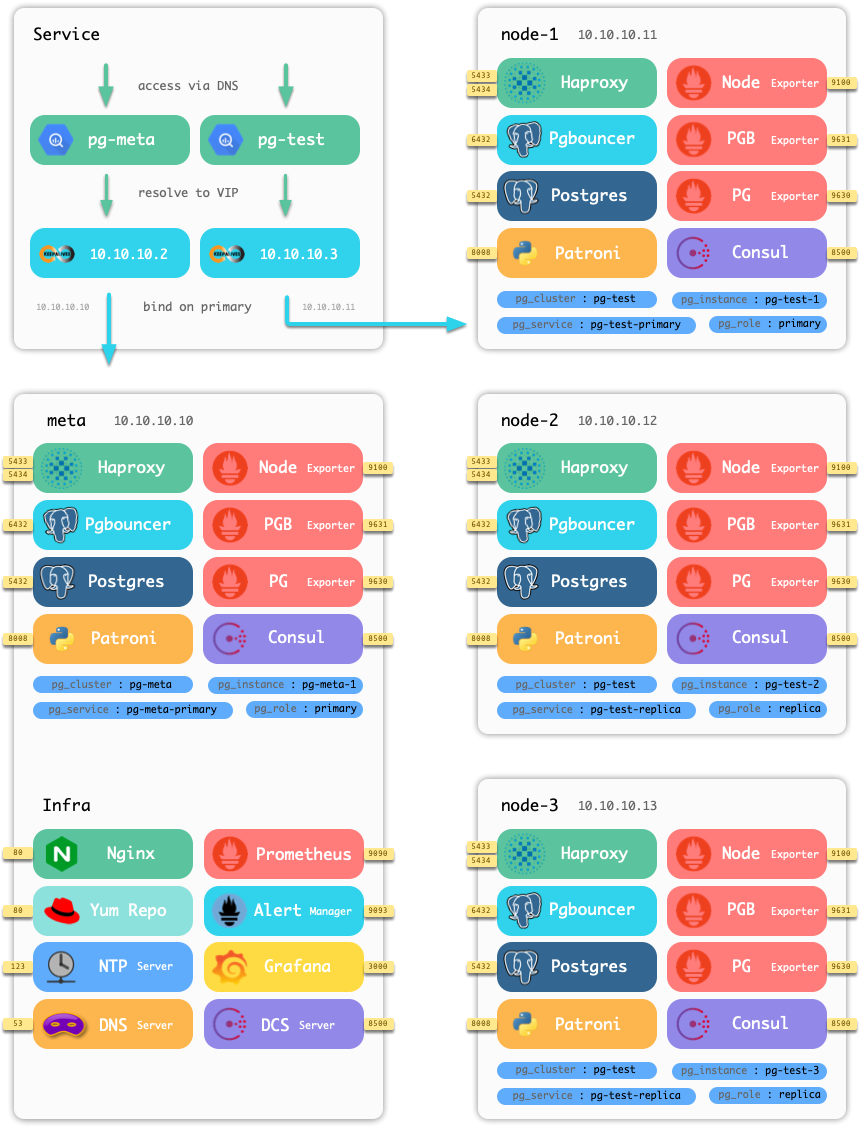

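A monitoring system can only be released and demonstrated if there is something to monitor. Many self-built user databases are in poor shape, so the author simply ships a database provisioning solution as part of the project. It packages production-grade, proven deployment pieces (primary-replica replication, failover, traffic proxying, connection pooling, service discovery, and a basic privilege system) so that it works out of the box.

What the provisioning solution does is this: you fill in a form, and the system creates the corresponding database clusters from its contents. Database management becomes foolproof.

Pigsty builds on open source and gives back to the community. It is free, open-source software under the Apache 2.0 license, with optional commercial support services.

Pigsty's monitoring system is a deep customization of the open-source components Prometheus, Grafana, Alertmanager, and Exporter. It also includes infrastructure such as Nginx, Dnsmasq/CoreDNS, NTP/Chrony, and Consul/Etcd.

Pigsty's provisioning solution is built on Ansible, the popular DevOps tool. The components deployed include Postgres, Pgbouncer, Patroni, HAProxy, and KeepAlived.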

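Next, you can:

This document describes how to bring up the Pigsty demo sandbox locally with Vagrant and Virtualbox.

If vagrant and virtualbox are already installed on your local machine, clone this project, enter its directory, and run the following commands:

sudo make dns # write the static DNS records Pigsty needs into your /etc/hosts (requires sudo; you can skip this step and access services by IP and port instead)

make new # create four Virtualbox virtual machines with Vagrant and bring up the local Pigsty demo sandbox on them

make mon-view # open the Pigsty home page locally; the default username and password are both admin

The host operating system has no special requirements as long as it can install and run Vagrant and Virtualbox. Environments verified by the author: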

If you already have vagrant and virtualbox properly installed, just run the following commands:

# run under pigsty home dir

make up # pull up all vagrant nodes

make ssh # setup vagrant ssh access

make init # init infrastructure and database clusters

sudo make dns # write static DNS record to your host (sudo required)

make mon-view # monitoring system home page (default: admin:admin)

Verified version: MacOS 10.15, Vagrant 2.2.10, Virtualbox 6.1.14, CentOS 7.8

System Requirement

Minimal setup

… pg-meta

Standard setup (TINY mode, vagrant demo)

… pg-meta and 3-instance database cluster pg-test

Production setup (OLTP/OLAP/CRIT mode)

Verified environment: Dell R740 / 64 Core / 400GB Mem / 3TB PCI-E SSD x 200

If you wish to run Pigsty on virtual machines on your laptop, consider using vagrant and virtualbox, which let you create and destroy virtual machines easily. Check Vagrant Provision for more information. Other virtual machine solutions such as vmware also work.

Prepare nodes, bare metal or virtual machine.

Currently only CentOS 7 is supported and fully tested.

You will need one node for a minimal setup, and four nodes for a complete demonstration.

Pick one node as the meta node, which is the controller of the entire system.

The meta node runs essential services such as Nginx, Yum Repo, DNS Server, NTP Server, Consul Server, Prometheus, Alertmanager, Grafana, and other components. It is recommended to have 1 meta node in a sandbox or dev environment, and 3 to 5 meta nodes in a production environment.

Create an admin user with passwordless sudo privileges on these nodes.

echo "<username> ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers.d/<username>

Set up passwordless SSH access for the admin user from the meta node.

ssh-copy-id <address>

You can execute playbooks directly on your host machine instead of the meta node when running Pigsty inside virtual machines. This is convenient for development and testing.

Install Ansible on the meta node (or on your host machine if you prefer running playbooks there).

yum install ansible # centos

brew install ansible # macos

If your meta node does not have Internet access, you can perform an Offline Installation, or find your own way to install ansible there.

Clone this repo to the meta node.

git clone https://github.com/vonng/pigsty && cd pigsty

[Optional]: download the pre-packaged offline installation resource tarball to ${PIGSTY_HOME}/files/pkg.tgz

If you happen to run exactly the same OS (e.g. CentOS 7.8), you can download the package and put it there, so that first-time provisioning is extremely fast.

Configuration is essential to Pigsty.

dev.yml is the configuration file for the vagrant sandbox environment. conf/all.yml is the default configuration file path, which is a soft link to conf/dev.yml by default.

You can leave most parameters intact. Only a small portion need adjustment, such as the cluster inventory definition. A typical cluster definition requires only 3 variables to work: pg_cluster, pg_role, and pg_seq. Check the configuration guide for more detail.

#-----------------------------
# cluster: pg-test
#-----------------------------
pg-test: # define cluster named 'pg-test'
  # - cluster members - #
  hosts:
    10.10.10.11: {pg_seq: 1, pg_role: primary, ansible_host: node-1}
    10.10.10.12: {pg_seq: 2, pg_role: replica, ansible_host: node-2}
    10.10.10.13: {pg_seq: 3, pg_role: replica, ansible_host: node-3}
  # - cluster configs - #
  vars:
    # basic settings
    pg_cluster: pg-test # define actual cluster name
    pg_version: 13 # define installed pgsql version
    node_tune: tiny # tune node into oltp|olap|crit|tiny mode
    pg_conf: tiny.yml # tune pgsql into oltp/olap/crit/tiny mode
    pg_users:
      - username: test
        password: test
        comment: default test user
        groups: [ dbrole_readwrite ]
    pg_databases: # create a business database 'test'
      - name: test
        extensions: [{name: postgis}] # create extra extension postgis
        parameters: # overwrite database meta's default search_path
          search_path: public,monitor
    pg_default_database: test # default database will be used as primary monitor target
    # proxy settings
    vip_enabled: true # enable/disable vip (require members in same LAN)
    vip_address: 10.10.10.3 # virtual ip address
    vip_cidrmask: 8 # cidr network mask length
    vip_interface: eth1 # interface to add virtual ip
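To make the role of the three identity variables concrete, here is a minimal Python sketch. It is not part of Pigsty: the helper `instance_names` is hypothetical, and it assumes that `pg_seq` must be unique within a cluster and that instances are named `<pg_cluster>-<pg_seq>`.

```python
# Hypothetical helper, not part of Pigsty. Assumes pg_seq is unique per cluster
# and that an instance is identified as "<pg_cluster>-<pg_seq>".
def instance_names(cluster_vars, hosts):
    """Return {ip: instance_name}; raise ValueError on missing or duplicate identity."""
    cluster = cluster_vars.get("pg_cluster")
    if not cluster:
        raise ValueError("pg_cluster is required")
    names = {}
    seen = set()
    for ip, host_vars in hosts.items():
        for key in ("pg_role", "pg_seq"):
            if key not in host_vars:
                raise ValueError(f"{ip}: missing {key}")
        seq = host_vars["pg_seq"]
        if seq in seen:
            raise ValueError(f"{ip}: duplicate pg_seq {seq}")
        seen.add(seq)
        names[ip] = f"{cluster}-{seq}"
    return names


hosts = {
    "10.10.10.11": {"pg_seq": 1, "pg_role": "primary"},
    "10.10.10.12": {"pg_seq": 2, "pg_role": "replica"},
    "10.10.10.13": {"pg_seq": 3, "pg_role": "replica"},
}
names = instance_names({"pg_cluster": "pg-test"}, hosts)
print(names)
```

A check like this could run before the playbooks, so that a missing or repeated identity variable is caught before any node is touched.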

It is straightforward to materialize this configuration for the infrastructure and database clusters:

./infra.yml # init infrastructure according to config

./initdb.yml # init database cluster according to config

It may take around 5 to 30 minutes to download all necessary rpm packages from the Internet, depending on your network conditions. (This applies only to the first run; you can cache the downloaded packages by running make cache.)

(Consider using another upstream yum repo if the default is not usable; check conf/all.yml, all.vars.repo_upstreams.)

Start exploring Pigsty.

Main Page: http://pigsty or http://<meta-ip-address>

Grafana: http://g.pigsty or http://<meta-ip-address>:3000 (default username:password is admin:admin)

Consul: http://c.pigsty or http://<meta-ip-address>:8500 (consul only listens on localhost)

Prometheus: http://p.pigsty or http://<meta-ip-address>:9090

AlertManager: http://a.pigsty or http://<meta-ip-address>:9093

You may need to write DNS records to your host before accessing Pigsty via domain names.

sudo make dns # write local DNS record to your /etc/hosts, sudo required
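As an illustration of what such static DNS records amount to, the following Python sketch renders /etc/hosts style lines for the domain names listed above. The function `hosts_lines` and the address 10.10.10.10 are assumptions for illustration only; the records that `make dns` actually writes may differ.

```python
# Illustrative only: the meta node address below is a placeholder, and the
# real output of `make dns` may differ from these lines.
PIGSTY_DOMAINS = ("pigsty", "g.pigsty", "c.pigsty", "p.pigsty", "a.pigsty")


def hosts_lines(meta_ip, domains=PIGSTY_DOMAINS):
    """Render one /etc/hosts style line per domain, all pointing at the meta node."""
    return [f"{meta_ip} {name}" for name in domains]


lines = hosts_lines("10.10.10.10")
print("\n".join(lines))
```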

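This section describes how to quickly bring up a Pigsty sandbox environment. See Quick Start for more information.

Prepare machines

Use pre-allocated machines, or generate demo virtual machines locally from the predefined sandbox Vagrantfile, and pick one as the meta node.

Set up passwordless SSH access from the meta node to the other machines, and confirm that the SSH user has passwordless sudo privileges on them.

If vagrant and virtualbox are installed on your machine, you can run make up in the project root directory to bring up a four-node virtual machine environment. See Vagrant Provision for details.

make up

Prepare the project

Install Ansible on the meta node and clone this project. If you use a local virtual machine environment, you can also install ansible on the host machine and run the commands there.

git clone https://github.com/vonng/pigsty && cd pigsty

If the target environment has no Internet access, or the connection is slow, consider downloading the pre-packaged offline installation package, or building one on another machine with the same OS that has Internet access or a proxy. See the Offline Installation tutorial for details.

Modify the configuration

Modify the configuration file as needed. It uses YAML format with Ansible inventory semantics. See the Configuration tutorial for the items and format.

vi conf/all.yml # default configuration file path

Initialize infrastructure

Run this playbook to instantiate the infrastructure definition. See Infrastructure Provision for details.

./infra.yml # run this playbook to instantiate the infrastructure definition

Initialize database clusters

Run this playbook to bring up all database clusters. See Database Cluster Provision for details.

./initdb.yml # run this playbook to instantiate all database cluster definitions

Start exploring

You can access the Pigsty home page through the domain name defined in the nginx_upstream parameter (http://pigsty by default in the sandbox).

The default domain name of the monitoring system is http://g.pigsty. The default username and password are both admin.

The monitoring system can also be reached directly on port 3000 of the meta node. To access it by domain name from your local machine, run sudo make dns to write the required DNS records to the host.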

Take the standard demo cluster as an example. It consists of four nodes: meta, node-1, node-2, and node-3.

… services such as postgres, pgbouncer, patroni, haproxy, node_exporter, pg_exporter, pgbouncer_exporter, and consul

… pg-meta and pg-test. pg-test has one primary and two replicas; pg-meta has a single primary.

The meta node runs the infrastructure services: nginx, repo, ntp, dns, consul server/etcd, prometheus, grafana, alertmanager, and others.

Pigsty provides multiple ways to connect to the database:

… pg-test, primary.pg-test, replica.pg-test)

And multiple ways to route (read-only/read-write) traffic:

… pg-test, pg-test-primary, pg-test-replica)

… target_session_attrs=read-write)

There are lots of configurable parameters. Refer to the Proxy Configuration Guide for more detail.

The Database Access Guide provides information about how to connect to the database.
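One of the routing options mentioned above relies on the libpq `target_session_attrs` parameter, which lets a client list several hosts and connect only to one that accepts writes. The Python sketch below only builds such a connection URL. The helper `multi_host_url` is hypothetical, and the port 5432 and the `test` user and database are assumptions taken from the sample configuration rather than a documented Pigsty endpoint.

```python
from urllib.parse import quote


def multi_host_url(hosts, port, user, password, dbname, attrs="read-write"):
    """Build a libpq multi-host URL; the client tries hosts in order and keeps
    the first one whose session matches target_session_attrs."""
    hostlist = ",".join(f"{h}:{port}" for h in hosts)
    return (
        f"postgres://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{hostlist}/{dbname}?target_session_attrs={attrs}"
    )


url = multi_host_url(
    ["10.10.10.11", "10.10.10.12", "10.10.10.13"],  # sandbox node addresses
    5432,                                           # assumed Postgres port
    "test", "test", "test",
)
print(url)
```

With this approach the client itself finds the primary, so no proxy or DNS change is needed after a failover, at the cost of every client knowing the member list.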

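The first question is observability. So what is observability? For a question like this, listing definitions is dull, so let's take Postgres itself as the example.

This figure shows the observability of Postgres itself. PostgreSQL provides rich observation interfaces, including system catalogs, statistics views, and auxiliary functions. (Brief introduction.) All of these are things we can observe. I am honored to say that everything listed here is collected by Pigsty and, through careful design, the obscure metric data is turned into insight that people can readily understand.

Let's explore observability in depth with a classic example: pg_stat_statements. It is a statistics extension officially provided by Postgres that exposes detailed statistics for every class of query executed in the database, corresponding to Query Planning and Execution in the figure.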
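The counters in pg_stat_statements are cumulative, so useful figures such as per-query QPS and mean latency come from the difference between two snapshots. The Python sketch below shows that arithmetic on plain dictionaries. The function `query_rates` and the sample numbers are illustrative assumptions, and the tuple layout (calls, total time in milliseconds) is a simplification of the view's columns.

```python
def query_rates(prev, curr, interval_s):
    """prev/curr map queryid -> (calls, total_time_ms), both cumulative.
    Returns per-query QPS and mean latency over the interval."""
    out = {}
    for qid, (calls, total_ms) in curr.items():
        p_calls, p_ms = prev.get(qid, (0, 0.0))
        d_calls = calls - p_calls
        d_ms = total_ms - p_ms
        if d_calls <= 0:
            continue  # no new calls, or the counters were reset
        out[qid] = {"qps": d_calls / interval_s, "mean_ms": d_ms / d_calls}
    return out


prev = {1: (100, 500.0)}
curr = {1: (160, 800.0), 2: (30, 60.0)}
rates = query_rates(prev, curr, interval_s=60)
print(rates)
```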

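When we talk about high availability, what are we really talking about? Several nines?

In the end, for a traditional single-leader database the core problem is failover: the handover of leadership.

Cluster overview

postgres://dbuser_test:dbuser_test@testdb:5555/testdb

postgres://dbuser_test:dbuser_test@testdb:5556/testdb

Two core scenarios of HA:

Four core problems of failover: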

https://github.com/Vonng/pigsty

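Key question: how is the SLA of the DCS guaranteed?

==In automatic failover mode, if the DCS goes down, the current primary demotes itself to a replica after retry_timeout, leaving every cluster unwritable.==

As distributed consensus stores, Consul and Etcd are quite robust, but the SLA of the DCS must still be higher than the SLA of the database.

Solution: configure a sufficiently large retry_timeout, and address the problem operationally in the following ways.

… resolve the DCS Service Down problem within retry_timeout.

… turn off automatic failover for the clusters (enable maintenance mode) within retry_timeout.

Possible optimization: add peer-to-peer detection that bypasses the DCS. If the primary determines that its own partition is still the majority partition, it takes no action.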
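The behaviour described above can be summarised as a small decision function. This is a simplified model written for illustration, not Patroni's actual logic: the function `primary_action` and its return values are hypothetical.

```python
def primary_action(dcs_down_seconds, retry_timeout, maintenance_mode):
    """Simplified model of what a primary does while the DCS is unreachable."""
    if maintenance_mode:
        return "keep-primary"   # automatic failover is switched off
    if dcs_down_seconds >= retry_timeout:
        return "demote"         # cannot confirm leadership any longer
    return "retry"              # still inside the retry window


for down in (5, 30, 120):
    print(down, primary_action(down, retry_timeout=30, maintenance_mode=False))
```

The model makes the operational point visible: operators have exactly `retry_timeout` seconds either to restore the DCS or to enable maintenance mode before writes stop.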

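Key question: HA strategy, RPO first or RTO first?

Which takes priority, availability or consistency? For example, ordinary databases put RTO first, while financial and payment databases put RPO first.

Ordinary databases may lose a very small amount of data in an emergency failover (the threshold is configurable, for example the most recent 1M of writes).

Databases that handle money must not lose data, so failover requires more numerous and more careful checks, or manual intervention.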
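The trade-off can be expressed as a lag threshold on promotion candidates. The Python sketch below is an illustration under assumptions: the function `eligible_candidates`, the replica names, and the lag figures are made up, and the 1 MiB threshold mirrors the example in the text. Patroni offers a comparable setting, `maximum_lag_on_failover`.

```python
def eligible_candidates(replica_lag_bytes, max_lag_bytes):
    """Return replicas whose replication lag is within the allowed data loss."""
    return sorted(
        name for name, lag in replica_lag_bytes.items() if lag <= max_lag_bytes
    )


lag = {"pg-test-2": 0, "pg-test-3": 512 * 1024}  # hypothetical lag in bytes

rto_first = eligible_candidates(lag, max_lag_bytes=1024 * 1024)  # tolerate ~1 MiB loss
rpo_first = eligible_candidates(lag, max_lag_bytes=0)            # tolerate no loss
print(rto_first, rpo_first)
```

An RPO-first policy can leave no eligible candidate at all, which is precisely when manual intervention is needed.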

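Key question: the fencing mechanism. Is shutting down a machine allowed?

Under normal conditions, Patroni performs primary fencing first when a leader change happens, by killing the PG processes.

In some extreme cases, such as a paused VM, a software bug, or extremely high load, this may not succeed. The machine then has to be rebooted to settle the matter once and for all. Is that acceptable? How does the system behave in extreme environments?

Key operation: after the election

Remember to save to disk after a new primary is elected. Run a manual checkpoint to be safe.

Key question: how to switch traffic: layer 2, layer 4, or layer 7

Key question: the special case of one primary and one replica

Assume the cluster consists of one primary P and n replicas S, all attached directly to the primary.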

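… point primary_conninfo at C, as cascading replicas; takes effect through a rolling restart.

.primary.primary. … point to C

standby. … remove C (except for a one-primary, one-replica cluster)

… pg_rewind; if it succeeds, continue; if it fails, rebuild the replica directly.

… recovery.conf (12-) | postgresql.auto.conf (12), pointing its primary_conninfo at C

< max(standby_sequence) + 1>.standby.standby. … add P to S to take over read traffic

The core difference in automatic failover is that the primary is unavailable. If the primary is available, the procedure is exactly the same as a planned switchover. Automatic failover adds two problems by comparison, detection and leader election, and the topology adjustment also differs because the primary is unavailable.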
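For the election problem, a common heuristic is to promote the replica that has replayed the most WAL. The source does not state which rule is used, so the Python sketch below is only an assumption: it parses PostgreSQL LSN strings of the form `X/Y` (two hexadecimal halves) and picks the replica with the highest position. The replica names and LSN values are made up.

```python
def lsn_to_int(lsn):
    """Convert a PostgreSQL LSN such as '0/3000148' to an integer byte position."""
    hi, lo = lsn.split("/")
    return (int(hi, 16) << 32) + int(lo, 16)


def pick_candidate(replica_lsn):
    """Return the replica name with the most advanced LSN."""
    return max(replica_lsn, key=lambda name: lsn_to_int(replica_lsn[name]))


replicas = {"pg-test-2": "0/3000060", "pg-test-3": "0/3000148"}
candidate = pick_candidate(replicas)
print(candidate)
```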

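… pg_exporter, or reported to the DCS periodically by an agent.

.primary.primary. … point to C

standby. … remove C (except for a one-primary, one-replica cluster)

… point primary_conninfo at C, as cascading replicas; takes effect through a rolling restart, then catch up with the new primary C.

… pg_rewind; if it succeeds, continue; if it fails, rebuild the replica directly.

… recovery.conf (12-) | postgresql.auto.conf (12), pointing its primary_conninfo at C

< max(standby_sequence) + 1>.standby.standby. … add P to S to take over read traffic

< max(standby_sequence) + 1>.standby. … add a new replica to the cluster.

Pigsty is based on open source projects like prometheus, grafana, and pg_exporter, and follows their best practices.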

Grafana provides the final user interface and turns metrics into charts.

Prometheus scrapes and collects metrics and serves queries.

Exporters (node, postgres, pgbouncer, haproxy) expose server metrics.

Exporter services are registered in consul and discovered by prometheus.
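To show what registering an exporter for discovery can look like, the Python sketch below generates a Consul service definition as JSON. The helper `consul_service`, the ports (9100 and 9630), the tags, and the health check URL are assumptions for illustration, not the definitions Pigsty actually ships.

```python
import json


def consul_service(name, port, cluster, role):
    """Build a Consul service definition with an HTTP health check."""
    return {
        "service": {
            "name": name,
            "port": port,
            "tags": [cluster, role],  # labels a scraper could relabel on
            "check": {"http": f"http://localhost:{port}/", "interval": "15s"},
        }
    }


definitions = [
    consul_service("node_exporter", 9100, "pg-test", "primary"),  # assumed port
    consul_service("pg_exporter", 9630, "pg-test", "primary"),    # assumed port
]
print(json.dumps(definitions[1], indent=2))
```

Prometheus can then use its Consul service discovery to find every registered exporter instead of relying on a static target list.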

Read more about pg_exporter

Available metrics

There are several different levels of monitoring:

… pg-test-tt) that reflects the business and is used as a namespace. It usually consists of multiple database instances, contains multiple nodes, and exposes two typical services: <cluster>-primary (read-write) and <cluster>-replica (read-only).

Basic Facts

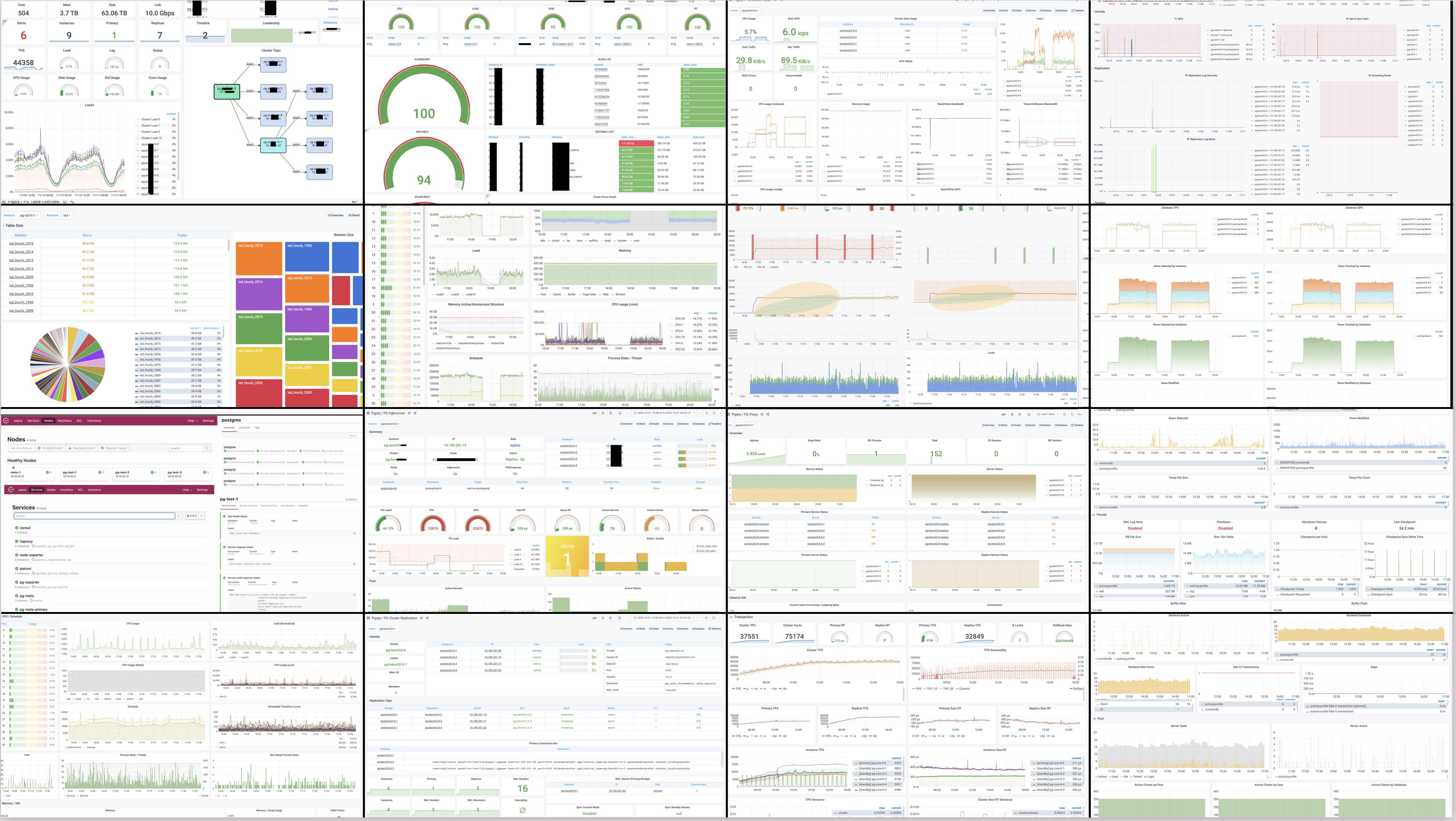

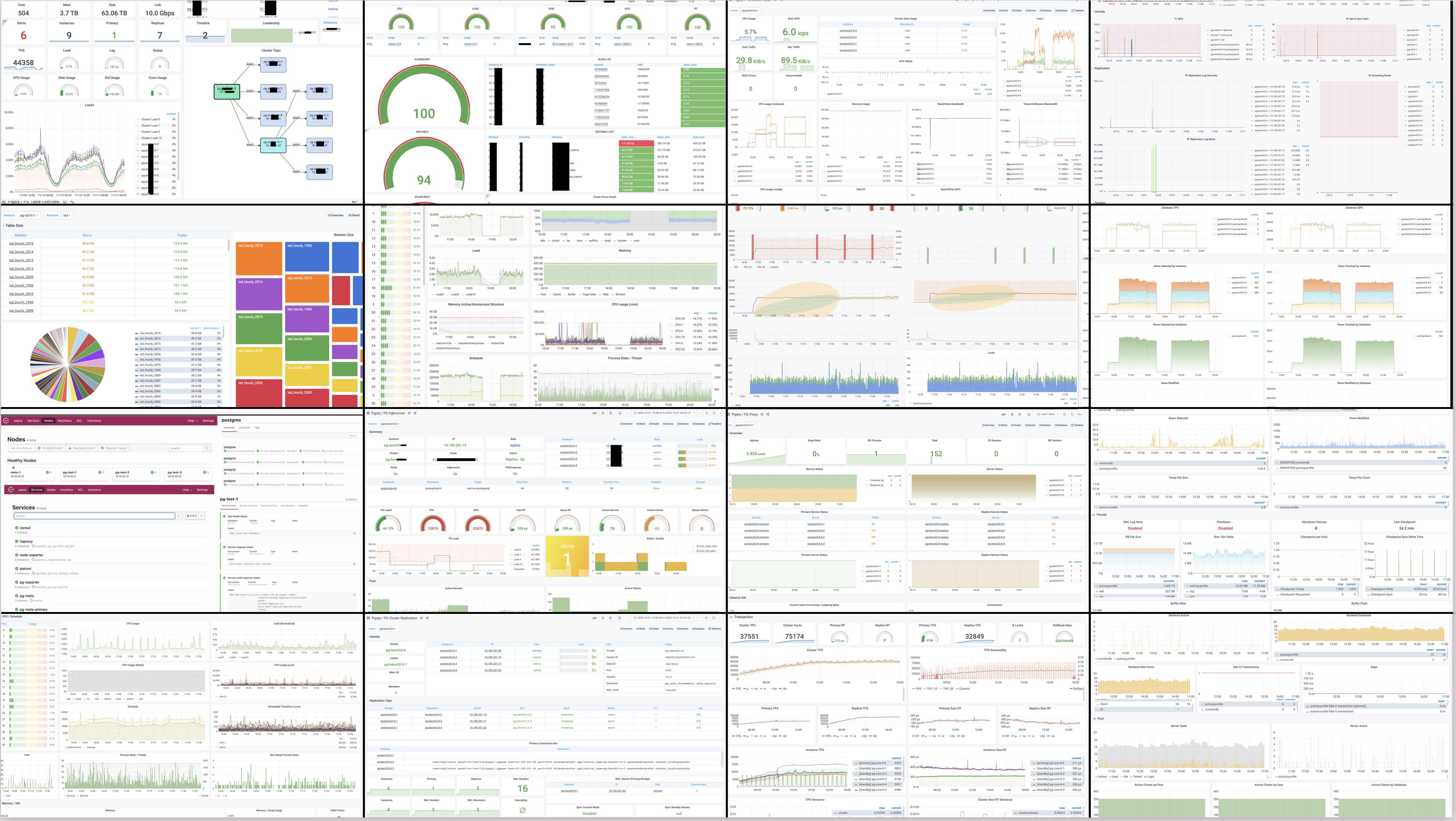

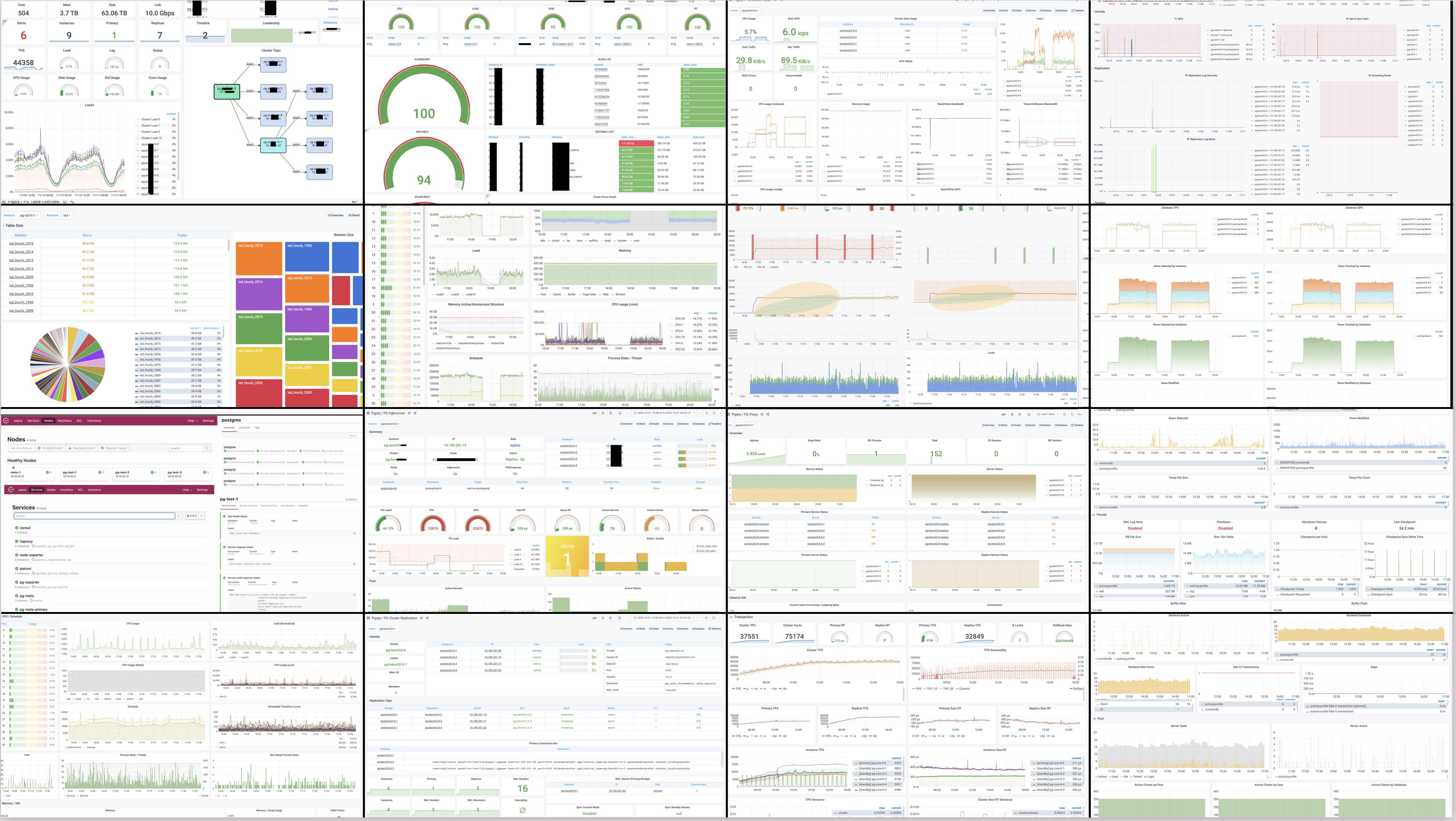

The PG Overview dashboard is the entrance to the entire monitoring system.

Indexing clusters and instances, finding anomalies, and visualizing key metrics.

Other overview level dashboards:

Overview of entire environment

Index page for database cluster resource: services, instances, nodes.

Aggregated metrics on cluster level.

Cluster level dashboards:

Dashboard that focuses on an autonomous database cluster

The PG Service Dashboard focuses on proxies, servers, and traffic routes.

Focusing on DNS, read-write/read-only, traffic routing, proxy & server health, etc…

PG Instance Dashboard provides tons of metrics

Focusing on instance level metrics

There may be multiple databases sharing the same instance or cluster, so the metrics here focus on one specific database rather than the entire instance.

Focusing on database level metrics

The PG Table Overview dashboard focuses on objects within a database, for example tables, indexes, and functions.

Focusing on tables of a specific database

This dashboard focuses on a specific query in a specific database. It provides valuable information on database load.

PG Table Catalog queries the database catalog directly as the monitor user. This is not recommended, but it is sometimes convenient.

View system catalog information of any specific table in database directly

Classical Node Exporter Dashboard

There are tons of metrics available in Pigsty.

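So how many metrics does Pigsty include in total? Here is a pie chart of the share contributed by each metric source. The blue, green, and yellow parts on the right are metrics exposed by the database and database-related components. The red and orange part at the lower left corresponds to machine node metrics, and the purple part at the upper left is load balancer metrics.

Among the database metrics, about 230 raw metrics relate to postgres itself and about 50 to middleware. On top of these raw metrics, Pigsty carefully designs about 350 DB-related derived metrics through hierarchical aggregation and precomputation. For each database cluster, then, there are 621 monitoring metrics for the database and its auxiliary components alone. Machine metrics comprise 281 raw and 83 derived metrics, 364 in total. Adding the 170 load balancer metrics gives nearly 1,200 kinds of metrics.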
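The totals quoted above can be checked with a few lines of Python. The variable names are chosen for illustration; the numbers are the ones given in the text.

```python
# Figures taken from the text; variable names are illustrative.
db_metrics = 621                  # database and its auxiliary components
node_raw, node_derived = 281, 83  # machine metrics
lb_metrics = 170                  # load balancer metrics

node_metrics = node_raw + node_derived
total = db_metrics + node_metrics + lb_metrics
print(node_metrics, total)
```

The exact sum is 1,155, which the text rounds to "nearly 1,200". The 621 figure is an exact count, while 230, 50, and 350 are approximations, so those three do not add up to it exactly.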

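Note the distinction between a metric and a time series. The unit we count here is kinds of metrics, not individual series, because one metric may correspond to multiple time series. For example, if a database has 20 tables, a metric such as pg_table_index_scan corresponds to 20 time series.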
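A short Python sketch makes the metric versus time series distinction concrete: one metric name fans out into one series per distinct label set. The label name `relname` and the table names are assumptions for illustration.

```python
def series_for(metric, tables):
    """One metric name expands into one time series per table label value."""
    return [f'{metric}{{relname="{t}"}}' for t in tables]


tables = [f"table_{i:02d}" for i in range(1, 21)]  # 20 hypothetical tables
series = series_for("pg_table_index_scan", tables)
print(len(series), series[0])
```

If the same metric is collected from several instances, the series count multiplies again by the number of instances, which is why counting kinds of metrics understates the real storage load.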

Metrics are collected from exporters.

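Pigsty's monitoring data comes from four main sources: the database itself, middleware, the operating system, and the load balancer, each exposed through its corresponding exporter. All of these metrics are then processed further, for example by aggregating them at different levels.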

Metrics can be categorized into four major groups: Error, Saturation, Traffic, and Latency.

These are just a small portion of the metrics.

In addition to the metrics above, there are a large number of derived metrics. For example, QPS from pgbouncer has the following derived metrics:

################################################################
#                       QPS (Pgbouncer)                        #
################################################################
# QPS realtime (irate1m)
- record: pg:db:qps_realtime
  expr: irate(pgbouncer_stat_total_query_count{}[1m])
- record: pg:ins:qps_realtime
  expr: sum without(datname) (pg:db:qps_realtime{})
- record: pg:svc:qps_realtime
  expr: sum by(cls, role) (pg:ins:qps_realtime{})
- record: pg:cls:qps_realtime
  expr: sum by(cls) (pg:ins:qps_realtime{})
- record: pg:all:qps_realtime
  expr: sum(pg:cls:qps_realtime{})

# qps (rate1m)
- record: pg:db:qps
  expr: pgbouncer_stat_avg_query_count{datname!="pgbouncer"}
- record: pg:ins:qps
  expr: sum without(datname) (pg:db:qps)
- record: pg:svc:qps
  expr: sum by (cls, role) (pg:ins:qps)
- record: pg:cls:qps
  expr: sum by(cls) (pg:ins:qps)
- record: pg:all:qps
  expr: sum(pg:cls:qps)

# qps avg30m
- record: pg:db:qps_avg30m
  expr: avg_over_time(pg:db:qps[30m])
- record: pg:ins:qps_avg30m
  expr: avg_over_time(pg:ins:qps[30m])
- record: pg:svc:qps_avg30m
  expr: avg_over_time(pg:svc:qps[30m])
- record: pg:cls:qps_avg30m
  expr: avg_over_time(pg:cls:qps[30m])
- record: pg:all:qps_avg30m
  expr: avg_over_time(pg:all:qps[30m])

# qps µ
- record: pg:db:qps_mu
  expr: avg_over_time(pg:db:qps_avg30m[30m])
- record: pg:ins:qps_mu
  expr: avg_over_time(pg:ins:qps_avg30m[30m])
- record: pg:svc:qps_mu
  expr: avg_over_time(pg:svc:qps_avg30m[30m])
- record: pg:cls:qps_mu
  expr: avg_over_time(pg:cls:qps_avg30m[30m])
- record: pg:all:qps_mu
  expr: avg_over_time(pg:all:qps_avg30m[30m])

# qps σ: stddev30m qps
- record: pg:db:qps_sigma
  expr: stddev_over_time(pg:db:qps[30m])
- record: pg:ins:qps_sigma
  expr: stddev_over_time(pg:ins:qps[30m])
- record: pg:svc:qps_sigma
  expr: stddev_over_time(pg:svc:qps[30m])
- record: pg:cls:qps_sigma
  expr: stddev_over_time(pg:cls:qps[30m])
- record: pg:all:qps_sigma
  expr: stddev_over_time(pg:all:qps[30m])
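The rules above roll one database-level metric up through four more levels: instance, service, cluster, and the whole environment. The Python sketch below mimics that hierarchy on an in-memory sample so the label handling is easy to follow. The function `aggregate`, the key layout `(cls, role, ins, datname)`, and the sample values are assumptions; the real aggregation is done by Prometheus.

```python
from collections import defaultdict


def aggregate(db_qps):
    """db_qps maps (cls, role, ins, datname) -> qps at database level."""
    ins, svc, cls = defaultdict(float), defaultdict(float), defaultdict(float)
    for (c, r, i, _datname), value in db_qps.items():
        ins[(c, r, i)] += value   # like: sum without(datname)
    for (c, r, _ins), value in ins.items():
        svc[(c, r)] += value      # like: sum by(cls, role)
        cls[c] += value           # like: sum by(cls)
    return dict(ins), dict(svc), dict(cls), sum(cls.values())


sample = {
    ("pg-test", "primary", "pg-test-1", "test"): 100.0,
    ("pg-test", "primary", "pg-test-1", "postgres"): 20.0,
    ("pg-test", "replica", "pg-test-2", "test"): 50.0,
    ("pg-test", "replica", "pg-test-3", "test"): 30.0,
    ("pg-meta", "primary", "pg-meta-1", "meta"): 10.0,
}
ins, svc, cls, total_qps = aggregate(sample)
print(svc, cls, total_qps)
```

Precomputing each level once keeps dashboard queries cheap: a cluster-level panel reads a single series instead of summing every database series on each refresh.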

There are hundreds of rules defining extra metrics based on primitive metrics.

These basic sample guidelines assume that your Pigsty site is deployed using Netlify and your files are stored in GitHub. You can use the guidelines “as is” or adapt them with your own instructions: for example, other deployment options, information about your doc project’s file structure, project-specific review guidelines, versioning guidelines, or any other information your users might find useful when updating your site. Kubeflow has a great example.

Don’t forget to link to your own doc repo rather than our example site! Also make sure users can find these guidelines from your doc repo README: either add them there and link to them from this page, add them here and link to them from the README, or include them in both locations.

We use Hugo to format and generate our website, the Pigsty theme for styling and site structure, and Netlify to manage the deployment of the site. Hugo is an open-source static site generator that provides us with templates, content organisation in a standard directory structure, and a website generation engine. You write the pages in Markdown (or HTML if you want), and Hugo wraps them up into a website.

All submissions, including submissions by project members, require review. We use GitHub pull requests for this purpose. Consult GitHub Help for more information on using pull requests.

Here’s a quick guide to updating the docs. It assumes you’re familiar with the GitHub workflow and you’re happy to use the automated preview of your doc updates:

If you’ve just spotted something you’d like to change while using the docs, Pigsty has a shortcut for you:

If you want to run your own local Hugo server to preview your changes as you work:

Follow the instructions in Getting started to install Hugo and any other tools you need. You’ll need at least Hugo version 0.45 (we recommend using the most recent available version), and it must be the extended version, which supports SCSS.

Fork the Goldydocs repo into your own project, then create a local copy using git clone. Don’t forget to use --recurse-submodules or you won’t pull down some of the code you need to generate a working site.

git clone --recurse-submodules --depth 1 https://github.com/Vonng/pigsty-example.git

Run hugo server in the site root directory. By default your site will be available at http://localhost:1313/. Now that you’re serving your site locally, Hugo will watch for changes to the content and automatically refresh your site.

Continue with the usual GitHub workflow to edit files, commit them, push the changes up to your fork, and create a pull request.

If you’ve found a problem in the docs, but you’re not sure how to fix it yourself, please create an issue in the Goldydocs repo. You can also create an issue about a specific page by clicking the Create Issue button in the top right hand corner of the page.

These basic sample guidelines assume that your Pigsty site is deployed using Netlify and your files are stored in GitHub. You can use the guidelines “as is” or adapt them with your own instructions: for example, other deployment options, information about your doc project’s file structure, project-specific review guidelines, versioning guidelines, or any other information your users might find useful when updating your site. Kubeflow has a great example.

Don’t forget to link to your own doc repo rather than our example site! Also make sure users can find these guidelines from your doc repo README: either add them there and link to them from this page, add them here and link to them from the README, or include them in both locations.

We use Hugo to format and generate our website, the Pigsty theme for styling and site structure, and Netlify to manage the deployment of the site. Hugo is an open-source static site generator that provides us with templates, content organisation in a standard directory structure, and a website generation engine. You write the pages in Markdown (or HTML if you want), and Hugo wraps them up into a website.

All submissions, including submissions by project members, require review. We use GitHub pull requests for this purpose. Consult GitHub Help for more information on using pull requests.

Here’s a quick guide to updating the docs. It assumes you’re familiar with the GitHub workflow and you’re happy to use the automated preview of your doc updates:

If you’ve just spotted something you’d like to change while using the docs, Pigsty has a shortcut for you:

If you want to run your own local Hugo server to preview your changes as you work:

Follow the instructions in Getting started to install Hugo and any other tools you need. You’ll need at least Hugo version 0.45 (we recommend using the most recent available version), and it must be the extended version, which supports SCSS.

Fork the Goldydocs repo repo into your own project, then create a local copy using git clone. Don’t forget to use --recurse-submodules or you won’t pull down some of the code you need to generate a working site.

git clone --recurse-submodules --depth 1 https://github.com/Vonng/pigsty-example.git

Run hugo server in the site root directory. By default your site will be available at http://localhost:1313/. Now that you’re serving your site locally, Hugo will watch for changes to the content and automatically refresh your site.

Continue with the usual GitHub workflow to edit files, commit them, push the changes up to your fork, and create a pull request.

If you’ve found a problem in the docs, but you’re not sure how to fix it yourself, please create an issue in the Goldydocs repo. You can also create an issue about a specific page by clicking the Create Issue button in the top right hand corner of the page.

These basic sample guidelines assume that your Pigsty site is deployed using Netlify and your files are stored in GitHub. You can use the guidelines “as is” or adapt them with your own instructions: for example, other deployment options, information about your doc project’s file structure, project-specific review guidelines, versioning guidelines, or any other information your users might find useful when updating your site. Kubeflow has a great example.

Don’t forget to link to your own doc repo rather than our example site! Also make sure users can find these guidelines from your doc repo README: either add them there and link to them from this page, add them here and link to them from the README, or include them in both locations.

We use Hugo to format and generate our website, the Pigsty theme for styling and site structure, and Netlify to manage the deployment of the site. Hugo is an open-source static site generator that provides us with templates, content organisation in a standard directory structure, and a website generation engine. You write the pages in Markdown (or HTML if you want), and Hugo wraps them up into a website.

All submissions, including submissions by project members, require review. We use GitHub pull requests for this purpose. Consult GitHub Help for more information on using pull requests.

Here’s a quick guide to updating the docs. It assumes you’re familiar with the GitHub workflow and you’re happy to use the automated preview of your doc updates:

If you’ve just spotted something you’d like to change while using the docs, Pigsty has a shortcut for you:

If you want to run your own local Hugo server to preview your changes as you work:

Follow the instructions in Getting started to install Hugo and any other tools you need. You’ll need at least Hugo version 0.45 (we recommend using the most recent available version), and it must be the extended version, which supports SCSS.

Fork the Goldydocs repo repo into your own project, then create a local copy using git clone. Don’t forget to use --recurse-submodules or you won’t pull down some of the code you need to generate a working site.

git clone --recurse-submodules --depth 1 https://github.com/Vonng/pigsty-example.git

Run hugo server in the site root directory. By default your site will be available at http://localhost:1313/. Now that you’re serving your site locally, Hugo will watch for changes to the content and automatically refresh your site.

Continue with the usual GitHub workflow to edit files, commit them, push the changes up to your fork, and create a pull request.

If you’ve found a problem in the docs, but you’re not sure how to fix it yourself, please create an issue in the Goldydocs repo. You can also create an issue about a specific page by clicking the Create Issue button in the top right hand corner of the page.

These basic sample guidelines assume that your Pigsty site is deployed using Netlify and your files are stored in GitHub. You can use the guidelines “as is” or adapt them with your own instructions: for example, other deployment options, information about your doc project’s file structure, project-specific review guidelines, versioning guidelines, or any other information your users might find useful when updating your site. Kubeflow has a great example.

Don’t forget to link to your own doc repo rather than our example site! Also make sure users can find these guidelines from your doc repo README: either add them there and link to them from this page, add them here and link to them from the README, or include them in both locations.

We use Hugo to format and generate our website, the Pigsty theme for styling and site structure, and Netlify to manage the deployment of the site. Hugo is an open-source static site generator that provides us with templates, content organisation in a standard directory structure, and a website generation engine. You write the pages in Markdown (or HTML if you want), and Hugo wraps them up into a website.

All submissions, including submissions by project members, require review. We use GitHub pull requests for this purpose. Consult GitHub Help for more information on using pull requests.

Here’s a quick guide to updating the docs. It assumes you’re familiar with the GitHub workflow and you’re happy to use the automated preview of your doc updates:

If you’ve just spotted something you’d like to change while using the docs, Pigsty has a shortcut for you:

If you want to run your own local Hugo server to preview your changes as you work:

Follow the instructions in Getting started to install Hugo and any other tools you need. You’ll need at least Hugo version 0.45 (we recommend using the most recent available version), and it must be the extended version, which supports SCSS.

Fork the Goldydocs repo repo into your own project, then create a local copy using git clone. Don’t forget to use --recurse-submodules or you won’t pull down some of the code you need to generate a working site.

git clone --recurse-submodules --depth 1 https://github.com/Vonng/pigsty-example.git

Run hugo server in the site root directory. By default your site will be available at http://localhost:1313/. Now that you’re serving your site locally, Hugo will watch for changes to the content and automatically refresh your site.

Continue with the usual GitHub workflow to edit files, commit them, push the changes up to your fork, and create a pull request.

If you’ve found a problem in the docs, but you’re not sure how to fix it yourself, please create an issue in the Goldydocs repo. You can also create an issue about a specific page by clicking the Create Issue button in the top right hand corner of the page.

These basic sample guidelines assume that your Pigsty site is deployed using Netlify and your files are stored in GitHub. You can use the guidelines “as is” or adapt them with your own instructions: for example, other deployment options, information about your doc project’s file structure, project-specific review guidelines, versioning guidelines, or any other information your users might find useful when updating your site. Kubeflow has a great example.

Don’t forget to link to your own doc repo rather than our example site! Also make sure users can find these guidelines from your doc repo README: either add them there and link to them from this page, add them here and link to them from the README, or include them in both locations.

We use Hugo to format and generate our website, the Pigsty theme for styling and site structure, and Netlify to manage the deployment of the site. Hugo is an open-source static site generator that provides us with templates, content organisation in a standard directory structure, and a website generation engine. You write the pages in Markdown (or HTML if you want), and Hugo wraps them up into a website.

All submissions, including submissions by project members, require review. We use GitHub pull requests for this purpose. Consult GitHub Help for more information on using pull requests.

Here’s a quick guide to updating the docs. It assumes you’re familiar with the GitHub workflow and you’re happy to use the automated preview of your doc updates:

If you’ve just spotted something you’d like to change while using the docs, Pigsty has a shortcut for you:

If you want to run your own local Hugo server to preview your changes as you work:

Follow the instructions in Getting started to install Hugo and any other tools you need. You’ll need at least Hugo version 0.45 (we recommend using the most recent available version), and it must be the extended version, which supports SCSS.

Fork the Goldydocs repo repo into your own project, then create a local copy using git clone. Don’t forget to use --recurse-submodules or you won’t pull down some of the code you need to generate a working site.

git clone --recurse-submodules --depth 1 https://github.com/Vonng/pigsty-example.git

Run hugo server in the site root directory. By default your site will be available at http://localhost:1313/. Now that you’re serving your site locally, Hugo will watch for changes to the content and automatically refresh your site.

Continue with the usual GitHub workflow to edit files, commit them, push the changes up to your fork, and create a pull request.

If you’ve found a problem in the docs, but you’re not sure how to fix it yourself, please create an issue in the Goldydocs repo. You can also create an issue about a specific page by clicking the Create Issue button in the top right hand corner of the page.

These basic sample guidelines assume that your Pigsty site is deployed using Netlify and your files are stored in GitHub. You can use the guidelines “as is” or adapt them with your own instructions: for example, other deployment options, information about your doc project’s file structure, project-specific review guidelines, versioning guidelines, or any other information your users might find useful when updating your site. Kubeflow has a great example.

Don’t forget to link to your own doc repo rather than our example site! Also make sure users can find these guidelines from your doc repo README: either add them there and link to them from this page, add them here and link to them from the README, or include them in both locations.

We use Hugo to format and generate our website, the Pigsty theme for styling and site structure, and Netlify to manage the deployment of the site. Hugo is an open-source static site generator that provides us with templates, content organisation in a standard directory structure, and a website generation engine. You write the pages in Markdown (or HTML if you want), and Hugo wraps them up into a website.

All submissions, including submissions by project members, require review. We use GitHub pull requests for this purpose. Consult GitHub Help for more information on using pull requests.

Here’s a quick guide to updating the docs. It assumes you’re familiar with the GitHub workflow and you’re happy to use the automated preview of your doc updates:

If you’ve just spotted something you’d like to change while using the docs, Pigsty has a shortcut for you:

If you want to run your own local Hugo server to preview your changes as you work:

Follow the instructions in Getting started to install Hugo and any other tools you need. You’ll need at least Hugo version 0.45 (we recommend using the most recent available version), and it must be the extended version, which supports SCSS.

Fork the Goldydocs repo repo into your own project, then create a local copy using git clone. Don’t forget to use --recurse-submodules or you won’t pull down some of the code you need to generate a working site.

git clone --recurse-submodules --depth 1 https://github.com/Vonng/pigsty-example.git

Run hugo server in the site root directory. By default your site will be available at http://localhost:1313/. Now that you’re serving your site locally, Hugo will watch for changes to the content and automatically refresh your site.

Continue with the usual GitHub workflow to edit files, commit them, push the changes up to your fork, and create a pull request.

If you’ve found a problem in the docs, but you’re not sure how to fix it yourself, please create an issue in the Goldydocs repo. You can also create an issue about a specific page by clicking the Create Issue button in the top right hand corner of the page.


pigsty protocol:

you can customize :

If you wish to run Pigsty on your laptop, consider using Vagrant and VirtualBox as the VM provisioner.

brew install virtualbox vagrant ansible # macOS; other platforms may need a different install method

The Vagrantfile provisions 4 nodes (via VirtualBox) for this project.

make up # pull up vm nodes. alternative: cd vagrant && vagrant up

make ssh # cd vagrant && vagrant ssh-config > ~/.ssh/pigsty_config

make # launch cluster

make new # create a new pigsty cluster

make dns # write pigsty dns record to your /etc/hosts (sudo required)

make ssh # write ssh config to your ~/.ssh/config

make clean # delete current cluster

make cache # copy local yum repo packages to your pigsty/pkg

The Vagrant provisioning scripts were tested on macOS 10.15 Catalina.

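If you want to run the Pigsty demo in a local environment, consider using Vagrant and VirtualBox to initialize local virtual machines.

Install Vagrant, VirtualBox, and (optionally) Ansible on the host machine.

Installation differs by platform, so follow the official documentation of each tool. On macOS, for example, Homebrew can install all of them in one step:

brew install virtualbox vagrant ansible # macOS command line

Run make up, and the system will bring up four virtual machines as defined in the Vagrantfile.

make up # bring up all nodes; you can also run vagrant up inside the vagrant directory

make ssh # equivalent to: cd vagrant && vagrant ssh-config > ~/.ssh/pigsty_config

make # launch the cluster

make new # destroy the current cluster and create a new one

make dns # write Pigsty DNS records to the local /etc/hosts (sudo required)

make ssh # write the virtual machines' SSH config to ~/.ssh/config

make clean # destroy the existing local cluster

make cache # build the offline installation package and copy it to the host, which speeds up later cluster creation

make upload # upload the offline cache package pkg.tgz and extract it to the default directory /www/pigsty

The bundled Vagrantfile was tested on macOS 10.15.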

Pigsty can be configured via 200+ parameters, which define the infrastructure and all database clusters.

- Database clusters are defined under all.children.<cluster_name>, one entry per cluster.
- all.vars defines configuration shared across the entire environment.
- all.children.<cluster>.vars defines cluster-wide configuration.
- Hosts are defined under all.children.<cluster>.hosts, one entry per host. Host variables can be defined there and override group, global, and default values.
- The cluster variable pg_cluster and the host variables pg_role and pg_seq are required for each cluster.
- Each cluster needs a host with pg_role=primary (even if it is a standby cluster leader).

Here is a minimal configuration example that defines a single-node environment and one database cluster, pg-meta:

---
######################################################################
# Minimal Environment Inventory #
######################################################################
all: # top-level namespace, match all hosts

  #==================================================================#
  # Clusters #
  #==================================================================#
  children: # top-level groups, one group per database cluster (and special group 'meta')

    #-----------------------------
    # meta controller
    #-----------------------------
    meta: # special group 'meta' defines the main controller machine
      vars:
        meta_node: true # mark node as meta controller
        ansible_group_priority: 99 # meta group is top priority

      # nodes in meta group (1-3)
      hosts:
        10.10.10.10: # meta node IP ADDRESS
          ansible_host: meta # comment this if not access via ssh alias

    #-----------------------------
    # cluster: pg-meta
    #-----------------------------
    pg-meta:
      # - cluster configs - #
      vars:
        pg_cluster: pg-meta # define actual cluster name
        pg_version: 12 # define installed pgsql version
        pg_default_username: meta # default business username
        pg_default_password: meta # default business password
        pg_default_database: meta # default database name
        vip_enabled: true # enable/disable vip (require members in same LAN)
        vip_address: 10.10.10.2 # virtual ip address
        vip_cidrmask: 8 # cidr network mask length
        vip_interface: eth1 # interface to add virtual ip

  #==================================================================#
  # Globals #
  #==================================================================#
  vars:
    proxy_env: # global proxy env when downloading packages
      no_proxy: "localhost,127.0.0.1,10.0.0.0/8,192.168.0.0/16,*.pigsty,*.aliyun.com"
...
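
To make the nesting concrete, here is a minimal Python sketch that walks an inventory of this shape once it has been loaded into a dictionary (for example by a YAML parser). The function name `list_instances` is hypothetical and not part of Pigsty. The inlined dictionary repeats only a few keys from the example, and the host entry under pg-meta is borrowed from the demo inventory for illustration. Falling back to the group name when pg_cluster is absent is also an assumption of this sketch.

```python
def list_instances(inventory):
    """Yield (cluster, address, pg_role, pg_seq) for every database host."""
    children = inventory.get("all", {}).get("children", {})
    for group, body in children.items():
        if group == "meta":  # special controller group, not a database cluster
            continue
        body = body or {}
        # Assumption: use the group name if pg_cluster is not set explicitly.
        cluster = (body.get("vars") or {}).get("pg_cluster", group)
        for address, host_vars in (body.get("hosts") or {}).items():
            host_vars = host_vars or {}
            yield cluster, address, host_vars.get("pg_role"), host_vars.get("pg_seq")


# Hand-written stand-in for a parsed inventory file (illustrative only).
inventory = {
    "all": {
        "children": {
            "meta": {
                "vars": {"meta_node": True},
                "hosts": {"10.10.10.10": {"ansible_host": "meta"}},
            },
            "pg-meta": {
                "vars": {"pg_cluster": "pg-meta", "pg_version": 12},
                "hosts": {"10.10.10.10": {"pg_role": "primary", "pg_seq": 1}},
            },
        },
        "vars": {},
    }
}

for row in list_instances(inventory):
    print(row)
```

A group without a hosts key, like pg-meta in the minimal example, simply yields nothing.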

The cluster inventory defines the clusters and instances to be managed. The minimal required information includes:

- pg_cluster: the cluster name, following the DNS naming standard ([a-z][a-z0-9-]*)
- pg_seq: an integer that is unique within the cluster
- pg_role: the instance role, either primary or replica

Here is an example of an Ansible cluster inventory definition in INI format (which is more compact but not recommended):

[pg-test]

10.10.10.11 pg_role=primary pg_seq=1

10.10.10.12 pg_role=replica pg_seq=2

10.10.10.13 pg_role=replica pg_seq=3

[pg-test:vars]

pg_cluster = pg-test

pg_version = 12
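
The identity rules listed above can be checked mechanically before a playbook runs. The following is a minimal Python sketch under stated assumptions: `validate_cluster` is a hypothetical helper, not Pigsty's own pg_preflight check, and the "exactly one primary per cluster" rule is this sketch's reading of the configuration notes rather than a confirmed constraint.

```python
import re

CLUSTER_NAME = re.compile(r"[a-z][a-z0-9-]*")  # DNS-style name from the rule above
VALID_ROLES = {"primary", "replica"}


def validate_cluster(pg_cluster, hosts):
    """Return a list of problems found in one cluster definition.

    hosts maps an address to its host variables, e.g.
    {"10.10.10.11": {"pg_role": "primary", "pg_seq": 1}}.
    """
    problems = []
    if not CLUSTER_NAME.fullmatch(pg_cluster or ""):
        problems.append(f"invalid pg_cluster: {pg_cluster!r}")

    seen_seq = {}   # pg_seq -> first address that used it
    primaries = []  # addresses declared as primary
    for addr, hv in hosts.items():
        role = hv.get("pg_role")
        seq = hv.get("pg_seq")
        if role not in VALID_ROLES:
            problems.append(f"{addr}: invalid pg_role {role!r}")
        if role == "primary":
            primaries.append(addr)
        if not isinstance(seq, int) or isinstance(seq, bool):
            problems.append(f"{addr}: pg_seq must be an integer, got {seq!r}")
        elif seq in seen_seq:
            problems.append(f"{addr}: pg_seq {seq} already used by {seen_seq[seq]}")
        else:
            seen_seq[seq] = addr

    # Assumption of this sketch: a cluster declares exactly one primary.
    if len(primaries) != 1:
        problems.append(f"expected exactly one primary, found {len(primaries)}")
    return problems


# The pg-test cluster from the INI example above.
pg_test = {
    "10.10.10.11": {"pg_role": "primary", "pg_seq": 1},
    "10.10.10.12": {"pg_role": "replica", "pg_seq": 2},
    "10.10.10.13": {"pg_role": "replica", "pg_seq": 3},
}
print(validate_cluster("pg-test", pg_test))
```

Returning a list of messages instead of raising on the first error lets a caller report every problem in a cluster definition at once.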

You can override cluster variables in all.children.<cluster>.vars and override instance variables in all.children.<cluster>.hosts.<host>. Here are some variables that can be set at the cluster or instance level. (Note that all variables are merged into the host level before execution.)

#------------------------------------------------------------------------------

# POSTGRES INSTALLATION

#------------------------------------------------------------------------------

# - dbsu - #

pg_dbsu: postgres # os user for database, postgres by default (changing it is not recommended!)

pg_dbsu_uid: 26 # os dbsu uid and gid, 26 for default postgres users and groups

pg_dbsu_sudo: limit # none|limit|all|nopass (Privilege for dbsu, limit is recommended)

pg_dbsu_home: /var/lib/pgsql # home directory of the dbsu

pg_dbsu_ssh_exchange: false # exchange ssh key among same cluster

# - postgres packages - #

pg_version: 12 # default postgresql version

pgdg_repo: false # use official pgdg yum repo (disable if you have local mirror)

pg_add_repo: false # add postgres related repo before install (useful if you want a simple install)

pg_bin_dir: /usr/pgsql/bin # postgres binary dir

pg_packages: [] # packages to be installed

pg_extensions: [] # extensions to be installed

#------------------------------------------------------------------------------

# POSTGRES CLUSTER PROVISION

#------------------------------------------------------------------------------

# - identity - #

pg_cluster: # [REQUIRED] cluster name (validated during pg_preflight)

pg_seq: 0 # [REQUIRED] instance seq (validated during pg_preflight)

pg_role: replica # [REQUIRED] service role (validated during pg_preflight)

pg_hostname: false # overwrite node hostname with pg instance name

pg_nodename: true # overwrite consul nodename with pg instance name

# - retention - #

# pg_exists_action, available options: abort|clean|skip

# - abort: abort entire play's execution (default)

# - clean: remove existing cluster (dangerous)

# - skip: end current play for this host

# pg_exists: false # auxiliary flag variable (DO NOT SET THIS)

pg_exists_action: clean

# - storage - #

pg_data: /pg/data # postgres data directory

pg_fs_main: /export # data disk mount point /pg -> {{ pg_fs_main }}/postgres/{{ pg_instance }}

pg_fs_bkup: /var/backups # backup disk mount point /pg/* -> {{ pg_fs_bkup }}/postgres/{{ pg_instance }}/*

# - connection - #

pg_listen: '0.0.0.0' # postgres listen address, '0.0.0.0' by default (all ipv4 addr)

pg_port: 5432 # postgres port (5432 by default)

# - patroni - #

# patroni_mode, available options: default|pause|remove

# - default: default ha mode

# - pause: into maintenance mode

# - remove: remove patroni after bootstrap

patroni_mode: default # pause|default|remove

pg_namespace: /pg # top level key namespace in dcs

patroni_port: 8008 # default patroni port

patroni_watchdog_mode: automatic # watchdog mode: off|automatic|required

# - template - #

pg_conf: tiny.yml # user provided patroni config template path

pg_init: initdb.sh # user provided post-init script path, default: initdb.sh

# - authentication - #

pg_hba_common: [] # hba entries for all instances

pg_hba_primary: [] # hba entries for primary instance

pg_hba_replica: [] # hba entries for replica instances

pg_hba_pgbouncer: [] # hba entries for pgbouncer

# - credential - #

pg_dbsu_password: '' # dbsu password (leaving blank will disable sa password login)

pg_replication_username: replicator # replication user

pg_replication_password: replicator # replication password

pg_monitor_username: dbuser_monitor # monitor user

pg_monitor_password: dbuser_monitor # monitor password

# - default - #

pg_default_username: postgres # non 'postgres' will create a default admin user (not superuser)

pg_default_password: postgres # dbsu password, omit for 'postgres'

pg_default_database: postgres # non 'postgres' will create a default database

pg_default_schema: public # default schema, created under the default database and used as the first element of search_path

pg_default_extensions: "tablefunc,postgres_fdw,file_fdw,btree_gist,btree_gin,pg_trgm"

# - pgbouncer - #

pgbouncer_port: 6432 # default pgbouncer port

pgbouncer_poolmode: transaction # default pooling mode: transaction pooling

pgbouncer_max_db_conn: 100 # important! do not set this larger than postgres max conn or conn limit

#------------------------------------------------------------------------------

# MONITOR PROVISION

#------------------------------------------------------------------------------

# - monitor options -

node_exporter_port: 9100 # default port for node exporter

pg_exporter_port: 9630 # default port for pg exporter

pgbouncer_exporter_port: 9631 # default port for pgbouncer exporter

exporter_metrics_path: /metrics # default metric path for pg related exporter

#------------------------------------------------------------------------------

# PROXY PROVISION

#------------------------------------------------------------------------------

# - vip - #

vip_enabled: true # level2 vip requires primary/standby under same switch

vip_address: 127.0.0.1 # virtual ip address ip/cidr

vip_cidrmask: 32 # virtual ip address cidr mask

vip_interface: eth0 # virtual ip network interface

# - haproxy - #

haproxy_enabled: true # enable haproxy on every cluster member

haproxy_policy: leastconn # roundrobin, leastconn

haproxy_admin_username: admin # default haproxy admin username

haproxy_admin_password: admin # default haproxy admin password

haproxy_client_timeout: 3h # client side connection timeout

haproxy_server_timeout: 3h # server side connection timeout

haproxy_exporter_port: 9101 # default admin/exporter port

haproxy_check_port: 8008 # default health check port (patroni 8008 by default)

haproxy_primary_port: 5433 # default primary port 5433

haproxy_replica_port: 5434 # default replica port 5434

haproxy_backend_port: 6432 # default target port: pgbouncer:6432 postgres:5432
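
As a rough illustration of how the proxy ports above fit together, the Python sketch below builds client connection URLs for read-write and read-only traffic. It assumes that clients reach the primary through haproxy_primary_port (5433) and replicas through haproxy_replica_port (5434), as the port comments suggest. The helper `service_urls` is hypothetical, and the address and credentials are the demo values of the pg-test cluster.

```python
from urllib.parse import quote


def service_urls(host, user, password, database,
                 primary_port=5433, replica_port=5434):
    """Build connection URLs for the primary and replica proxy ports.

    Defaults mirror haproxy_primary_port and haproxy_replica_port above.
    """
    # Percent-encode credentials so special characters stay valid in a URL.
    cred = f"{quote(user, safe='')}:{quote(password, safe='')}"
    base = f"postgres://{cred}@{host}"
    return {
        "primary": f"{base}:{primary_port}/{database}",
        "replica": f"{base}:{replica_port}/{database}",
    }


# Demo values: VIP 10.10.10.3 with user, password, and database all "test".
urls = service_urls("10.10.10.3", "test", "test", "test")
print(urls["primary"])  # postgres://test:test@10.10.10.3:5433/test
print(urls["replica"])  # postgres://test:test@10.10.10.3:5434/test
```

With the defaults shown, both ports forward to haproxy_backend_port (pgbouncer on 6432), so clients never need to know which host currently holds the primary role.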

Global variables are defined in all.vars (or in any other way that follows Ansible conventions).

Global variables aim to unify an environment. Use them to define the infrastructure of each environment: for example, DCS, DNS, and NTP addresses, packages to be installed, and a unified admin user.

Global variables are merged into host variables before execution, following Ansible variable precedence.
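
The layering can be pictured as a series of dictionary updates, with later layers winning. This is a simplified Python sketch and not Ansible's actual resolution algorithm, which has more levels (and honors settings such as ansible_group_priority). The function name `resolve_host_vars` and the sample values are illustrative assumptions.

```python
def resolve_host_vars(defaults, global_vars, cluster_vars, host_vars):
    """Shallow-merge variable layers; later layers override earlier ones."""
    merged = {}
    for layer in (defaults, global_vars, cluster_vars, host_vars):
        merged.update(layer)
    return merged


defaults = {"pg_version": 12, "pg_port": 5432, "pg_role": "replica"}  # role defaults
global_vars = {"node_tune": "tiny"}                                   # all.vars
cluster_vars = {"pg_cluster": "pg-test", "pg_version": 13}            # all.children.<cluster>.vars
host_vars = {"pg_role": "primary", "pg_seq": 1}                       # all.children.<cluster>.hosts.<host>

resolved = resolve_host_vars(defaults, global_vars, cluster_vars, host_vars)
print(resolved["pg_version"], resolved["pg_role"], resolved["pg_port"])  # 13 primary 5432
```

Here the cluster-level pg_version replaces the default, the host-level pg_role replaces the default role, and pg_port falls through from the defaults untouched.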

Many variables can be defined; refer to the role documentation for details.

Variables are divided into 8 sections

Here is an example for the demo environment:

---
######################################################################
# File : dev.yml
# Path : inventory/dev.yml
# Desc : Configuration file for development (demo) environment
# Note : follow ansible inventory file format
# Ctime : 2020-09-22
# Mtime : 2020-09-22
# Copyright (C) 2019-2020 Ruohang Feng
######################################################################

######################################################################
# Development Environment Inventory #
######################################################################
all: # top-level namespace, match all hosts

  #==================================================================#
  # Clusters #
  #==================================================================#
  children: # top-level groups, one group per database cluster (and special group 'meta')

    #-----------------------------
    # meta controller
    #-----------------------------
    meta: # special group 'meta' defines the main controller machine
      vars:
        meta_node: true # mark node as meta controller
        ansible_group_priority: 99 # meta group is top priority

      # nodes in meta group (1-3)
      hosts:
        10.10.10.10: # meta node IP ADDRESS
          ansible_host: meta # comment this if not access via ssh alias

    #-----------------------------
    # cluster: pg-meta
    #-----------------------------
    pg-meta:
      # - cluster configs - #
      vars:
        # basic settings
        pg_cluster: pg-meta # define actual cluster name
        pg_version: 13 # define installed pgsql version
        node_tune: oltp # tune node into oltp|olap|crit|tiny mode
        pg_conf: oltp.yml # tune pgsql into oltp/olap/crit/tiny mode

        # misc
        patroni_mode: pause # enter maintenance mode, {default|pause|remove}
        patroni_watchdog_mode: off # disable watchdog (require|automatic|off)
        pg_hostname: false # overwrite node hostname with pg instance name
        pg_nodename: true # overwrite consul nodename with pg instance name

        # bootstrap template
        pg_init: initdb.sh # bootstrap postgres cluster with initdb.sh
        pg_default_username: meta # default business username
        pg_default_password: meta # default business password
        pg_default_database: meta # default database name

        # vip settings
        vip_enabled: true # enable/disable vip (require members in same LAN)
        vip_address: 10.10.10.2 # virtual ip address
        vip_cidrmask: 8 # cidr network mask length
        vip_interface: eth1 # interface to add virtual ip

      # - cluster members - #
      hosts:
        10.10.10.10:
          ansible_host: meta # comment this if not access via ssh alias
          pg_role: primary # initial role: primary & replica
          pg_seq: 1 # instance sequence among cluster

    #-----------------------------
    # cluster: pg-test
    #-----------------------------
    pg-test: # define cluster named 'pg-test'
      # - cluster configs - #
      vars:
        # basic settings
        pg_cluster: pg-test # define actual cluster name
        pg_version: 13 # define installed pgsql version
        node_tune: tiny # tune node into oltp|olap|crit|tiny mode
        pg_conf: tiny.yml # tune pgsql into oltp/olap/crit/tiny mode

        # bootstrap template
        pg_init: initdb.sh # bootstrap postgres cluster with initdb.sh
        pg_default_username: test # default business username
        pg_default_password: test # default business password
        pg_default_database: test # default database name

        # vip settings
        vip_enabled: true # enable/disable vip (require members in same LAN)
        vip_address: 10.10.10.3 # virtual ip address
        vip_cidrmask: 8 # cidr network mask length
        vip_interface: eth1 # interface to add virtual ip

      # - cluster members - #
      hosts:
        10.10.10.11:
          ansible_host: node-1 # comment this if not access via ssh alias
          pg_role: primary # initial role: primary & replica
          pg_seq: 1 # instance sequence among cluster

        10.10.10.12:
          ansible_host: node-2 # comment this if not access via ssh alias
          pg_role: replica # initial role: primary & replica
          pg_seq: 2 # instance sequence among cluster

        10.10.10.13:
          ansible_host: node-3 # comment this if not access via ssh alias
          pg_role: replica # initial role: primary & replica
          pg_seq: 3 # instance sequence among cluster

  #==================================================================#
  # Globals #
  #==================================================================#
  vars:

    #------------------------------------------------------------------------------
    # CONNECTION PARAMETERS
    #------------------------------------------------------------------------------
    # this section defines connection parameters

    # ansible_user: vagrant # admin user with ssh access and sudo privilege

    proxy_env: # global proxy env when downloading packages
      no_proxy: "localhost,127.0.0.1,10.0.0.0/8,192.168.0.0/16,*.pigsty,*.aliyun.com"

    #------------------------------------------------------------------------------
    # REPO PROVISION
    #------------------------------------------------------------------------------
    # this section defines how to build a local repo

    repo_enabled: true # build local yum repo on meta nodes?
    repo_name: pigsty # local repo name
    repo_address: yum.pigsty # repo external address (ip:port or url)
    repo_port: 80 # listen address, must same as repo_address
    repo_home: /www # default repo dir location
    repo_rebuild: false # force re-download packages
    repo_remove: true # remove existing repos

    # - where to download - #
    repo_upstreams:
      - name: base
        description: CentOS-$releasever - Base - Aliyun Mirror
        baseurl:
          - http://mirrors.aliyun.com/centos/$releasever/os/$basearch/
          - http://mirrors.aliyuncs.com/centos/$releasever/os/$basearch/
          - http://mirrors.cloud.aliyuncs.com/centos/$releasever/os/$basearch/
        gpgcheck: no
        failovermethod: priority

      - name: updates
        description: CentOS-$releasever - Updates - Aliyun Mirror
        baseurl:
          - http://mirrors.aliyun.com/centos/$releasever/updates/$basearch/
          - http://mirrors.aliyuncs.com/centos/$releasever/updates/$basearch/
          - http://mirrors.cloud.aliyuncs.com/centos/$releasever/updates/$basearch/
        gpgcheck: no
        failovermethod: priority

      - name: extras
        description: CentOS-$releasever - Extras - Aliyun Mirror
        baseurl:
          - http://mirrors.aliyun.com/centos/$releasever/extras/$basearch/
          - http://mirrors.aliyuncs.com/centos/$releasever/extras/$basearch/
          - http://mirrors.cloud.aliyuncs.com/centos/$releasever/extras/$basearch/
        gpgcheck: no
        failovermethod: priority

      - name: epel
        description: CentOS $releasever - EPEL - Aliyun Mirror
        baseurl: http://mirrors.aliyun.com/epel/$releasever/$basearch
        gpgcheck: no
        failovermethod: priority

      - name: grafana
        description: Grafana - TsingHua Mirror
        gpgcheck: no
        baseurl: https://mirrors.tuna.tsinghua.edu.cn/grafana/yum/rpm

      - name: prometheus
        description: Prometheus and exporters
        gpgcheck: no
        baseurl: https://packagecloud.io/prometheus-rpm/release/el/$releasever/$basearch

      - name: pgdg-common
        description: PostgreSQL common RPMs for RHEL/CentOS $releasever - $basearch
        gpgcheck: no
        baseurl: https://download.postgresql.org/pub/repos/yum/common/redhat/rhel-$releasever-$basearch

      - name: pgdg13
        description: PostgreSQL 13 for RHEL/CentOS $releasever - $basearch - Updates testing
        gpgcheck: no
        baseurl: https://download.postgresql.org/pub/repos/yum/13/redhat/rhel-$releasever-$basearch

      - name: centos-sclo
        description: CentOS-$releasever - SCLo
        gpgcheck: no
        mirrorlist: http://mirrorlist.centos.org?arch=$basearch&release=7&repo=sclo-sclo

      - name: centos-sclo-rh
        description: CentOS-$releasever - SCLo rh
        gpgcheck: no
        mirrorlist: http://mirrorlist.centos.org?arch=$basearch&release=7&repo=sclo-rh

      - name: nginx
        description: Nginx Official Yum Repo
        skip_if_unavailable: true
        gpgcheck: no
        baseurl: http://nginx.org/packages/centos/$releasever/$basearch/

      - name: haproxy
        description: Copr repo for haproxy
        skip_if_unavailable: true
        gpgcheck: no
        baseurl: https://download.copr.fedorainfracloud.org/results/roidelapluie/haproxy/epel-$releasever-$basearch/

    # - what to download - #
    repo_packages:
      # repo bootstrap packages
      - epel-release nginx wget yum-utils yum createrepo # bootstrap packages

      # node basic packages
      - ntp chrony uuid lz4 nc pv jq vim-enhanced make patch bash lsof wget unzip git tuned # basic system util
      - readline zlib openssl libyaml libxml2 libxslt perl-ExtUtils-Embed ca-certificates # basic pg dependency
      - numactl grubby sysstat dstat iotop bind-utils net-tools tcpdump socat ipvsadm telnet # system utils

      # dcs & monitor packages
      - grafana prometheus2 pushgateway alertmanager # monitor and ui
      - node_exporter postgres_exporter nginx_exporter blackbox_exporter # exporter
      - consul consul_exporter consul-template etcd # dcs

      # python3 dependencies
      - ansible python python-pip python-psycopg2 # ansible & python
      - python3 python3-psycopg2 python36-requests python3-etcd python3-consul # python3
      - python36-urllib3 python36-idna python36-pyOpenSSL python36-cryptography # python3 patroni extra deps

      # proxy and load balancer
      - haproxy keepalived dnsmasq # proxy and dns

      # postgres common Packages
      - patroni patroni-consul patroni-etcd pgbouncer pg_cli pgbadger pg_activity # major components
      - pgcenter boxinfo check_postgres emaj pgbconsole pg_bloat_check pgquarrel # other common utils
      - barman barman-cli pgloader pgFormatter pitrery pspg pgxnclient PyGreSQL pgadmin4

      # postgres 13 packages
      - postgresql13* postgis31* # postgres 13 and postgis 31
      - pg_qualstats13 pg_stat_kcache13 system_stats_13 bgw_replstatus13 # stats extensions
      - plr13 plsh13 plpgsql_check_13 pldebugger13 # pl extensions
      - hdfs_fdw_13 mongo_fdw13 mysql_fdw_13 ogr_fdw13 redis_fdw_13 # FDW extensions
      - wal2json13 count_distinct13 ddlx_13 geoip13 orafce13 # other extensions
      - hypopg_13 ip4r13 jsquery_13 logerrors_13 periods_13 pg_auto_failover_13 pg_catcheck13
      - pg_fkpart13 pg_jobmon13 pg_partman13 pg_prioritize_13 pg_track_settings13 pgaudit15_13
      - pgcryptokey13 pgexportdoc13 pgimportdoc13 pgmemcache-13 pgmp13 pgq-13 # pgrouting_13
      - pguint13 pguri13 prefix13 safeupdate_13 semver13 table_version13 tdigest13

# Postgres 12 Packages

# - postgresql12* postgis30_12* timescaledb_12 citus_12 pglogical_12 # postgres 12 basic

# - pg_qualstats12 pg_cron_12 pg_repack12 pg_squeeze12 pg_stat_kcache12 wal2json12 pgpool-II-12 pgpool-II-12-extensions python3-psycopg2 python2-psycopg2

# - ddlx_12 bgw_replstatus12 count_distinct12 extra_window_functions_12 geoip12 hll_12 hypopg_12 ip4r12 jsquery_12 multicorn12 osm_fdw12 mysql_fdw_12 ogr_fdw12 mongo_fdw12 hdfs_fdw_12 cstore_fdw_12 wal2mongo12 orafce12 pagila12 pam-pgsql12 passwordcheck_cracklib12 periods_12 pg_auto_failover_12 pg_bulkload12 pg_catcheck12 pg_comparator12 pg_filedump12 pg_fkpart12 pg_jobmon12 pg_partman12 pg_pathman12 pg_track_settings12 pg_wait_sampling_12 pgagent_12 pgaudit14_12 pgauditlogtofile-12 pgbconsole12 pgcryptokey12 pgexportdoc12 pgfincore12 pgimportdoc12 pgmemcache-12 pgmp12 pgq-12 pgrouting_12 pgtap12 plpgsql_check_12 plr12 plsh12 postgresql_anonymizer12 postgresql-unit12 powa_12 prefix12 repmgr12 safeupdate_12 semver12 slony1-12 sqlite_fdw12 sslutils_12 system_stats_12 table_version12 topn_12

repo_url_packages:

- https://github.com/Vonng/pg_exporter/releases/download/v0.2.0/pg_exporter-0.2.0-1.el7.x86_64.rpm

- https://github.com/cybertec-postgresql/vip-manager/releases/download/v0.6/vip-manager_0.6-1_amd64.rpm

- http://guichaz.free.fr/polysh/files/polysh-0.4-1.noarch.rpm

#------------------------------------------------------------------------------

# NODE PROVISION

#------------------------------------------------------------------------------

# this section defines how to provision nodes

# - node dns - #

node_dns_hosts: # static dns records in /etc/hosts

- 10.10.10.10 yum.pigsty

node_dns_server: add # add (default) | none (skip) | overwrite (remove old settings)

node_dns_servers: # dynamic nameserver in /etc/resolv.conf

- 10.10.10.10

node_dns_options: # dns resolv options

- options single-request-reopen timeout:1 rotate

- domain service.consul

# - node repo - #

node_repo_method: local # none|local|public (use local repo for production env)

node_repo_remove: true # whether to remove existing repos

# local repo url (if method=local, make sure firewall is configured or disabled)

node_local_repo_url:

- http://yum.pigsty/pigsty.repo

# - node packages - #

node_packages: # common packages for all nodes

- wget,yum-utils,ntp,chrony,tuned,uuid,lz4,vim-minimal,make,patch,bash,lsof,unzip,git,readline,zlib,openssl

- numactl,grubby,sysstat,dstat,iotop,bind-utils,net-tools,tcpdump,socat,ipvsadm,telnet,pv,jq

- python3,python3-psycopg2,python36-requests,python3-etcd,python3-consul

- python36-urllib3,python36-idna,python36-pyOpenSSL,python36-cryptography

- node_exporter,consul,consul-template,etcd,haproxy,keepalived,vip-manager

node_extra_packages: # extra packages for all nodes

- patroni,patroni-consul,patroni-etcd,pgbouncer,pgbadger,pg_activity

node_meta_packages: # packages for meta nodes only

- grafana,prometheus2,alertmanager,nginx_exporter,blackbox_exporter,pushgateway

- dnsmasq,nginx,ansible,pgbadger,polysh

# - node features - #

node_disable_numa: false # disable numa, important for production database, reboot required

node_disable_swap: false # disable swap, important for production database

node_disable_firewall: true # disable firewall (required if using kubernetes)

node_disable_selinux: true # disable selinux (required if using kubernetes)

node_static_network: true # keep dns resolver settings after reboot

node_disk_prefetch: false # setup disk prefetch on HDD to increase performance
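
# Sketch (not part of the original file): the comments above mark the NUMA and swap switches as important for production databases, so a production override might look like the two lines below

# node_disable_numa: true # assumed production value; a reboot is required for it to take effect

# node_disable_swap: true # assumed production value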

# - node kernel modules - #

node_kernel_modules:

- softdog

- br_netfilter

- ip_vs

- ip_vs_rr

- ip_vs_wrr

- ip_vs_sh

- nf_conntrack_ipv4

# - node tuned - #

node_tune: tiny # install and activate tuned profile: none|oltp|olap|crit|tiny

node_sysctl_params: # set additional sysctl parameters, k:v format

net.bridge.bridge-nf-call-iptables: 1 # for kubernetes

# - node user - #

node_admin_setup: true # set up a default admin user?

node_admin_uid: 88 # uid and gid for admin user

node_admin_username: admin # default admin user

node_admin_ssh_exchange: true # exchange ssh keys among cluster nodes?

node_admin_pks: # public key list that will be installed

- 'ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQC7IMAMNavYtWwzAJajKqwdn3ar5BhvcwCnBTxxEkXhGlCO2vfgosSAQMEflfgvkiI5nM1HIFQ8KINlx1XLO7SdL5KdInG5LIJjAFh0pujS4kNCT9a5IGvSq1BrzGqhbEcwWYdju1ZPYBcJm/MG+JD0dYCh8vfrYB/cYMD0SOmNkQ== vagrant@pigsty.com'

# - node ntp - #

node_ntp_service: ntp # ntp or chrony

node_ntp_config: true # overwrite existing ntp config?

node_timezone: Asia/Shanghai # default node timezone

node_ntp_servers: # default NTP servers

- pool cn.pool.ntp.org iburst

- pool pool.ntp.org iburst

- pool time.pool.aliyun.com iburst

- server 10.10.10.10 iburst

#------------------------------------------------------------------------------

# META PROVISION

#------------------------------------------------------------------------------

# - ca - #

ca_method: create # create|copy|recreate

ca_subject: "/CN=root-ca" # self-signed CA subject

ca_homedir: /ca # ca cert directory

ca_cert: ca.crt # ca public key/cert

ca_key: ca.key # ca private key

# - nginx - #

nginx_upstream:

- { name: consul, host: c.pigsty, url: "127.0.0.1:8500" }

- { name: grafana, host: g.pigsty, url: "127.0.0.1:3000" }

- { name: prometheus, host: p.pigsty, url: "127.0.0.1:9090" }

- { name: alertmanager, host: a.pigsty, url: "127.0.0.1:9093" }

# - nameserver - #

dns_records: # dynamic dns record resolved by dnsmasq

- 10.10.10.2 pg-meta # sandbox vip for pg-meta

- 10.10.10.3 pg-test # sandbox vip for pg-test

- 10.10.10.10 meta-1 # sandbox node meta-1 (node-0)

- 10.10.10.11 node-1 # sandbox node node-1

- 10.10.10.12 node-2 # sandbox node node-2

- 10.10.10.13 node-3 # sandbox node node-3

- 10.10.10.10 pigsty

- 10.10.10.10 y.pigsty yum.pigsty

- 10.10.10.10 c.pigsty consul.pigsty

- 10.10.10.10 g.pigsty grafana.pigsty

- 10.10.10.10 p.pigsty prometheus.pigsty

- 10.10.10.10 a.pigsty alertmanager.pigsty

- 10.10.10.10 n.pigsty ntp.pigsty

# - prometheus - #

prometheus_scrape_interval: 2s # global scrape & evaluation interval (2s for dev, 15s for prod)

prometheus_scrape_timeout: 1s # global scrape timeout (1s for dev, 8s for prod)

prometheus_metrics_path: /metrics # default metrics path (only affect job 'pg')

prometheus_data_dir: /export/prometheus/data # prometheus data dir

prometheus_retention: 30d # how long to keep metrics data
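
# Sketch (not part of the original file): the scrape settings above are the dev values; following the hints in their comments, a production deployment might use the two lines below

# prometheus_scrape_interval: 15s # assumed production value, taken from the "15s for prod" hint

# prometheus_scrape_timeout: 8s # assumed production value, taken from the "8s for prod" hint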

# - grafana - #

grafana_url: http://10.10.10.10:3000 # grafana url

grafana_admin_password: admin # default grafana admin user password

grafana_plugin: install # none|install|reinstall

grafana_cache: /www/pigsty/grafana/plugins.tar.gz # path to grafana plugins tarball

grafana_provision_mode: db # none|db|api

grafana_plugins: # default grafana plugins list

- redis-datasource

- simpod-json-datasource

- fifemon-graphql-datasource

- sbueringer-consul-datasource

- camptocamp-prometheus-alertmanager-datasource

- ryantxu-ajax-panel

- marcusolsson-hourly-heatmap-panel

- michaeldmoore-multistat-panel

- marcusolsson-treemap-panel

- pr0ps-trackmap-panel

- dalvany-image-panel

- magnesium-wordcloud-panel

- cloudspout-button-panel

- speakyourcode-button-panel

- jdbranham-diagram-panel

- grafana-piechart-panel

- snuids-radar-panel

- digrich-bubblechart-panel

grafana_git_plugins:

- https://github.com/Vonng/grafana-echarts

# grafana_dashboards: [] # default dashboards (use role default)

#------------------------------------------------------------------------------

# DCS PROVISION

#------------------------------------------------------------------------------

dcs_type: consul # consul | etcd | both

dcs_name: pigsty # consul dc name | etcd initial cluster token

# dcs server dict in name:ip format

dcs_servers:

meta-1: 10.10.10.10 # you could use existing dcs cluster

# meta-2: 10.10.10.11 # hosts whose IPs are listed here will be initialized as servers

# meta-3: 10.10.10.12 # 3 or 5 dcs nodes are recommended for production environments

dcs_exists_action: skip # abort|skip|clean if dcs server already exists

consul_data_dir: /var/lib/consul # consul data dir (/var/lib/consul by default)

etcd_data_dir: /var/lib/etcd # etcd data dir (/var/lib/etcd by default)

#------------------------------------------------------------------------------

# POSTGRES INSTALLATION

#------------------------------------------------------------------------------

# - dbsu - #

pg_dbsu: postgres # os user for database, postgres by default (changing it is not recommended!)

pg_dbsu_uid: 26 # os dbsu uid and gid, 26 for default postgres users and groups

pg_dbsu_sudo: limit # none|limit|all|nopass (Privilege for dbsu, limit is recommended)

pg_dbsu_home: /var/lib/pgsql # home directory of the dbsu

pg_dbsu_ssh_exchange: false # exchange ssh keys among nodes of the same cluster

# - postgres packages - #

pg_version: 12 # default postgresql version

pgdg_repo: false # use official pgdg yum repo (disable if you have local mirror)

pg_add_repo: false # add postgres related repo before install (useful if you want a simple install)

pg_bin_dir: /usr/pgsql/bin # postgres binary dir

pg_packages:

- postgresql${pg_version}*

- postgis31_${pg_version}*

- pgbouncer patroni pg_exporter pgbadger

- patroni patroni-consul patroni-etcd pgbouncer pgbadger pg_activity

- python3 python3-psycopg2 python36-requests python3-etcd python3-consul

- python36-urllib3 python36-idna python36-pyOpenSSL python36-cryptography

pg_extensions:

- pg_qualstats${pg_version} pg_stat_kcache${pg_version} wal2json${pg_version}

# - ogr_fdw${pg_version} mysql_fdw_${pg_version} redis_fdw_${pg_version} mongo_fdw${pg_version} hdfs_fdw_${pg_version}

# - count_distinct${pg_version} ddlx_${pg_version} geoip${pg_version} orafce${pg_version} # popular features

# - hypopg_${pg_version} ip4r${pg_version} jsquery_${pg_version} logerrors_${pg_version} periods_${pg_version} pg_auto_failover_${pg_version} pg_catcheck${pg_version}

# - pg_fkpart${pg_version} pg_jobmon${pg_version} pg_partman${pg_version} pg_prioritize_${pg_version} pg_track_settings${pg_version} pgaudit15_${pg_version}

# - pgcryptokey${pg_version} pgexportdoc${pg_version} pgimportdoc${pg_version} pgmemcache-${pg_version} pgmp${pg_version} pgq-${pg_version} pgquarrel pgrouting_${pg_version}

# - pguint${pg_version} pguri${pg_version} prefix${pg_version} safeupdate_${pg_version} semver${pg_version} table_version${pg_version} tdigest${pg_version}

#------------------------------------------------------------------------------

# POSTGRES CLUSTER PROVISION

#------------------------------------------------------------------------------

# - identity - #

# pg_cluster: # [REQUIRED] cluster name (validated during pg_preflight)

# pg_seq: 0 # [REQUIRED] instance seq (validated during pg_preflight)

# pg_role: replica # [REQUIRED] service role (validated during pg_preflight)

pg_hostname: false # overwrite node hostname with pg instance name

pg_nodename: true # overwrite consul nodename with pg instance name

# - retention - #

# pg_exists_action, available options: abort|clean|skip

# - abort: abort entire play's execution (default)

# - clean: remove existing cluster (dangerous)

# - skip: end current play for this host

# pg_exists: false # auxiliary flag variable (DO NOT SET THIS)

pg_exists_action: clean
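
# Sketch (not part of the original file): the value above removes an existing cluster, which the option notes call dangerous; a more conservative setting, assuming you want a run to stop rather than wipe data, would be the line below

# pg_exists_action: abort # assumed production choice: abort the play when a cluster already exists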

# - storage - #

pg_data: /pg/data # postgres data directory

pg_fs_main: /export # data disk mount point /pg -> {{ pg_fs_main }}/postgres/{{ pg_instance }}

pg_fs_bkup: /var/backups # backup disk mount point /pg/* -> {{ pg_fs_bkup }}/postgres/{{ pg_instance }}/*

# - connection - #

pg_listen: '0.0.0.0' # postgres listen address, '0.0.0.0' by default (all ipv4 addr)

pg_port: 5432 # postgres port (5432 by default)

# - patroni - #

# patroni_mode, available options: default|pause|remove

# - default: default ha mode

# - pause: enter maintenance mode

# - remove: remove patroni after bootstrap

patroni_mode: default # pause|default|remove

pg_namespace: /pg # top level key namespace in dcs

patroni_port: 8008 # default patroni port

patroni_watchdog_mode: automatic # watchdog mode: off|automatic|required

# - template - #

pg_conf: tiny.yml # user provided patroni config template path

pg_init: initdb.sh # user provided post-init script path, default: initdb.sh

# - authentication - #

pg_hba_common:

- '"# allow: meta node access with password"'

- host all all 10.10.10.10/32 md5

- '"# allow: intranet admin role with password"'

- host all +dbrole_admin 10.0.0.0/8 md5

- host all +dbrole_admin 172.16.0.0/12 md5

- host all +dbrole_admin 192.168.0.0/16 md5

- '"# allow local (pgbouncer) read-write user (production user) password access"'

- local all +dbrole_readwrite md5

- host all +dbrole_readwrite 127.0.0.1/32 md5

- '"# intranet common user password access"'

- host all all 10.0.0.0/8 md5

- host all all 172.16.0.0/12 md5

- host all all 192.168.0.0/16 md5

pg_hba_primary: [ ]

pg_hba_replica: