This the multi-page printable view of this section. Click here to print.

Monitoring

- 1: Hierarchy

- 2: Observability

- 3: Identity Management

- 4: Metrics

- 5: Alert Rules

1 - Hierarchy

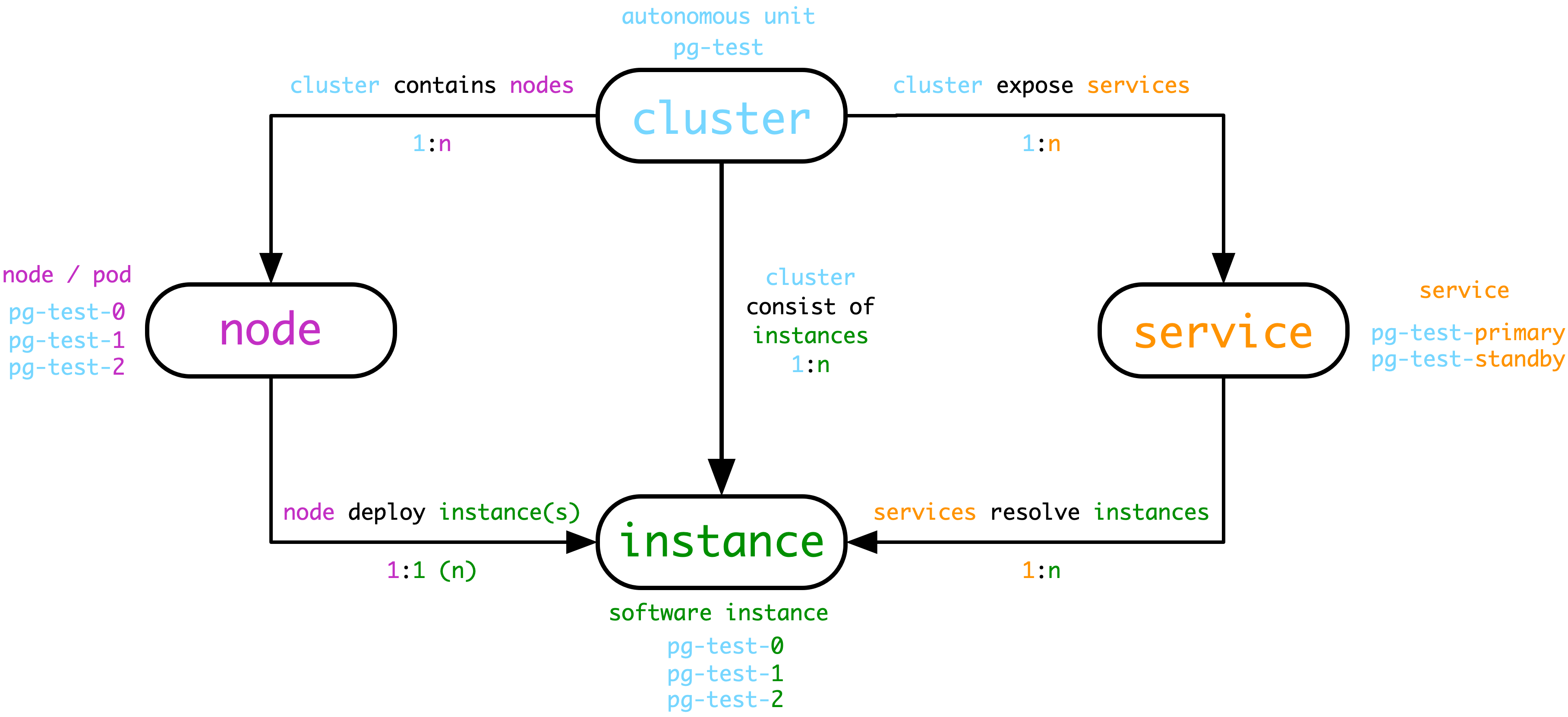

As in Naming Principle, objects in Pigsty are divided into multiple levels: clusters, services, instances, and nodes.

Monitoring system hierarchy

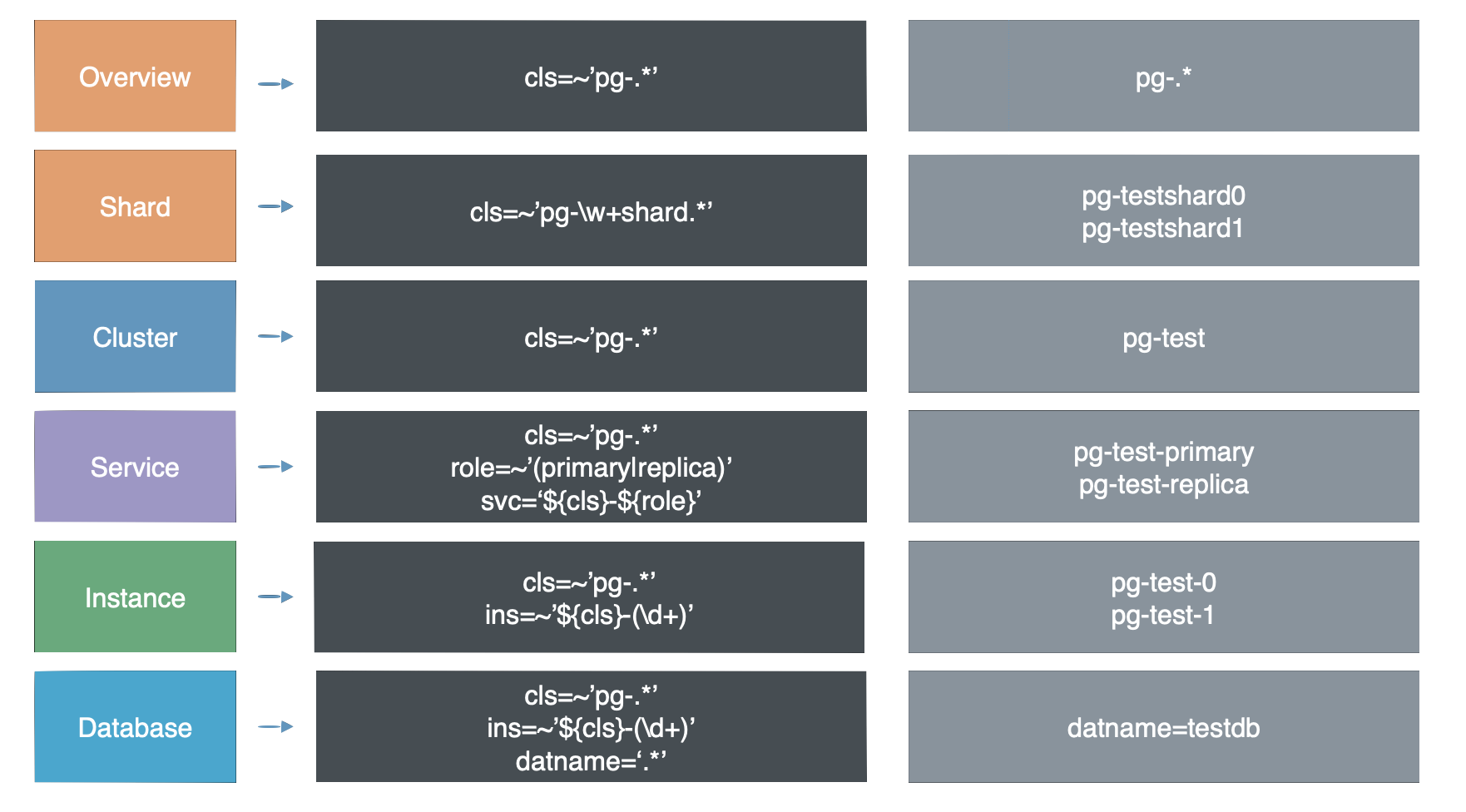

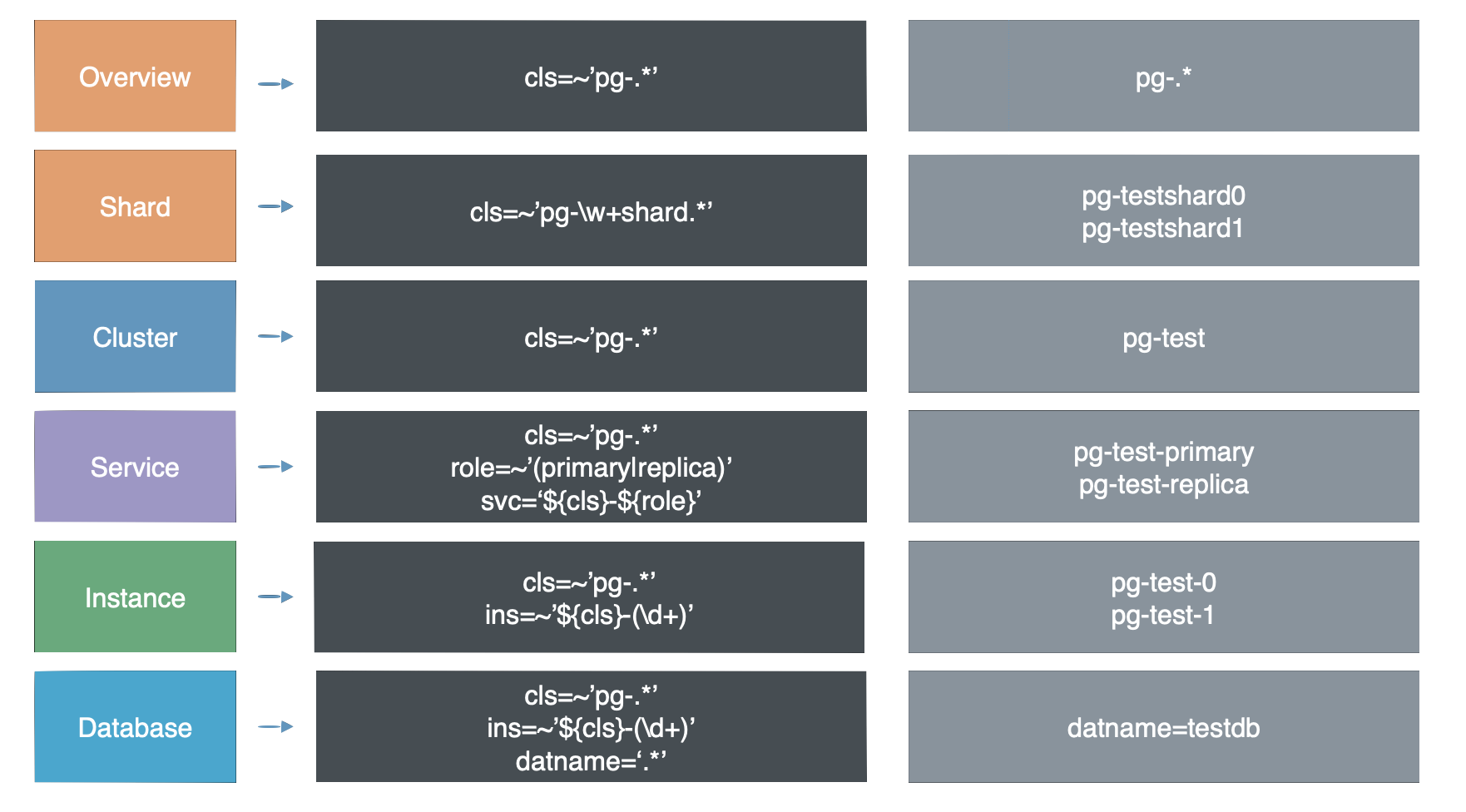

Pigsty’s monitoring system has more layers. In addition to the two most common layers, Instance and Cluster, there are other layers of organization throughout the system. From the top down, there are 7 levels: **Overview, Slice, Cluster, Service, Instance, Database, Object. **

Figure : Pigsty’s monitoring panel is divided into 7 logical hierarchies and 5 implementation hierarchies

Logical levels

Databases in production environments are often organized in clusters, which are the basic business service units and the most important level of monitoring.

A cluster is a set of database instances associated by master-slave replication, and instance is the most basic level of monitoring.

Whereas multiple database clusters together form a real-world production environment, the Overview level of monitoring provides an overall description of the entire environment.

Multiple database clusters serving the same business in a horizontally split pattern are called Shards, and monitoring at the shard level is useful for locating data distribution, skew, and other issues.

Service is the layer sandwiched between the cluster and the instance, and the service is usually closely associated with resources such as DNS, domain names, VIPs, NodePort, etc.

Database is a sub-instance level object where a database cluster/instance may have multiple databases existing at the same time, and database level monitoring is concerned with activity within a single database.

Object are entities within a database, including tables, indexes, sequence numbers, functions, queries, connection pools, etc. Object-level monitoring focuses on the statistical metrics of these objects, which are closely related to the business.

Hierarchical Streamlining

As a streamlining, just as the OSI 7-layer model for networking was reduced in practice to a five-layer model for TCP/IP, these seven layers were also reduced to five layers bounded by Clusters and Instances: Overview , Clusters , Services , Instance , Databases .

This makes the final hierarchy very concise: all information above the cluster level is the Overview level, all monitoring below the instance level is counted as the Database level, and sandwiched between the Cluster and Instance is the Service level.

Naming Rules

After the hierarchy, the most important issue is naming.

-

there needs to be a way to identify and refer to the components within the different layers of the system.

-

the naming convention should reasonably reflect the hierarchical relationship of the entities in the system

-

the naming scheme should be automatically generated according to the rules, so that it can run maintenance-free and automatically when the cluster is scaled up and down and Failover.

Once we have clarified the hierarchy of the system, we can proceed to name each entity in the system.

For the basic naming rules followed by Pigsty, please refer to Naming Principles section.

Pigsty uses an independent name management mechanism and the naming of entities is self-contained.

If you need to interface with external systems, you can use this naming system directly, or adopt your own naming system by transferring the adaptation.

Cluster Naming

Pigsty’s cluster names are specified by the user and satisfy the regular expression of [a-z0-9][a-z0-9-]*, in the form of pg-test, pg-meta.

node naming

Pigsty’s nodes are subordinate to clusters. pigsty’s node names consist of two parts: [cluster name](#cluster naming) and node number, and are concatenated with -.

The form is ${pg_cluster}-${pg_seq}, e.g. pg-meta-1, pg-test-2.

Formally, node numbers are natural numbers of reasonable length (including 0), unique within the cluster, and each node has its own number.

Instance numbers can be explicitly specified and assigned by the user, usually using an assignment starting from 0 or 1, once assigned, they do not change again for the lifetime of the cluster.

Instance Naming

Pigsty’s instance is subordinate to the cluster and is deployed in an exclusive node style.

Since there is a one-to-one correspondence between instances and nodes, the instance name remains the same as the node life.

Service Naming

Pigsty’s Service is subordinate to the cluster. pigsty’s service name consists of two parts: [cluster name](#cluster naming) and Role, and is concatenated with -.

The form is ${pg_cluster}-${pg_role}, e.g. pg-meta-primary, pg-test-replica.

The options available for pg_role include: primary|replica|offline|delayed.

primary is a special role that each cluster must and can only define one instance of pg_role = primary as the primary library.

The other roles are largely user-defined, with replica|offline|delayed being a Pigsty predefined role.

What next?

After delineating the monitoring hierarchy, you need to [assign identity] to the monitoring object (../identity) to be able to manage them.

2 - Observability

对于系统管理来说,最重要到问题之一就是可观测性(Observability),下图展示了Postgres的可观测性。

原图地址:https://pgstats.dev/

PostgreSQL 提供了丰富的观测接口,包括系统目录,统计视图,辅助函数。 这些都是用户可以观测的信息。这里列出的信息全部为Pigsty所收录。Pigsty通过精心的设计,将晦涩的指标数据,转换成了人类可以轻松理解的洞察。

可观测性

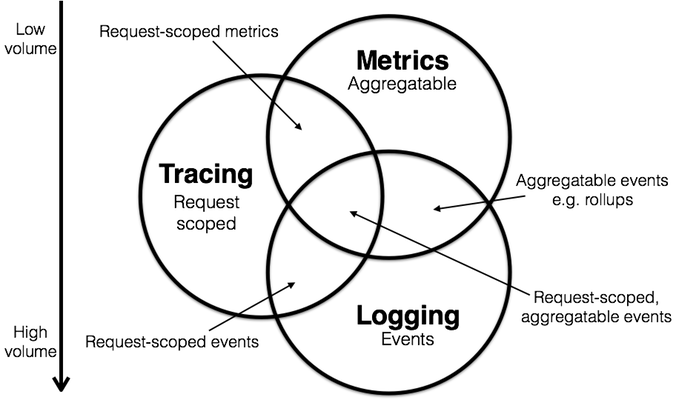

经典的监控模型中,有三类重要信息:

Pigsty重点关注 指标 信息,也会在后续加入对 日志 的采集、处理与展示,但Pigsty不会收集数据库的 追踪 信息。

指标

下面让以一个具体的例子来介绍指标的获取及其加工产物。

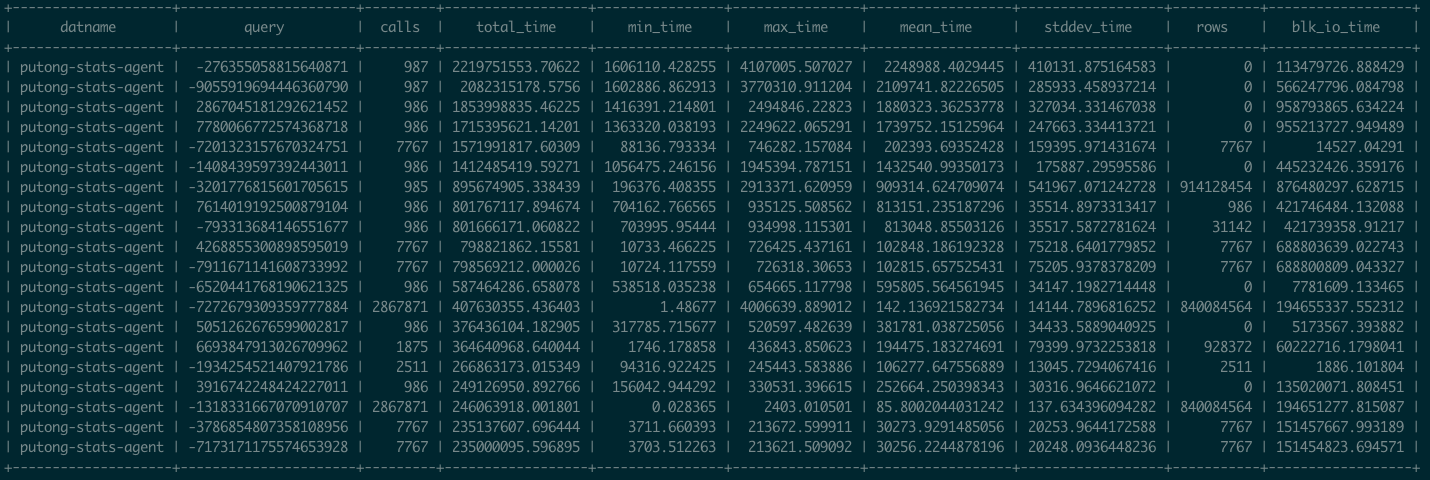

pg_stat_statements是Postgres官方提供的统计插件,可以暴露出数据库中执行的每一类查询的详细统计指标。

图:

pg_stat_statements原始数据视图

这里pg_stat_statements提供的原始指标数据以表格的形式呈现。每一类查询都分配有一个查询ID,紧接着是调用次数,总耗时,最大、最小、平均单次耗时,响应时间都标准差,每次调用平均返回的行数,用于块IO的时间这些指标,(如果是PG13,还有更为细化的计划时间、执行时间、产生的WAL记录数量等新指标)。

这些系统视图与系统信息函数,就是Pigsty中指标数据的原始来源。直接查阅这种数据表很容易让人眼花缭乱,失去焦点。需要将这种指标转换为洞察,也就是以直观图表的方式呈现。

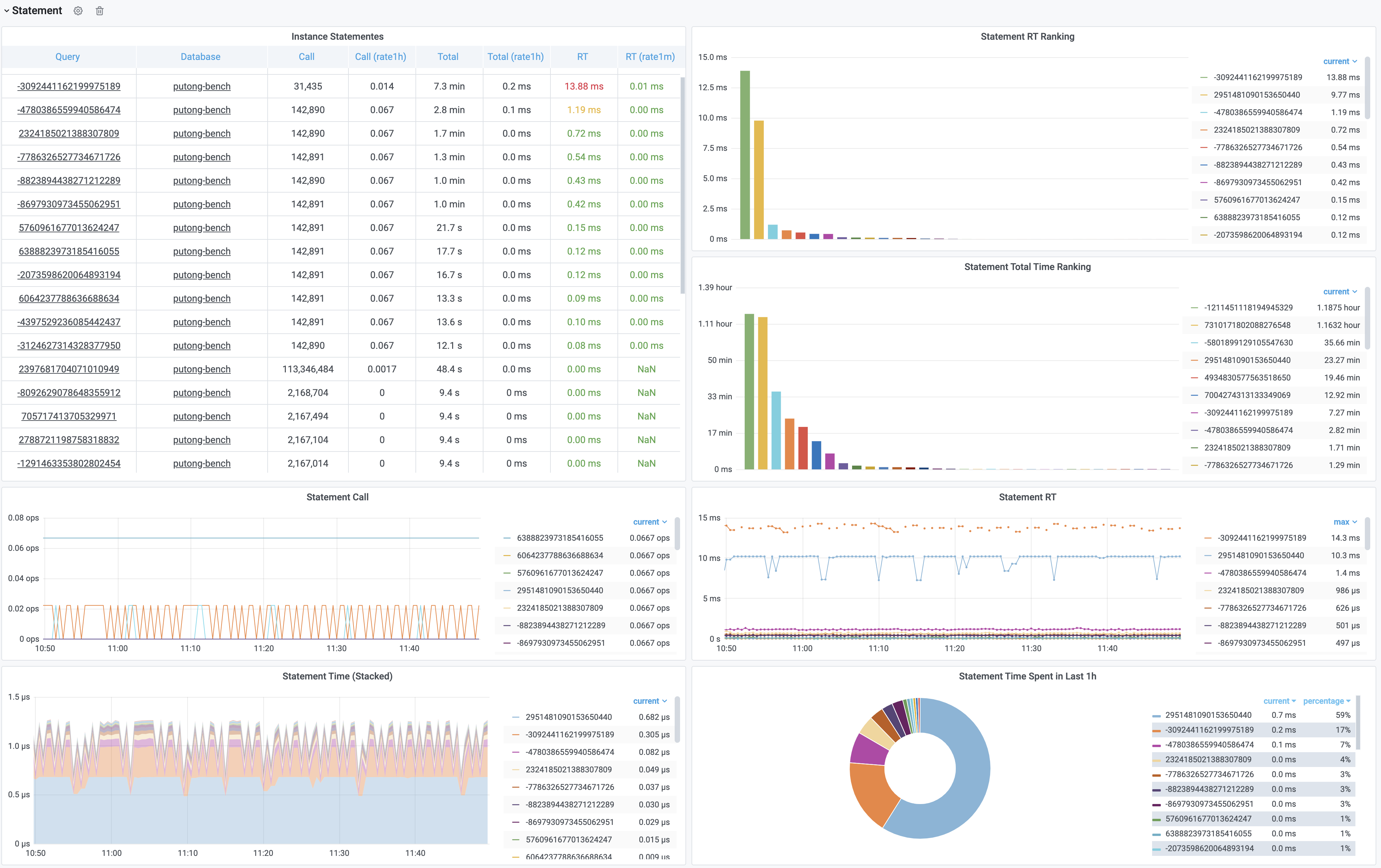

图:加工后的相关监控面板,PG Cluster Query看板部分截图

这里的表格数据经过一系列的加工处理,最终呈现为若干监控面板。最基本的数据加工是对表格中的原始数据进行标红上色,但也足以提供相当实用的改进:慢查询一览无余,但这不过是雕虫小技。重要的是,原始数据视图只能呈现当前时刻的快照;而通过Pigsty,用户可以回溯任意时刻或任意时间段。获取更深刻的性能洞察。

上图是集群视角下的查询看板 (PG Cluster Query),用户可以看到整个集群中所有查询的概览,包括每一类查询的QPS与RT,平均响应时间排名,以及耗费的总时间占比。

当用户对某一类具体查询感兴趣时,就可以点击查询ID,跳转到查询详情页(PG Query Detail)中。如下图所示。这里会显示查询的语句,以及一些核心指标。

图:呈现单类查询的详细信息,PG Query Detail 看板截图

上图是实际生产环境中的一次慢查询优化记录,用户可以从右侧中间的Realtime Response Time 面板中发现一个突变。该查询的平均响应时间从七八秒突降到了七八毫秒。我们定位到了这个慢查询并添加了适当的索引,那么优化的效果就立刻在图表上以直观的形式展现出来,给出实时的反馈。

这就是Pigsty需要解决的核心问题:From observability to insight。

日志

除了指标外,还有一类重要的观测数据:日志(Log),日志是对离散事件的记录与描述。

如果说指标是对数据库系统的被动观测,那么日志就是数据库系统及其周边组件主动上报的信息。

Pigsty目前尚未对数据库日志进行挖掘,但在后续的版本中将集成pgbadger与mtail,引入日志统一收集、分析、处理的基础设施。并添加数据库日志相关的监控指标。

用户可以自行使用开源组件对PostgreSQL日志进行分析。

追踪

PostgreSQL提供了对DTrace的支持,用户也可以使用采样探针分析PostgreSQL查询执行时的性能瓶颈。但此类数据仅在某些特定场景会用到,实用性一般,因此Pigsty不会针对数据库收集Trace数据。

接下来?

只有指标并不够,我们还需要将这些信息组织起来,才能构建出体系来。阅读 监控层级 了解更多信息

3 - Identity Management

All instances have Identity, which is the metadata associated with the instance that identifies it.

Figure : Identity information with Postgres service when using Consul service discovery

Identity parameters

[identity-parameters](. /… /… /config/7-pg-provision/# identity-parameters) is a unique identifier that must be defined for any cluster and instance.

| name | variables | type | description | |

|---|---|---|---|---|

| cluster | [pg_cluster](… /… /… /config/7-pg-provision/#pg_cluster) |

Core identity parameters | Cluster name, top-level namespace for resources within the cluster | |

| role | [pg_role](. /… /… /config/7-pg-provision/#pg_role) |

Core identity parameters | Instance role, primary, replica, offline, … |

|

| markers | [pg_seq](… /… /… /config/7-pg-provision/#pg_seq) |

Core identity parameters | Instance serial number, positive integer, unique within the cluster. | |

| instance | pg_instance |

derived identity parameter | ${pg_cluster}-${pg_seq} |

|

| service | pg_service |

derived identity parameters | ${pg_cluster}-${pg_role} |

Identity association

After naming the objects in the system, you also need to associate identity information to specific instances.

Identity information is business-given metadata, and the database instance itself is not aware of this identity information; it does not know who it serves, which business it is subordinate to, or what number of instances it is in the cluster.

Identity assignment can take many forms, and the most rudimentary way to associate identities is Operator’s memory: the DBA remembers in his mind that the database instance on IP address 10.2.3.4 is the one used for payments, while the database instance on the other one is used for user management. A better way to manage the identity of cluster members is through profile, or by using service discovery.

Pigsty offers both ways of identity management: based on [Consul](. /identity/#consul service discovery), versus [Profile](. /identity/#static file service discovery)

Parameters [prometheus_sd_method (consul|static)](… /… /… /config/4-meta/#prometheus_sd_method) controls this behavior.

consul: service discovery based on Consul, default configurationstatic: service discovery based on local configuration files

Pigsty recommends using consul service discovery, where the monitoring system automatically corrects the identity registered by the target instance when a Failover occurs on the server.

Consul service discovery

Pigsty by default uses Consul service discovery to manage the services in your environment.

All services in Pigsty are automatically registered to the DCS, so metadata is automatically corrected when database clusters are created, destroyed, or modified, and the monitoring system can automatically discover monitoring targets without the need to manually maintain the configuration. The monitoring system can automatically discover the monitoring targets, eliminating the need for manual configuration maintenance.

Users can also use the DNS and service discovery mechanism provided by Consul to achieve automatic DNS-based traffic switching.

Consul uses a Client/Server architecture, and there are 1 to 5 Consul Servers ranging from 1 to 5 in the whole environment for the actual metadata storage. The Consul Agent is deployed on all nodes to proxy the communication between the native services and the Consul Server. pigsty registers the services by default by means of the local Consul configuration file.

Service Registration

On each node, there is a consul agent running, and services are registered to the DCS by the consul agent via JSON configuration files.

The default location of the JSON configuration file is /etc/consul.d/, using the naming convention of svc-<service>.json, using postgres as an example.

{

"service": {

"name": "postgres",

"port": {{ pg_port }},

"tags": [

"{{ pg_role }}",

"{{ pg_cluster }}"

],

"meta": {

"type": "postgres",

"role": "{{ pg_role }}",

"seq": "{{ pg_seq }}",

"instance": "{{ pg_instance }}",

"service": "{{ pg_service }}",

"cluster": "{{ pg_cluster }}",

"version": "{{ pg_version }}"

},

"check": {

"tcp": "127.0.0.1:{{ pg_port }}",

"interval": "15s",

"timeout": "1s"

}

}

}

其中meta与tags部分是服务的元数据,存储有实例的身份信息。

服务查询

用户可以通过Consul提供的DNS服务,或者直接调用Consul API发现注册到Consul中的服务

使用DNS API查阅consul服务的方式,请参阅Consul文档。

图:查询

pg-bench-1上的pg_exporter服务。

服务发现

Prometheus会自动通过consul_sd_configs发现环境中的监控对象。同时带有pg和exporter标签的服务会自动被识别为抓取对象:

- job_name: pg

# https://prometheus.io/docs/prometheus/latest/configuration/configuration/#consul_sd_config

consul_sd_configs:

- server: localhost:8500

refresh_interval: 5s

tags:

- pg

- exporter

图:被Prometheus发现的服务,身份信息已关联至实例的指标维度上。

服务维护

有时候,因为数据库主从发生切换,导致注册的角色与数据库实例的实际角色出现偏差。这时候需要通过反熵过程处理这种异常。

基于Patroni的故障切换可以正常地通过回调逻辑修正注册的角色,但人工完成的角色切换则需要人工介入处理。

使用以下脚本可以自动检测并修复数据库的服务注册。建议在数据库实例上配置Crontab,或在元节点上设置定期巡检任务。

/pg/bin/pg-register $(pg-role)

静态文件服务发现

static服务发现依赖/etc/prometheus/targets/*.yml中的配置进行服务发现。采用这种方式的优势是不依赖Consul。

当Pigsty监控系统与外部管控方案集成时,这种模式对原系统的侵入性较小。但是缺点是,当集群内发生主从切换时,用户需要自行维护实例角色信息。手动维护时,可以根据以下命令从配置文件生成Prometheus所需的监控对象配置文件并载入生效。

详见 Prometheus服务发现。

./infra.yml --tags=prometheus_targtes,prometheus_reload

Pigsty默认生成的静态监控对象文件示例如下:

#==============================================================#

# File : targets/all.yml

# Ctime : 2021-02-18

# Mtime : 2021-02-18

# Desc : Prometheus Static Monitoring Targets Definition

# Path : /etc/prometheus/targets/all.yml

# Copyright (C) 2018-2021 Ruohang Feng

#==============================================================#

#======> pg-meta-1 [primary]

- labels: {cls: pg-meta, ins: pg-meta-1, ip: 10.10.10.10, role: primary, svc: pg-meta-primary}

targets: [10.10.10.10:9630, 10.10.10.10:9100, 10.10.10.10:9631, 10.10.10.10:9101]

#======> pg-test-1 [primary]

- labels: {cls: pg-test, ins: pg-test-1, ip: 10.10.10.11, role: primary, svc: pg-test-primary}

targets: [10.10.10.11:9630, 10.10.10.11:9100, 10.10.10.11:9631, 10.10.10.11:9101]

#======> pg-test-2 [replica]

- labels: {cls: pg-test, ins: pg-test-2, ip: 10.10.10.12, role: replica, svc: pg-test-replica}

targets: [10.10.10.12:9630, 10.10.10.12:9100, 10.10.10.12:9631, 10.10.10.12:9101]

#======> pg-test-3 [replica]

- labels: {cls: pg-test, ins: pg-test-3, ip: 10.10.10.13, role: replica, svc: pg-test-replica}

targets: [10.10.10.13:9630, 10.10.10.13:9100, 10.10.10.13:9631, 10.10.10.13:9101]

身份关联

无论是通过Consul服务发现,还是静态文件服务发现。最终的效果是实现身份信息与实例监控指标相互关联。

这一关联,是通过 监控指标 的维度标签实现的。

| 身份参数 | 维度标签 | 取值样例 |

|---|---|---|

pg_cluster |

cls |

pg-test |

pg_instance |

ins |

pg-test-1 |

pg_services |

svc |

pg-test-primary |

pg_role |

role |

primary |

node_ip |

ip |

10.10.10.11 |

阅读下一节 监控指标 ,了解这些指标是如何通过标签组织起来的。

4 - Metrics

Metrics lies in the core part of pigsty monitoring system.

指标形式

指标在形式上是可累加的,原子性的逻辑计量单元,可在时间段上进行更新与统计汇总。

指标通常以 带有维度标签的时间序列 的形式存在。举个例子,Pigsty沙箱中的pg:ins:qps_realtime指展示了所有实例的实时QPS。

pg:ins:qps_realtime{cls="pg-meta", ins="pg-meta-1", ip="10.10.10.10", role="primary"} 0

pg:ins:qps_realtime{cls="pg-test", ins="pg-test-1", ip="10.10.10.11", role="primary"} 327.6

pg:ins:qps_realtime{cls="pg-test", ins="pg-test-2", ip="10.10.10.12", role="replica"} 517.0

pg:ins:qps_realtime{cls="pg-test", ins="pg-test-3", ip="10.10.10.13", role="replica"} 0

用户可以对指标进行运算:求和、求导,聚合,等等。例如:

$ sum(pg:ins:qps_realtime) by (cls) -- 查询按集群聚合的 实时实例QPS

{cls="pg-meta"} 0

{cls="pg-test"} 844.6

$ avg(pg:ins:qps_realtime) by (cls) -- 查询每个集群中 所有实例的平均 实时实例QPS

{cls="pg-meta"} 0

{cls="pg-test"} 280

$ avg_over_time(pg:ins:qps_realtime[30m]) -- 过去30分钟内实例的平均QPS

pg:ins:qps_realtime{cls="pg-meta", ins="pg-meta-1", ip="10.10.10.10", role="primary"} 0

pg:ins:qps_realtime{cls="pg-test", ins="pg-test-1", ip="10.10.10.11", role="primary"} 130

pg:ins:qps_realtime{cls="pg-test", ins="pg-test-2", ip="10.10.10.12", role="replica"} 100

pg:ins:qps_realtime{cls="pg-test", ins="pg-test-3", ip="10.10.10.13", role="replica"} 0

指标模型

每一个指标(Metric),都是一类数据,通常会对应多个时间序列(time series)。同一个指标对应的不同时间序列通过维度进行区分。

指标 + 维度,可以具体定位一个时间序列。每一个时间序列都是由 (时间戳,取值)二元组构成的数组。

Pigsty采用Prometheus的指标模型,其逻辑概念可以用以下的SQL DDL表示。

-- 指标表,指标与时间序列构成1:n关系

CREATE TABLE metrics (

id INT PRIMARY KEY, -- 指标标识

name TEXT UNIQUE -- 指标名称,[...其他指标元数据,例如类型]

);

-- 时间序列表,每个时间序列都对应一个指标。

CREATE TABLE series (

id BIGINT PRIMARY KEY, -- 时间序列标识

metric_id INTEGER REFERENCES metrics (id), -- 时间序列所属的指标

dimension JSONB DEFAULT '{}' -- 时间序列带有的维度信息,采用键值对的形式表示

);

-- 时许数据表,保存最终的采样数据点。每个采样点都属于一个时间序列

CREATE TABLE series_data (

series_id BIGINT REFERENCES series(id), -- 时间序列标识

ts TIMESTAMP, -- 采样点时间戳

value FLOAT, -- 采样点指标值

PRIMARY KEY (series_id, ts) -- 每个采样点可以通过 所属时间序列 与 时间戳 唯一标识

);

这里我们以pg:ins:qps指标为例:

-- 样例指标数据

INSERT INTO metrics VALUES(1, 'pg:ins:qps'); -- 该指标名为 pg:ins:qps ,是一个 GAUGE。

INSERT INTO series VALUES -- 该指标包含有四个时间序列,通过维度标签区分

(1001, 1, '{"cls": "pg-meta", "ins": "pg-meta-1", "role": "primary", "other": "..."}'),

(1002, 1, '{"cls": "pg-test", "ins": "pg-test-1", "role": "primary", "other": "..."}'),

(1003, 1, '{"cls": "pg-test", "ins": "pg-test-2", "role": "replica", "other": "..."}'),

(1004, 1, '{"cls": "pg-test", "ins": "pg-test-3", "role": "replica", "other": "..."}');

INSERT INTO series_data VALUES -- 每个时间序列底层的采样点

(1001, now(), 1000), -- 实例 pg-meta-1 在当前时刻QPS为1000

(1002, now(), 1000), -- 实例 pg-test-1 在当前时刻QPS为1000

(1003, now(), 5000), -- 实例 pg-test-2 在当前时刻QPS为1000

(1004, now(), 5001); -- 实例 pg-test-3 在当前时刻QPS为5001

pg_up是一个指标,包含有4个时间序列。记录了整个环境中所有实例的存活状态。pg_up{ins": "pg-test-1", ...}是一个时间序列,记录了特定实例pg-test-1的存活状态

指标来源

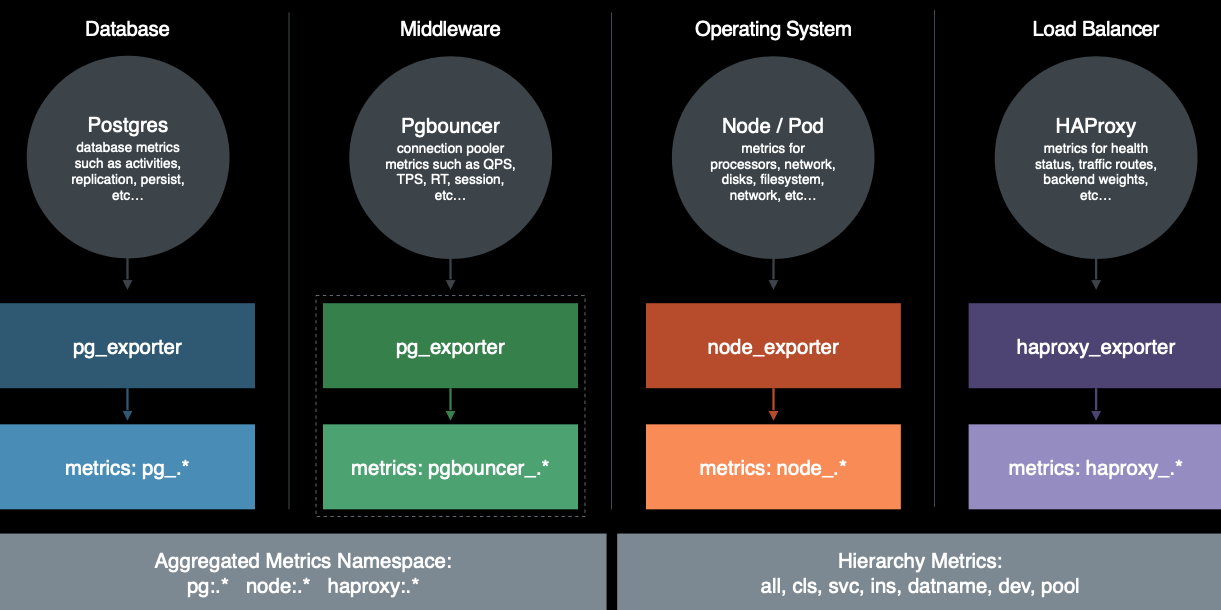

Pigsty的监控数据主要有四种主要来源: 数据库,连接池,操作系统,负载均衡器。通过相应的exporter对外暴露。

完整来源包括:

- PostgreSQL本身的监控指标

- PostgreSQL日志中的统计指标

- PostgreSQL系统目录信息

- Pgbouncer连接池中间价的指标

- PgExporter指标

- 数据库工作节点Node的指标

- 负载均衡器Haproxy指标

- DCS(Consul)工作指标

- 监控系统自身工作指标:Grafana,Prometheus,Nginx

- Blackbox探活指标

关于全部可用的指标清单,请查阅 参考-指标清单 一节

指标数量

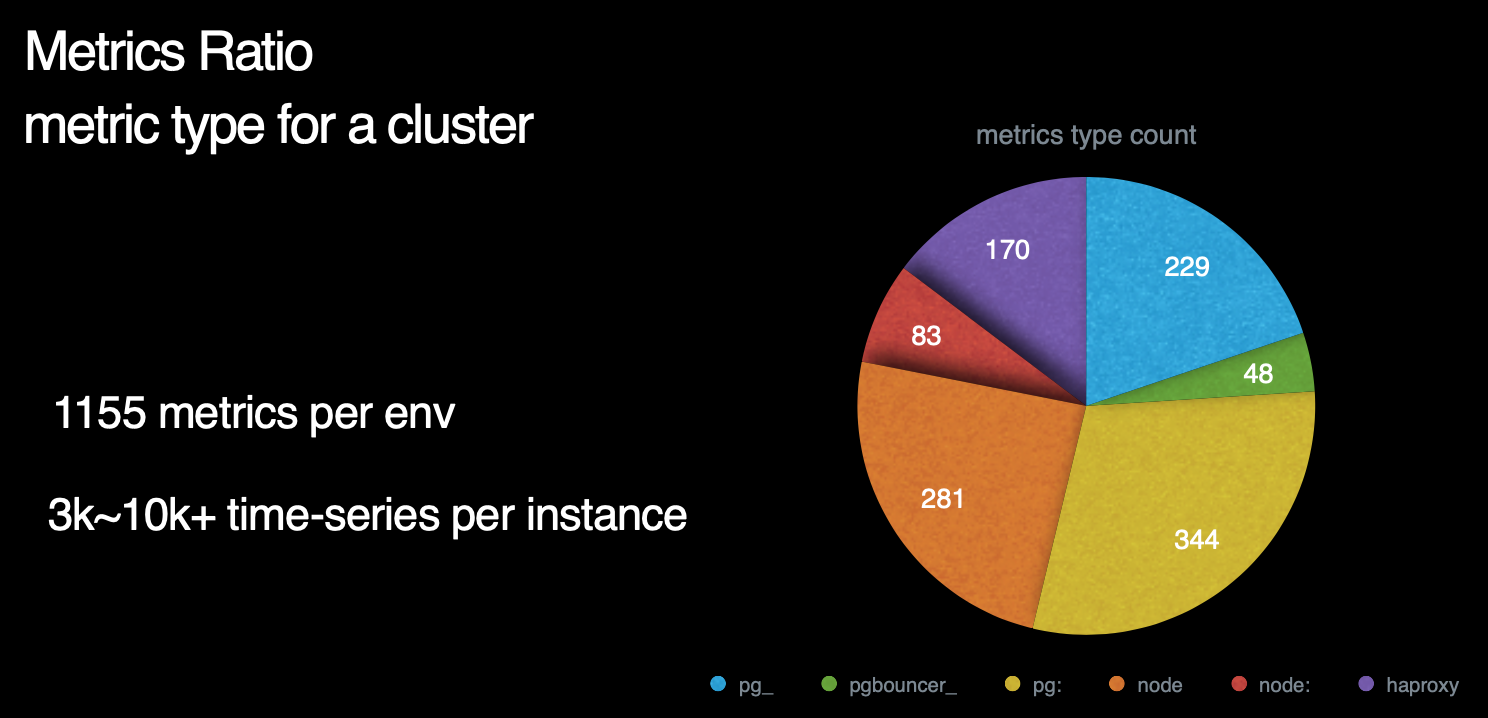

那么,Pigsty总共包含了多少指标呢? 这里是一副各个指标来源占比的饼图。我们可以看到,右侧蓝绿黄对应的部分是数据库及数据库相关组件所暴露的指标,而左下方红橙色部分则对应着机器节点相关指标。左上方紫色部分则是负载均衡器的相关指标。

数据库指标中,与postgres本身有关的原始指标约230个,与中间件有关的原始指标约50个,基于这些原始指标,Pigsty又通过层次聚合与预计算,精心设计出约350个与DB相关的衍生指标。

因此,对于每个数据库集群来说,单纯针对数据库及其附件的监控指标就有621个。而机器原始指标281个,衍生指标83个一共364个。加上负载均衡器的170个指标,我们总共有接近1200类指标。

注意,这里我们必须辨析一下指标(metric)与时间序列( Time-series)的区别。

这里我们使用的量词是 类 而不是个 。 因为一个指标可能对应多个时间序列。例如一个数据库中有20张表,那么 pg_table_index_scan 这样的指标就会对应有20个对应的时间序列。

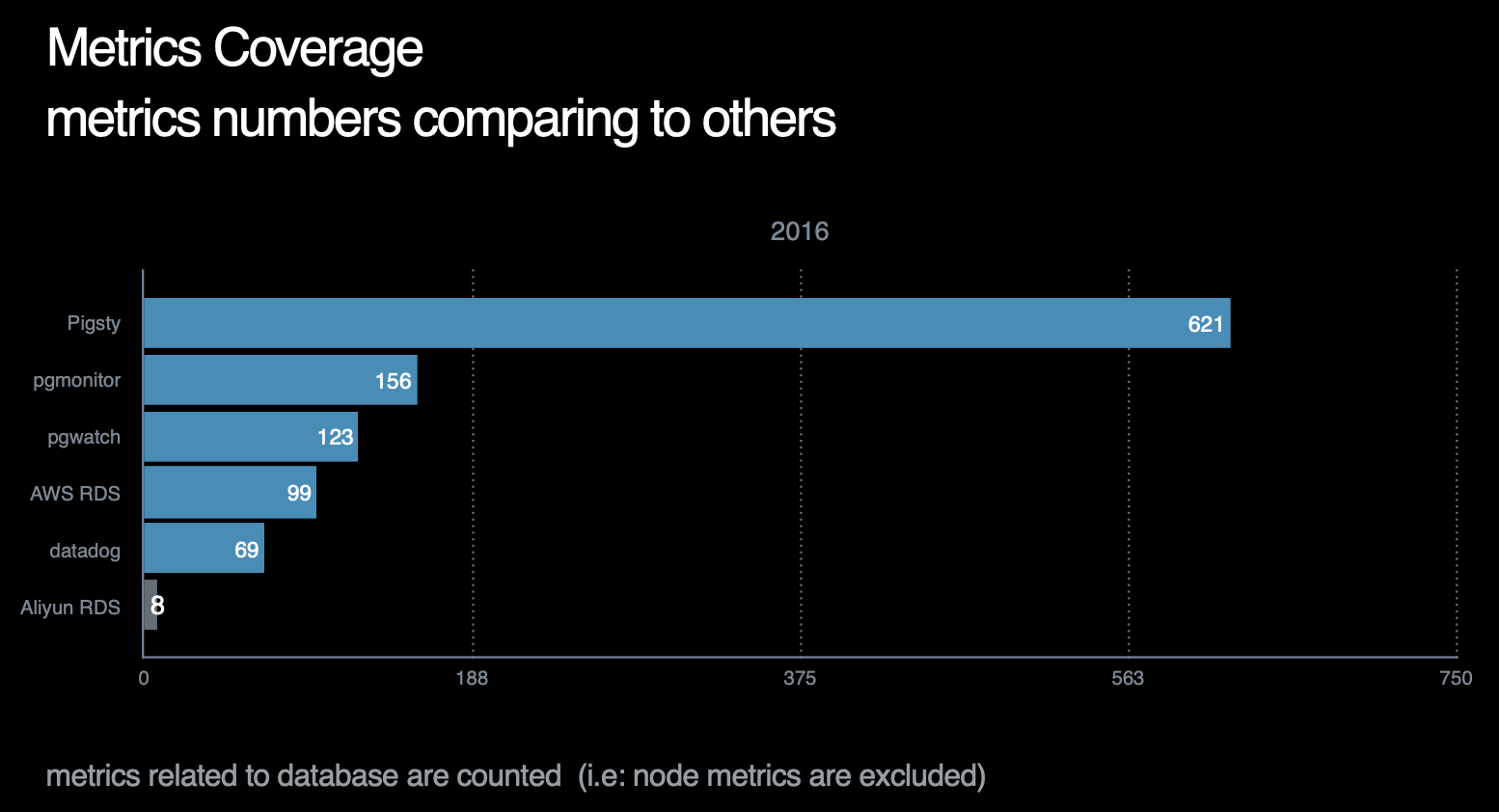

截止至2021年,Pigsty的指标覆盖率在所有作者已知的开源/商业监控系统中一骑绝尘,详情请参考横向对比。

指标层次

Pigsty还会基于现有指标进行加工处理,产出 衍生指标(Derived Metrics) 。

例如指标可以按照不同的层次进行聚合

从原始监控时间序列数据,到最终的成品图表,中间还有着若干道加工工序。

这里以TPS指标的衍生流程为例。

原始数据是从Pgbouncer抓取得到的事务计数器,集群中有四个实例,而每个实例上又有两个数据库,所以一个实例总共有8个DB层次的TPS指标。

而下面的图表,则是整个集群内每个实例的QPS横向对比,因此在这里,我们使用预定义的规则,首先对原始事务计数器求导获取8个DB层面的TPS指标,然后将8个DB层次的时间序列聚合为4个实例层次的TPS指标,最后再将这四个实例级别的TPS指标聚合为集群层次的TPS指标。

Pigsty共定义了360类衍生聚合指标,后续还会不断增加。衍生指标定义规则详见 参考-衍生指标

特殊指标

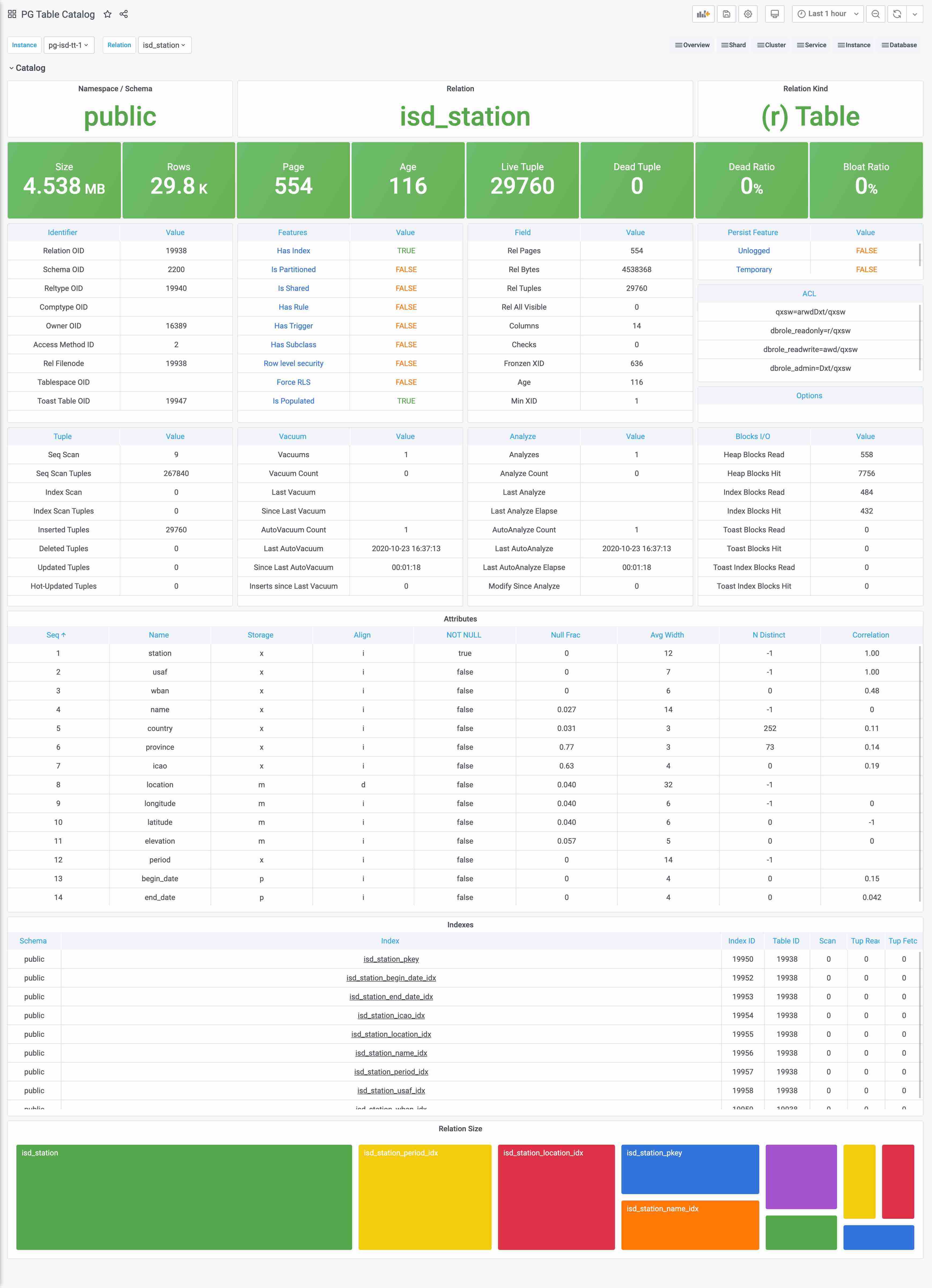

目录(Catalog) 是一种特殊的指标

Catalog与Metrics比较相似但又不完全相同,边界比较模糊。最简单的例子,一个表的页面数量和元组数量,应该算Catalog还是算Metrics?

跳过这种概念游戏,实践上Catalog和Metrics主要的区别是,Catalog里的信息通常是不怎么变化的,比如表的定义之类的,如果也像Metrics这样比如几秒抓一次,显然是一种浪费。所以我们会将这一类偏静态的信息划归Catalog。

Catalog主要由定时任务(例如巡检)负责抓取,而不由Prometheus采集。一些特别重要的Catalog信息,例如pg_class中的一些信息,也会转换为指标被Prometheus所采集。

小结

了解了Pigsty指标后,不妨了解一下Pigsty的 报警系统 是如何将这些指标数据用于实际生产用途的。

5 - Alert Rules

Alerts are critical to daily fault response and to improve system availability.

Missed alarms lead to reduced availability and false alarms lead to reduced sensitivity, necessitating a prudent design of alarm rules.

- Reasonable definition of alarm levels and the corresponding processing flow

- Reasonable definition of alarm indicators, removal of duplicate alarm items, and supplementation of missing alarm items

- Scientific configuration of alarm thresholds based on historical monitoring data to reduce the false alarm rate.

- Reasonable sparse special case rules to eliminate false alarms caused by maintenance work, ETL, and offline query.

Alert taxonomy

**By Urgency **

-

P0: FATAL: Incidents that have significant off-site impact and require urgent intervention to handle. For example, main library down, replication outage. (Serious incident)

-

P1: ERROR: Incidents with minor off-site impact, or incidents with redundant processing, requiring response processing at the minute level. (Incident)

-

P2: WARNING: imminent impact, let loose may worsen at the hourly level, response is required at the hourly level. (Incident of concern)

-

P3: NOTICE: Needs attention, will not have an immediate impact, but needs to be responded to within the day level. (Deviation phenomena)

By Level

- DBA will only pay special attention to CPU and disk alarms, and the rest is the responsibility of operation and maintenance.

- Database level: Alarms of database itself, DBA focus on. This is generated by PG, PGB, and Exporter’s own monitoring metrics.

- Application level: Application alarms are the responsibility of the business side itself, but DBA will set alarms for business metrics such as QPS, TPS, Rollback, Seasonality, etc.

By Metric Type

- Errors: PG Down, PGB Down, Exporter Down, Stream Replication Outage, Single Set Cluster Multi-Master

- Traffic: QPS, TPS, Rollback, Seasonality

- Latency: Average Response Time, Replication Latency

- Saturation: Connection Stacking, Number of Idle Transactions, CPU, Disk, Age (Transaction Number), Buffers

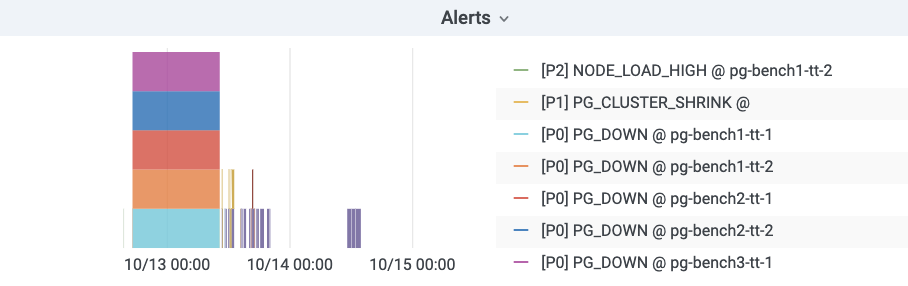

Alarm Visualization

Pigsty uses bar graphs to present alarm information. The horizontal axis represents the time period and a color bar represents the alarm event. Only alarms that are in the Firing state are displayed in the alarm chart.

Alarm rules in detail

Alarm rules can be roughly divided into four categories by type: error, delay, saturation, and flow. Among them.

- Errors: mainly concerned with the survivability (Aliveness) of each component, as well as network outages, brain fractures and other abnormalities, the level is usually higher (P0|P1).

- Latency: mainly concerned with query response time, replication latency, slow queries, and long transactions.

- Saturation: mainly focus on CPU, disk (these two belong to system monitoring but very important for DB so incorporate), connection pool queue, number of database back-end connections, age (essentially the saturation of available thing numbers), SSD life, etc.

- Traffic: QPS, TPS, Rollback (traffic is usually related to business metrics belongs to business monitoring, but incorporated because it is important for DB), seasonality of QPS, burst of TPS.

Error Alarm

Postgres instance downtime distinguishes between master and slave, master library downtime triggers P0 alarm, slave library downtime triggers P1 alarm. Both require immediate intervention, but slave libraries usually have multiple instances and can be downgraded to query on the master library, which has a higher processing margin, so slave library downtime is designated as P1.

# primary|master instance down for 1m triggers a P0 alert

- alert: PG_PRIMARY_DOWN

expr: pg_up{instance=~'.*master.*'}

for: 1m

labels:

team: DBA

urgency: P0

annotations:

summary: "P0 Postgres Primary Instance Down: {{$labels.instance}}"

description: "pg_up = {{ $value }} {{$labels.instance}}"

# standby|slave instance down for 1m triggers a P1 alert

- alert: PG_STANDBY_DOWN

expr: pg_up{instance!~'.*master.*'}

for: 1m

labels:

team: DBA

urgency: P1

annotations:

summary: "P1 Postgres Standby Instance Down: {{$labels.instance}}"

description: "pg_up = {{ $value }} {{$labels.instance}}"

Pgbouncer实例因为与Postgres实例一一对应,其存活性报警规则与Postgres统一。

# primary pgbouncer down for 1m triggers a P0 alert

- alert: PGB_PRIMARY_DOWN

expr: pgbouncer_up{instance=~'.*master.*'}

for: 1m

labels:

team: DBA

urgency: P0

annotations:

summary: "P0 Pgbouncer Primary Instance Down: {{$labels.instance}}"

description: "pgbouncer_up = {{ $value }} {{$labels.instance}}"

# standby pgbouncer down for 1m triggers a P1 alert

- alert: PGB_STANDBY_DOWN

expr: pgbouncer_up{instance!~'.*master.*'}

for: 1m

labels:

team: DBA

urgency: P1

annotations:

summary: "P1 Pgbouncer Standby Instance Down: {{$labels.instance}}"

description: "pgbouncer_up = {{ $value }} {{$labels.instance}}"

Prometheus Exporter的存活性定级为P1,虽然Exporter宕机本身并不影响数据库服务,但这通常预示着一些不好的情况,而且监控数据的缺失也会产生某些相应的报警。Exporter的存活性是通过Prometheus自己的up指标检测的,需要注意某些单实例多DB的特例。

# exporter down for 1m triggers a P1 alert

- alert: PG_EXPORTER_DOWN

expr: up{port=~"(9185|9127)"} == 0

for: 1m

labels:

team: DBA

urgency: P1

annotations:

summary: "P1 Exporter Down: {{$labels.instance}} {{$labels.port}}"

description: "port = {{$labels.port}}, {{$labels.instance}}"

所有存活性检测的持续时间阈值设定为1分钟,对15s的默认采集周期而言是四个样本点。常规的重启操作通常不会触发存活性报警。

延迟报警

与复制延迟有关的报警有三个:复制中断,复制延迟高,复制延迟异常,分别定级为P1, P2, P3

-

其中复制中断是一种错误,使用指标:

pg_repl_state_count{state="streaming"}进行判断,当前streaming状态的从库如果数量发生负向变动,则触发break报警。walsender会决定复制的状态,从库直接断开会产生此现象,缓冲区出现积压时会从streaming进入catchup状态也会触发此报警。此外,采用-Xs手工制作备份结束时也会产生此报警,此报警会在10分钟后自动Resolve。复制中断会导致客户端读到陈旧的数据,具有一定的场外影响,定级为P1。 -

复制延迟可以使用延迟时间或者延迟字节数判定。以延迟字节数为权威指标。常规状态下,复制延迟时间在百毫秒量级,复制延迟字节在百KB量级均属于正常。目前采用的是5s,15s的时间报警阈值。根据历史经验数据,这里采用了时间8秒与字节32MB的阈值,大致报警频率为每天个位数个。延迟时间更符合直觉,所以采用8s的P2报警,但并不是所有的从库都能有效取到该指标所以使用32MB的字节阈值触发P3报警补漏。

-

特例:

antispam,stats,coredb均经常出现复制延迟。

# replication break for 1m triggers a P0 alert. auto-resolved after 10 minutes.

- alert: PG_REPLICATION_BREAK

expr: pg_repl_state_count{state="streaming"} - (pg_repl_state_count{state="streaming"} OFFSET 10m) < 0

for: 1m

labels:

team: DBA

urgency: P0

annotations:

summary: "P0 Postgres Streaming Replication Break: {{$labels.instance}}"

description: "delta = {{ $value }} {{$labels.instance}}"

# replication lag greater than 8 second for 3m triggers a P1 alert

- alert: PG_REPLICATION_LAG

expr: pg_repl_replay_lag{application_name="walreceiver"} > 8

for: 3m

labels:

team: DBA

urgency: P1

annotations:

summary: "P1 Postgres Replication Lagged: {{$labels.instance}}"

description: "lag = {{ $value }} seconds, {{$labels.instance}}"

# replication diff greater than 32MB for 5m triggers a P3 alert

- alert: PG_REPLICATOIN_DIFF

expr: pg_repl_lsn{application_name="walreceiver"} - pg_repl_replay_lsn{application_name="walreceiver"} > 33554432

for: 5m

labels:

team: DBA

urgency: P3

annotations:

summary: "P3 Postgres Replication Diff Deviant: {{$labels.instance}}"

description: "delta = {{ $value }} {{$labels.instance}}"

饱和度报警

饱和度指标主要资源,包含很多系统级监控的指标。主要包括:CPU,磁盘(这两个属于系统监控但对于DB非常重要所以纳入),连接池排队,数据库后端连接数,年龄(本质是可用事物号的饱和度),SSD寿命等。

堆积检测

堆积主要包含两类指标,一方面是PG本身的后端连接数与活跃连接数,另一方面是连接池的排队情况。

PGB排队是决定性的指标,它代表用户端可感知的阻塞已经出现,因此,配置排队超过15持续1分钟触发P0报警。

# more than 8 client waiting in queue for 1 min triggers a P0 alert

- alert: PGB_QUEUING

expr: sum(pgbouncer_pool_waiting_clients{datname!="pgbouncer"}) by (instance,datname) > 8

for: 1m

labels:

team: DBA

urgency: P0

annotations:

summary: "P0 Pgbouncer {{ $value }} Clients Wait in Queue: {{$labels.instance}}"

description: "waiting clients = {{ $value }} {{$labels.instance}}"

后端连接数是一个重要的报警指标,如果后端连接持续达到最大连接数,往往也意味着雪崩。连接池的排队连接数也能反映这种情况,但不能覆盖应用直连数据库的情况。后端连接数的主要问题是它与连接池关系密切,连接池在短暂堵塞后会迅速打满后端连接,但堵塞恢复后这些连接必须在默认约10min的Timeout后才被释放。因此收到短暂堆积的影响较大。同时外晚上1点备份时也会出现这种情况,容易产生误报。

注意后端连接数与后端活跃连接数不同,目前报警使用的是活跃连接数。后端活跃连接数通常在0~1,一些慢库在十几左右,离线库可能会达到20~30。但后端连接/进程数(不管活跃不活跃),通常均值可达50。后端连接数更为直观准确。

对于后端连接数,这里使用两个等级的报警:超过90持续3分钟P1,以及超过80持续10分钟P2,考虑到通常数据库最大连接数为100。这样做可以以尽可能低的误报率检测到雪崩堆积。

# num of backend exceed 90 for 3m

- alert: PG_BACKEND_HIGH

expr: sum(pg_db_numbackends) by (node) > 90

for: 3m

labels:

team: DBA

urgency: P1

annotations:

summary: "P1 Postgres Backend Number High: {{$labels.instance}}"

description: "numbackend = {{ $value }} {{$labels.instance}}"

# num of backend exceed 80 for 10m (avoid pgbouncer jam false alert)

- alert: PG_BACKEND_WARN

expr: sum(pg_db_numbackends) by (node) > 80

for: 10m

labels:

team: DBA

urgency: P2

annotations:

summary: "P2 Postgres Backend Number Warn: {{$labels.instance}}"

description: "numbackend = {{ $value }} {{$labels.instance}}"

空闲事务

目前监控使用IDEL In Xact的绝对数量作为报警条件,其实 Idle In Xact的最长持续时间可能会更有意义。因为这种现象其实已经被后端连接数覆盖了。长时间的空闲是我们真正关注的,因此这里使用所有空闲事务中最高的闲置时长作为报警指标。设置3分钟为P2报警阈值。经常出现IDLE的非Offline库有:moderation, location, stats,sms, device, moderationdevice

# max idle xact duration exceed 3m

- alert: PG_IDLE_XACT

expr: pg_activity_max_duration{instance!~".*offline.*", state=~"^idle in transaction.*"} > 180

for: 3m

labels:

team: DBA

urgency: P2

annotations:

summary: "P2 Postgres Long Idle Transaction: {{$labels.instance}}"

description: "duration = {{ $value }} {{$labels.instance}}"

资源报警

CPU, 磁盘,AGE

默认清理年龄为2亿,超过10Y报P1,既留下了充分的余量,又不至于让人忽视。

# age wrap around (progress in half 10Y) triggers a P1 alert

- alert: PG_XID_WRAP

expr: pg_database_age{} > 1000000000

for: 3m

labels:

team: DBA

urgency: P1

annotations:

summary: "P1 Postgres XID Wrap Around: {{$labels.instance}}"

description: "age = {{ $value }} {{$labels.instance}}"

磁盘和CPU由运维配置,不变

流量

因为各个业务的负载情况不一,为流量指标设置绝对值是相对困难的。这里只对TPS和Rollback设置绝对值指标。而且较为宽松。

Rollback OPS超过4则发出P3警告,TPS超过24000发P2,超过30000发P1

# more than 30k TPS lasts for 1m triggers a P1 (pgbouncer bottleneck)

- alert: PG_TPS_HIGH

expr: rate(pg_db_xact_total{}[1m]) > 30000

for: 1m

labels:

team: DBA

urgency: P1

annotations:

summary: "P1 Postgres TPS High: {{$labels.instance}} {{$labels.datname}}"

description: "TPS = {{ $value }} {{$labels.instance}}"

# more than 24k TPS lasts for 3m triggers a P2

- alert: PG_TPS_WARN

expr: rate(pg_db_xact_total{}[1m]) > 24000

for: 3m

labels:

team: DBA

urgency: P2

annotations:

summary: "P2 Postgres TPS Warning: {{$labels.instance}} {{$labels.datname}}"

description: "TPS = {{ $value }} {{$labels.instance}}"

# more than 4 rollback per seconds lasts for 5m

- alert: PG_ROLLBACK_WARN

expr: rate(pg_db_xact_rollback{}[1m]) > 4

for: 5m

labels:

team: DBA

urgency: P2

annotations:

summary: "P2 Postgres Rollback Warning: {{$labels.instance}}"

description: "rollback per sec = {{ $value }} {{$labels.instance}}"

QPS的指标与业务高度相关,因此不适合配置绝对值,可以为QPS突增配置一个报警项

短时间(和10分钟)前比突增30%会触发一个P2警报,同时避免小QPS下的突发流量,设置一个绝对阈值10k

# QPS > 10000 and have a 30% inc for 3m triggers P2 alert

- alert: PG_QPS_BURST

expr: sum by(datname,instance)(rate(pgbouncer_stat_total_query_count{datname!="pgbouncer"}[1m]))/sum by(datname,instance) (rate(pgbouncer_stat_total_query_count{datname!="pgbouncer"}[1m] offset 10m)) > 1.3 and sum by(datname,instance) (rate(pgbouncer_stat_total_query_count{datname!="pgbouncer"}[1m])) > 10000

for: 3m

labels:

team: DBA

urgency: P1

annotations:

summary: "P2 Pgbouncer QPS Burst 30% and exceed 10000: {{$labels.instance}}"

description: "qps = {{ $value }} {{$labels.instance}}"

Prometheus报警规则

完整的报警规则详见:参考-报警规则