Provisioning

Concepts about pigsty provisioning systems

By Provisioning Solution, we mean a system that delivers database services and monitoring systems to users.

Provisioning Solution is not a database, but a database factory.

The user submits a configuration to the provisioning system, and the provisioning system creates the required database cluster in the environment according to the user’s desired specifications.

This is more similar to submitting a YAML file to Kubernetes to create the various resources required.

Defining a database cluster

For example, the following configuration information declares a set of PostgreSQL database clusters named pg-test.

#-----------------------------

# cluster: pg-test

#-----------------------------

pg-test: # define cluster named 'pg-test'

# - cluster members - #

hosts:

10.10.10.11: {pg_seq: 1, pg_role: primary, ansible_host: node-1}

10.10.10.12: {pg_seq: 2, pg_role: replica, ansible_host: node-2}

10.10.10.13: {pg_seq: 3, pg_role: offline, ansible_host: node-3}

# - cluster configs - #

vars:

# basic settings

pg_cluster: pg-test # define actual cluster name

pg_version: 13 # define installed pgsql version

node_tune: tiny # tune node into oltp|olap|crit|tiny mode

pg_conf: tiny.yml # tune pgsql into oltp/olap/crit/tiny mode

# business users, adjust on your own needs

pg_users:

- name: test # example production user have read-write access

password: test # example user's password

roles: [dbrole_readwrite] # dborole_admin|dbrole_readwrite|dbrole_readonly|dbrole_offline

pgbouncer: true # production user that access via pgbouncer

comment: default test user for production usage

pg_databases: # create a business database 'test'

- name: test # use the simplest form

pg_default_database: test # default database will be used as primary monitor target

# proxy settings

vip_mode: l2 # enable/disable vip (require members in same LAN)

vip_address: 10.10.10.3 # virtual ip address

vip_cidrmask: 8 # cidr network mask length

vip_interface: eth1 # interface to add virtual ip

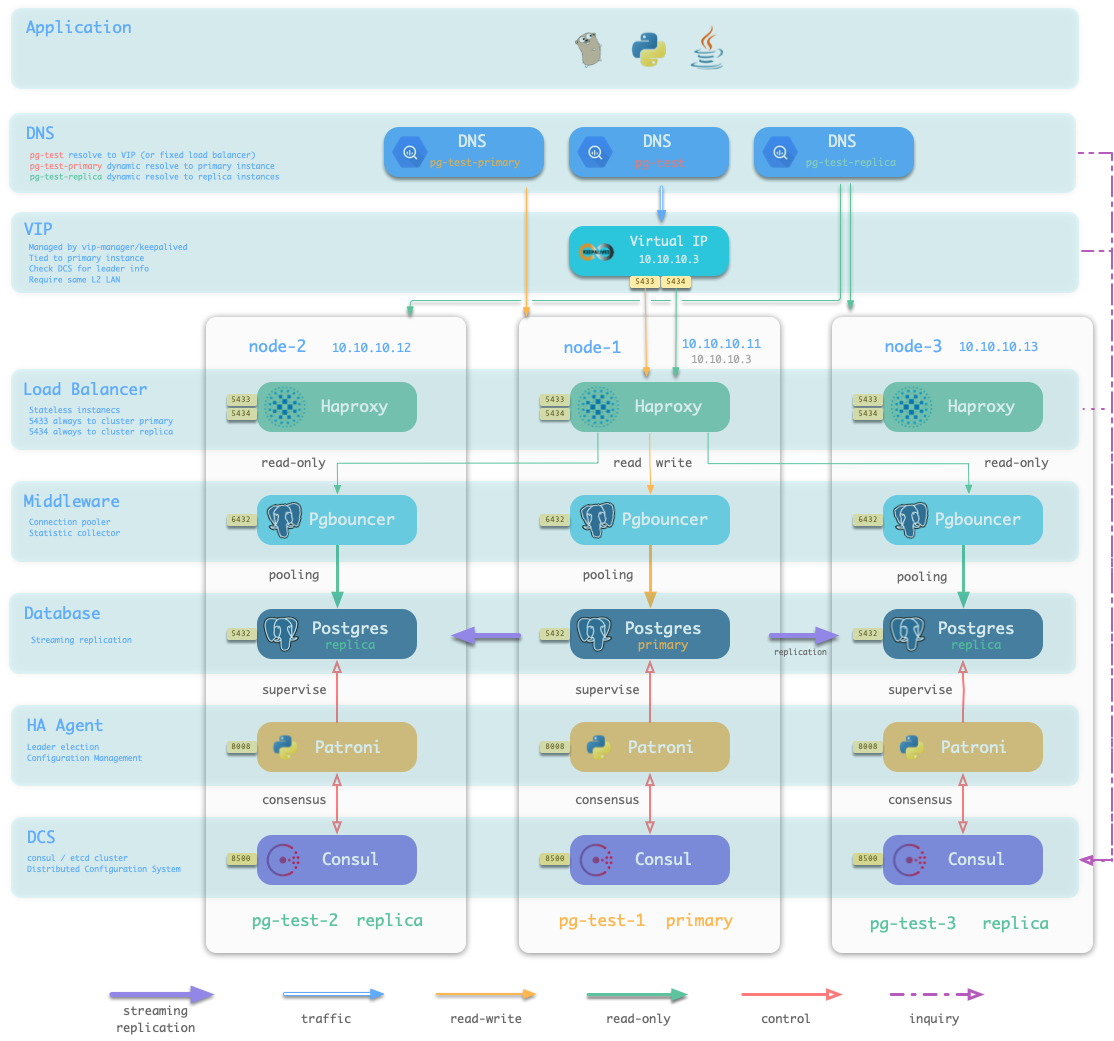

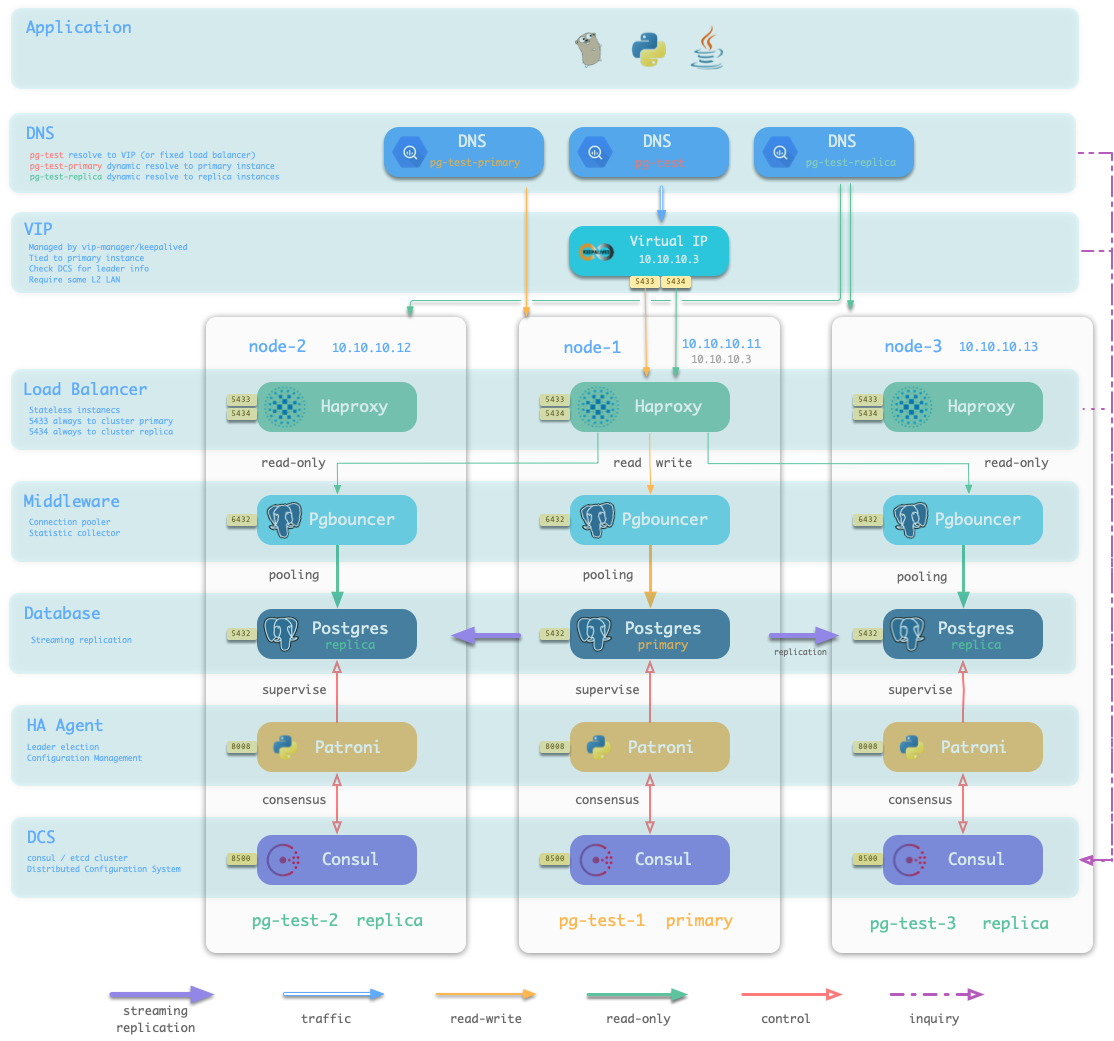

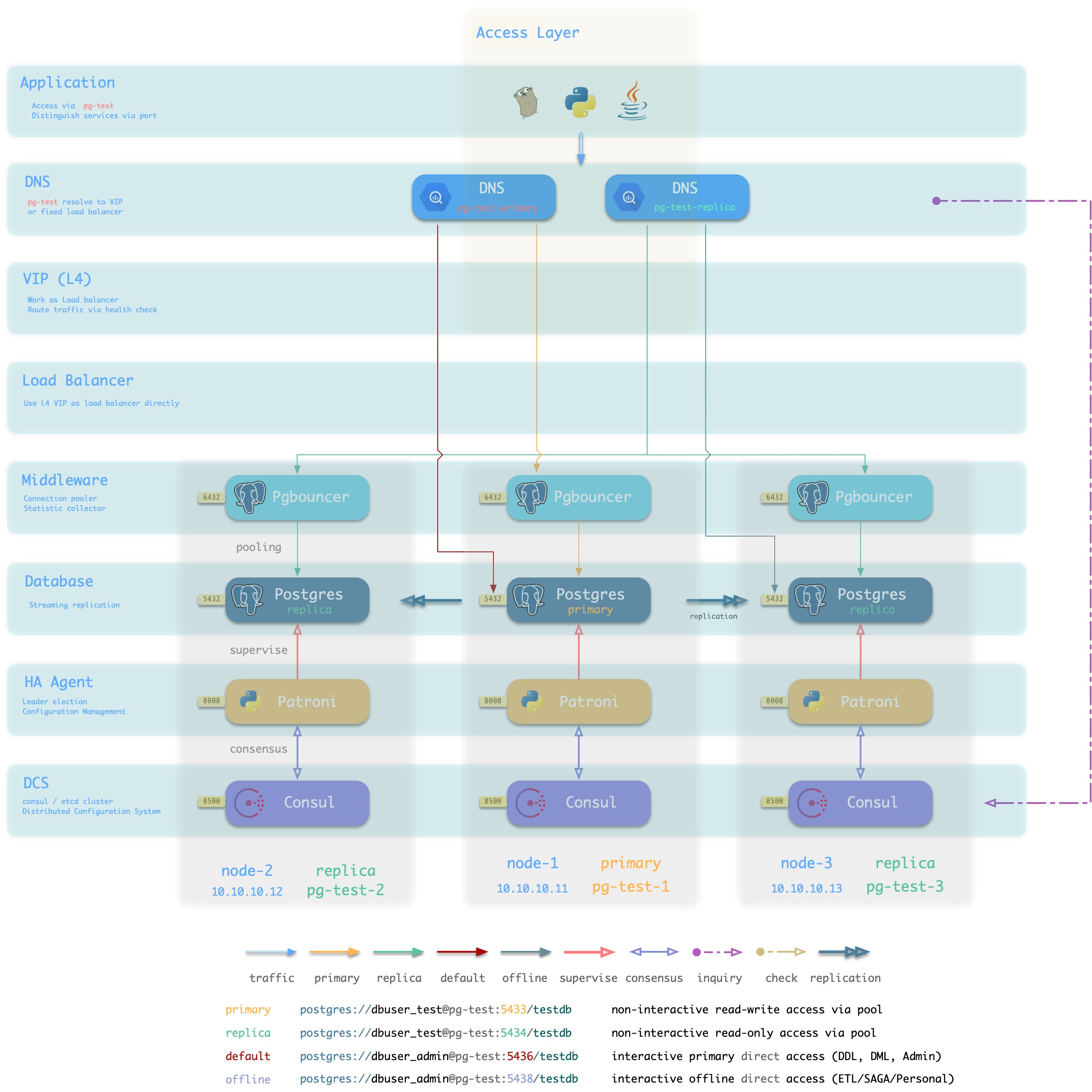

When executing database provisioning script . /pgsql.yml, the provisioning system will generate a one-master-two-slave PostgreSQL cluster pg-test on the three machines 10.10.10.11, 10.10.10.12, and 10.10.10.13, as defined in the manifest. And create a user and database named test. At the same time, Pigsty will also declare a 10.10.10.3 VIP binding on top of the cluster’s master library upon request. The structure is shown in the figure below.

Defining the infrastructure

The user is able to define not only the database cluster, but also the entire infrastructure.

Pigsty implements a complete representation of the database runtime environment with 154 variables.

For detailed configurable items, please refer to the Configuration Guide

Responsibilities of the provisioning scheme

The provisioning solution is usually only responsible for the creation of the cluster. Once the cluster is created, the day-to-day management should be the responsibility of the control platform.

However, Pigsty does not currently include a control platform component, so a simple resource recovery and destruction script is provided and can also be used for resource updates and management. However, it is not the job of the provisioning solution to do this.

1 - DB Access

How to access database?

Database access methods

Users can access the database services in several ways.

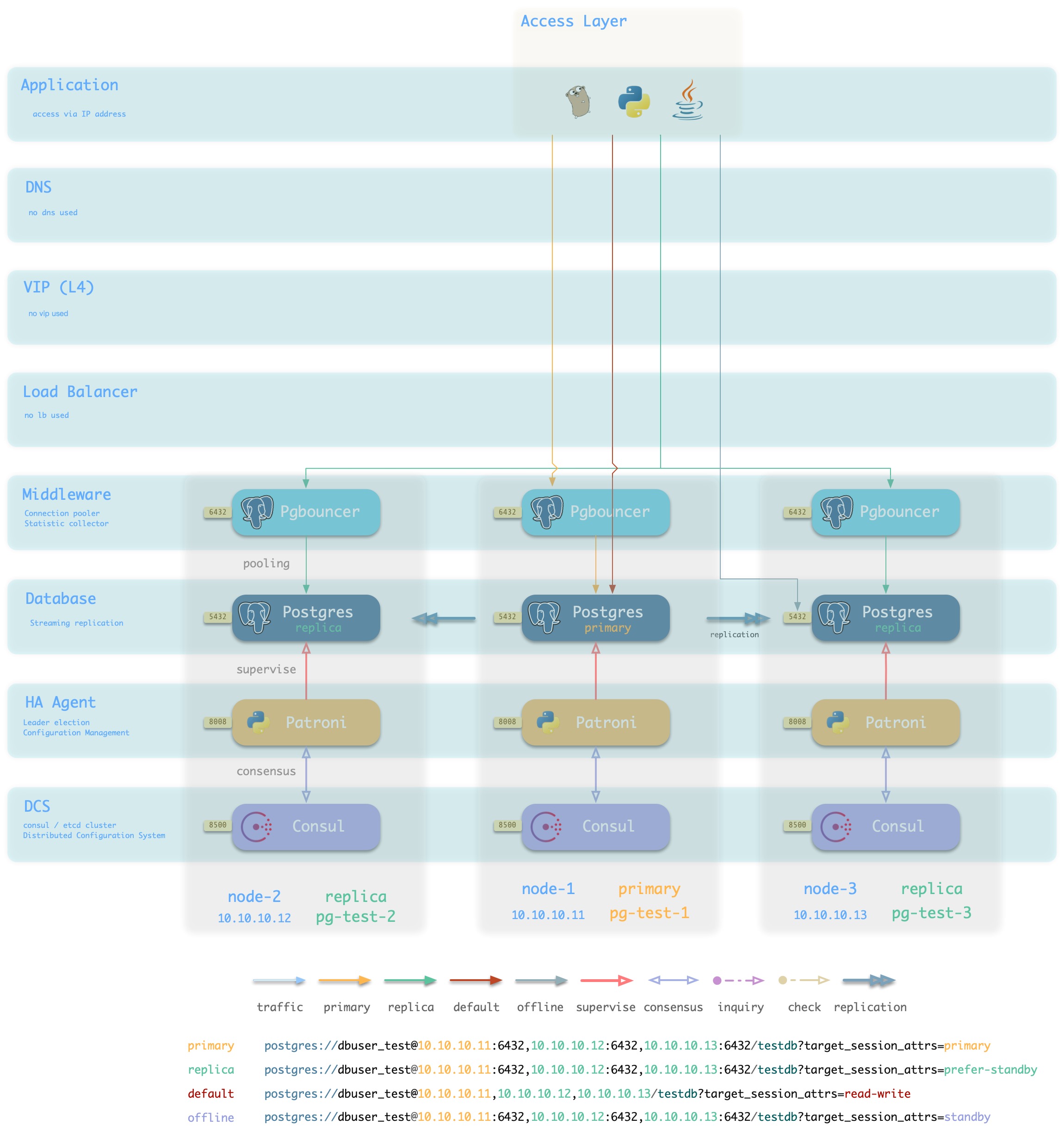

At the cluster level, users can access the [four default services] provided by the cluster via cluster domain + service port (. /service#default services), which Pigsty strongly recommends. Of course users can also bypass the domain name and access the database cluster directly using the cluster’s VIP (L2 or L4).

At the instance level, users can connect directly to the Postgres database via the node IP/domain name + port 5432, or they can use port 6432 to access the database via Pgbouncer. Services provided by the cluster to which the instance belongs can also be accessed via Haproxy via 5433~543x.

How the database is accessed ultimately depends on the traffic access scheme used by the database.

Typical access scheme

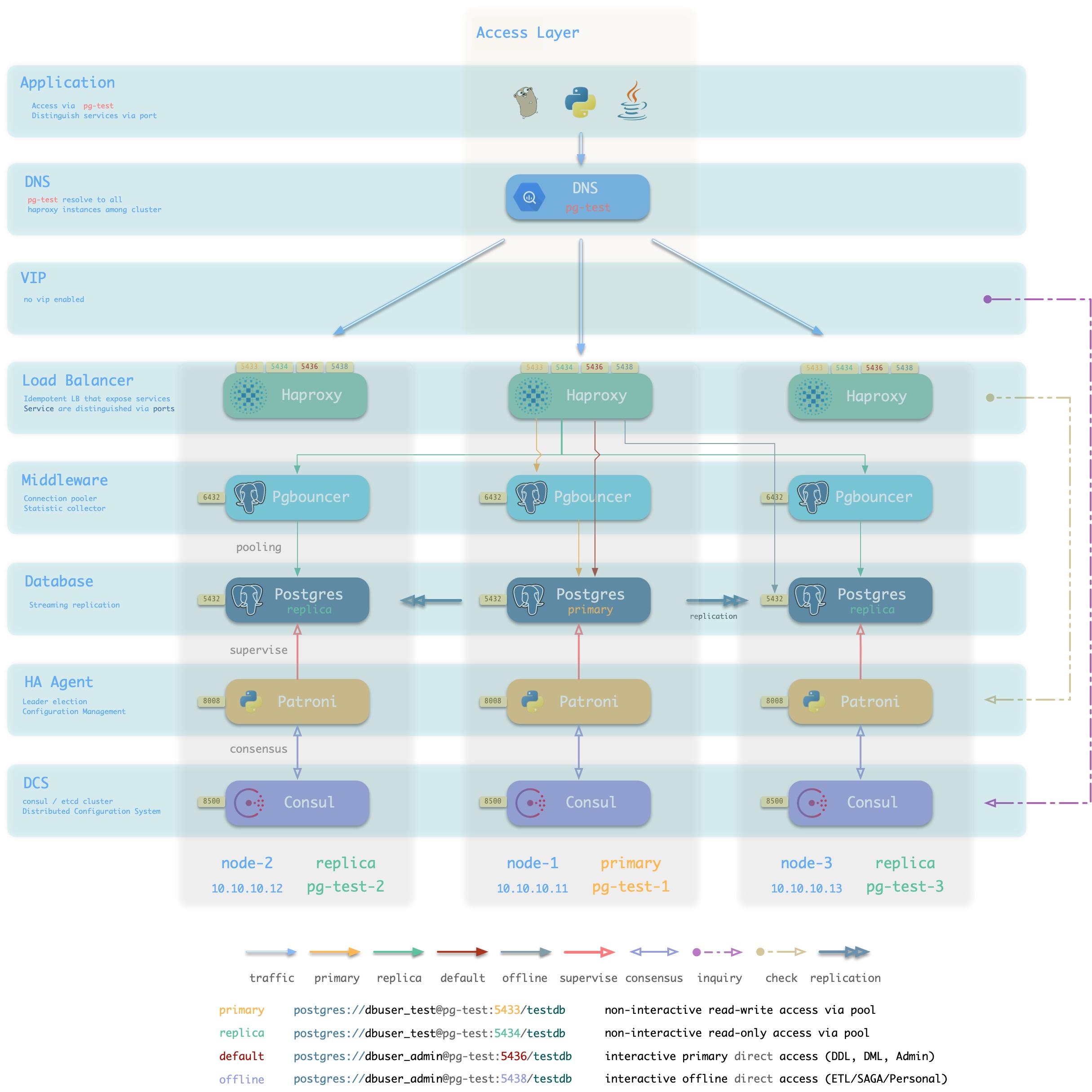

Pigsty recommends using a Haproxy-based access scheme (1/2), or in production environments with infrastructure support, an L4VIP (or equivalent load balancing service) based access scheme (3) can be used.

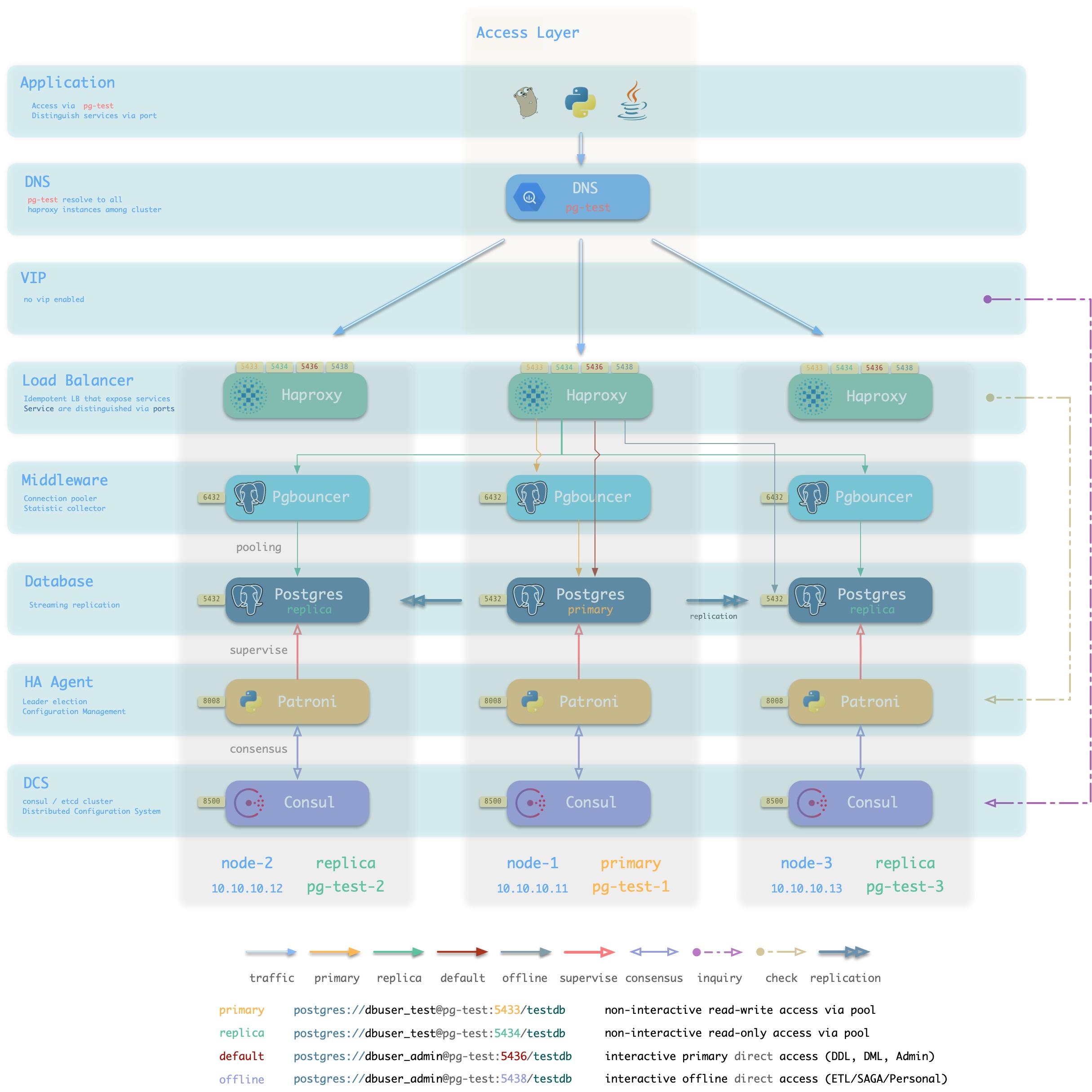

DNS + Haproxy

方案简介

标准高可用接入方案,系统无单点。灵活性,适用性,性能的最佳平衡点。

集群中的Haproxy采用Node Port的方式统一对外暴露 服务。每个Haproxy都是幂等的实例,提供完整的负载均衡与服务分发功能。Haproxy部署于每一个数据库节点上,因此整个集群的每一个成员在使用效果上都是幂等的。(例如访问任何一个成员的5433端口都会连接至主库连接池,访问任意成员的5434端口都会连接至某个从库的连接池)

Haproxy本身的可用性通过幂等副本实现,每一个Haproxy都可以作为访问入口,用户可以使用一个、两个、多个,所有Haproxy实例,每一个Haproxy提供的功能都是完全相同的。

用户需要自行确保应用能够访问到任意一个健康的Haproxy实例。作为最朴素的一种实现,用户可以将数据库集群的DNS域名解析至若干Haproxy实例,并启用DNS轮询响应。而客户端可以选择完全不缓存DNS,或者使用长连接并实现建立连接失败后重试的机制。又或者参考方案2,在架构侧通过额外的L2/L4 VIP确保Haproxy本身的高可用。

方案优越性

-

无单点,高可用

-

VIP固定绑定至主库,可以灵活访问

方案局限性

-

多一跳

-

Client IP地址丢失,部分HBA策略无法正常生效

-

Haproxy本身的高可用通过幂等副本,DNS轮询与客户端重连实现

DNS应有轮询机制,客户端应当使用长连接,并有建连失败重试机制。以便单Haproxy故障时可以自动漂移至集群中的其他Haproxy实例。如果无法做到这一点,可以考虑使用接入方案2,使用L2/L4 VIP确保Haproxy高可用。

方案示意

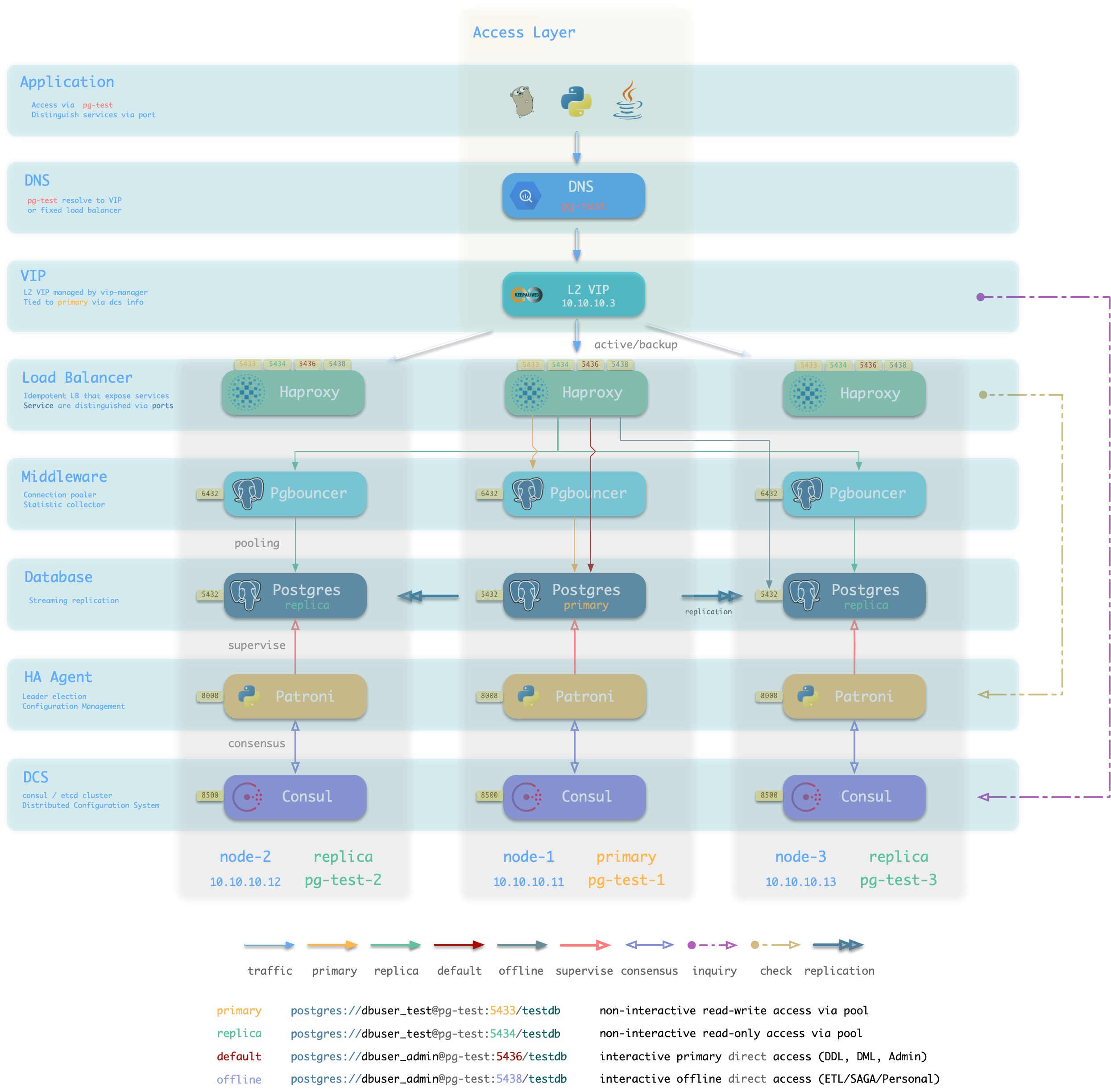

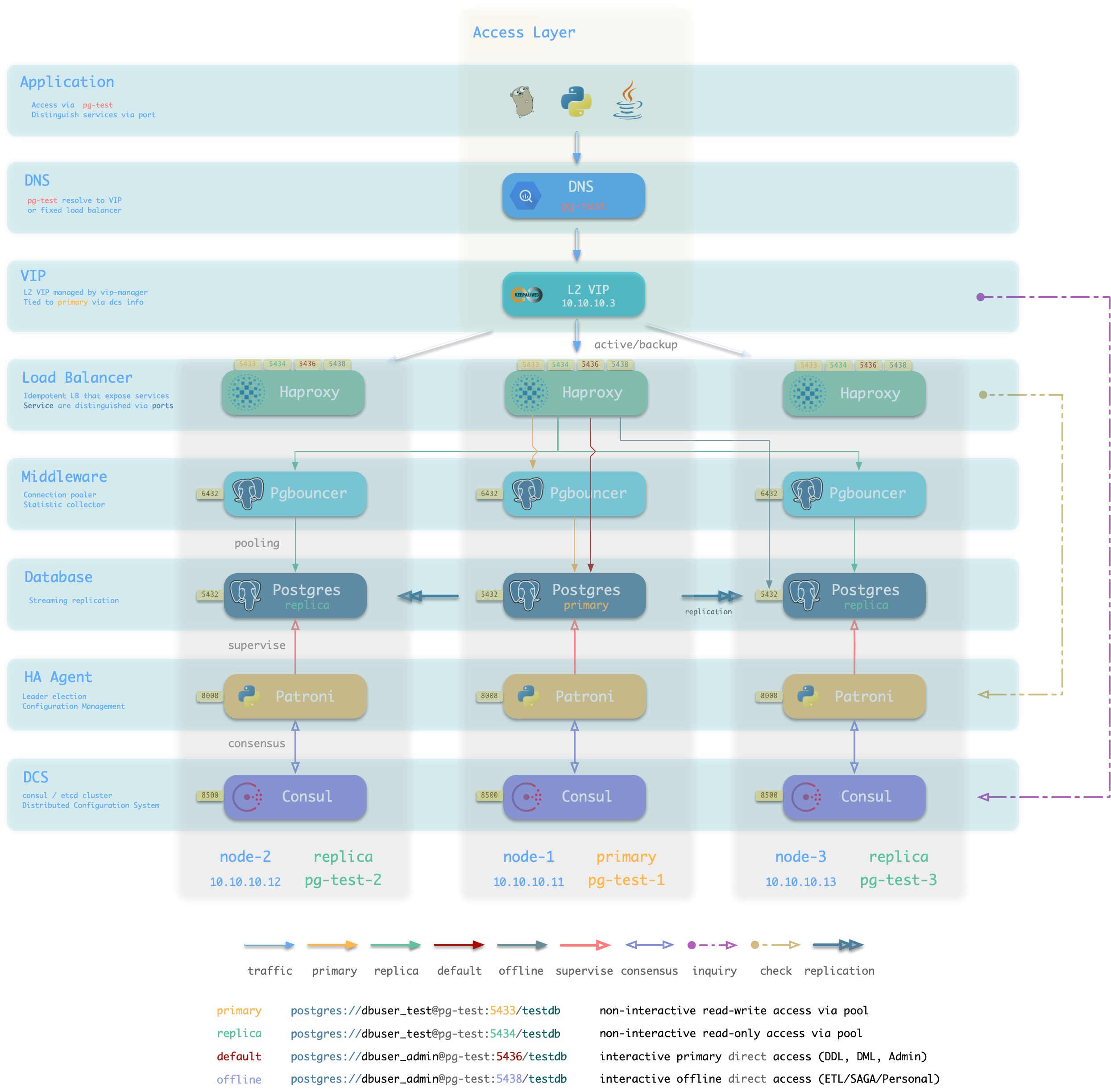

L2 VIP + Haproxy

方案简介

Pigsty沙箱使用的标准接入方案,采用单个域名绑定至单个L2 VIP,VIP指向集群中的HAProxy。

集群中的Haproxy采用Node Port的方式统一对外暴露 服务。每个Haproxy都是幂等的实例,提供完整的负载均衡与服务分发功能。而Haproxy本身的可用性则通过L2 VIP来保证。

每个集群都分配有一个L2 VIP,固定绑定至集群主库。当主库发生切换时,该L2 VIP也会随之漂移至新的主库上。这是通过vip-manager实现的:vip-manager会查询Consul获取集群当前主库信息,然后在主库上监听VIP地址。

集群的L2 VIP有与之对应的域名。域名固定解析至该L2 VIP,在生命周期中不发生变化。

方案优越性

-

无单点,高可用

-

VIP固定绑定至主库,可以灵活访问

方案局限性

方案示意

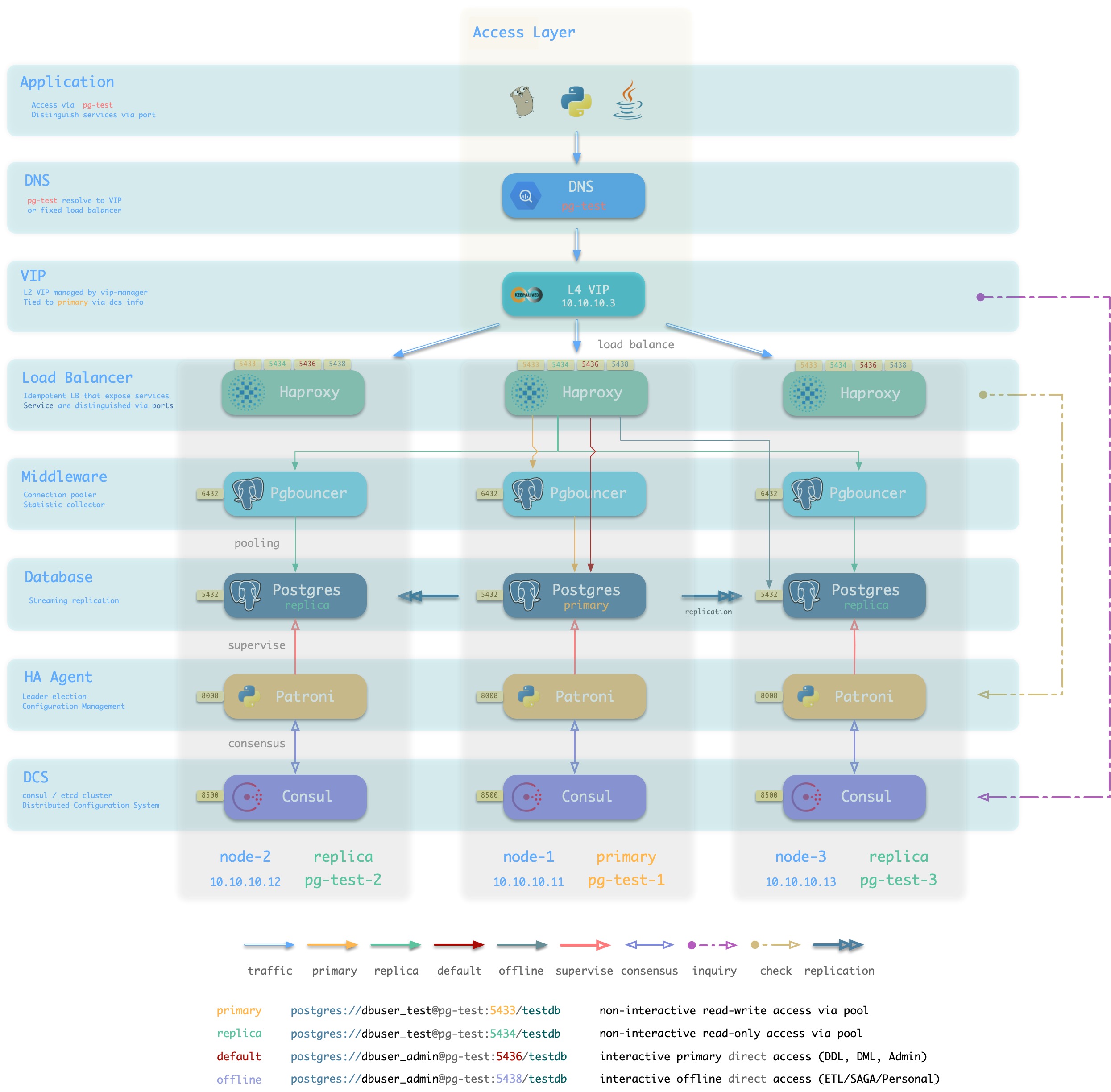

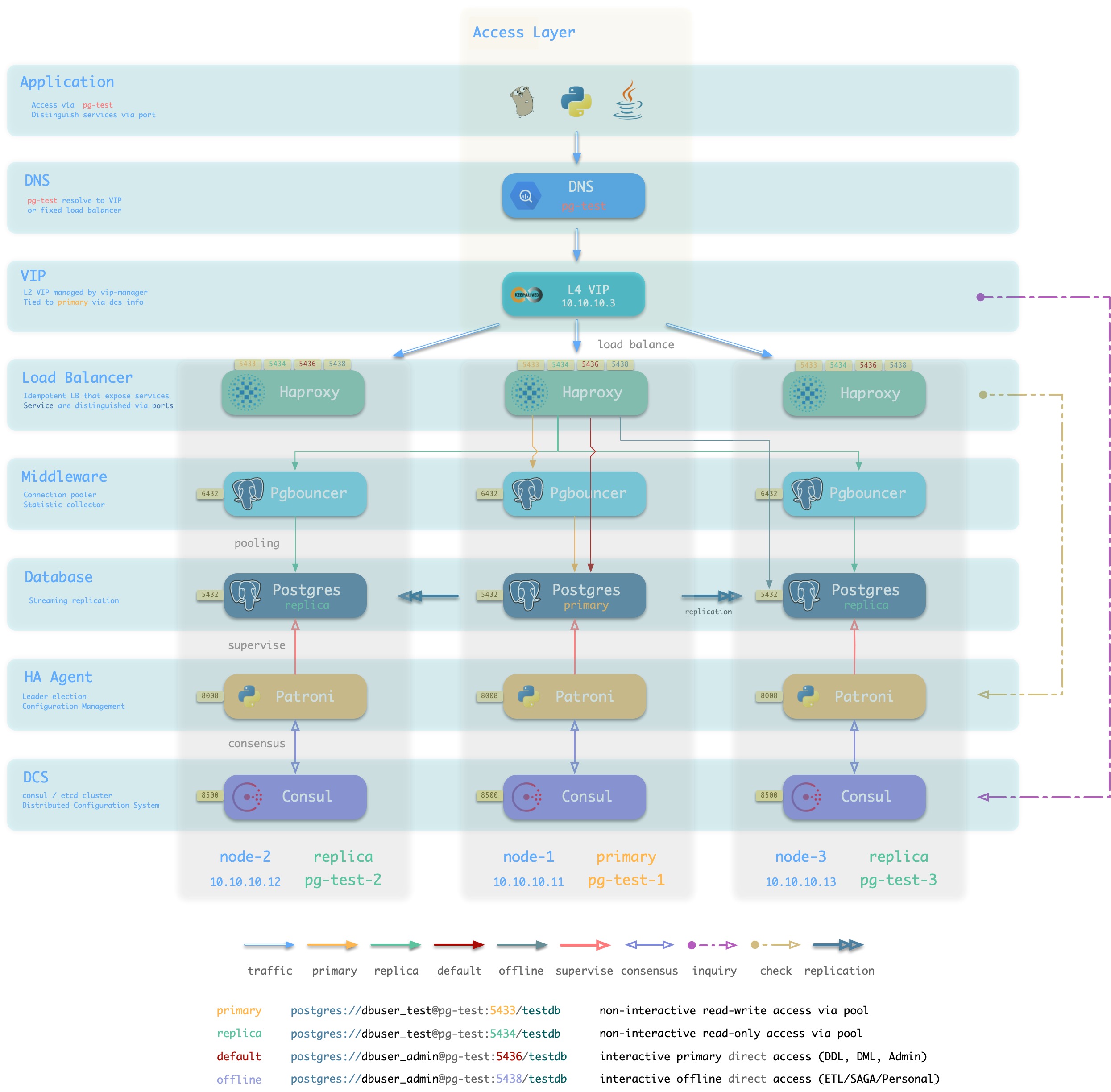

L4 VIP + Haproxy

方案简介

接入方案1/2的另一种变体,通过L4 VIP确保Haproxy的高可用

方案优越性

- 无单点,高可用

- 可以同时使用所有的Haproxy实例,均匀承载流量。

- 所有候选主库不需要位于同一二层网络。

- 可以操作单一VIP完成流量切换(如果同时使用了多个Haproxy,不需要逐个调整)

方案局限性

- 多两跳,较为浪费,如果有条件可以直接使用方案4: L4 VIP直接接入。

- Client IP地址丢失,部分HBA策略无法正常生效

方案示意

L4 VIP

方案简介

大规模高性能生产环境建议使用 L4 VIP接入(FullNAT,DPVS)

方案优越性

- 性能好,吞吐量大

- 可以通过

toa模块获取正确的客户端IP地址,HBA可以完整生效。

方案局限性

- 仍然多一条。

- 需要依赖外部基础设施,部署复杂。

- 未启用

toa内核模块时,仍然会丢失客户端IP地址。

- 没有Haproxy屏蔽主从差异,集群中的每个节点不再“幂等”。

方案示意

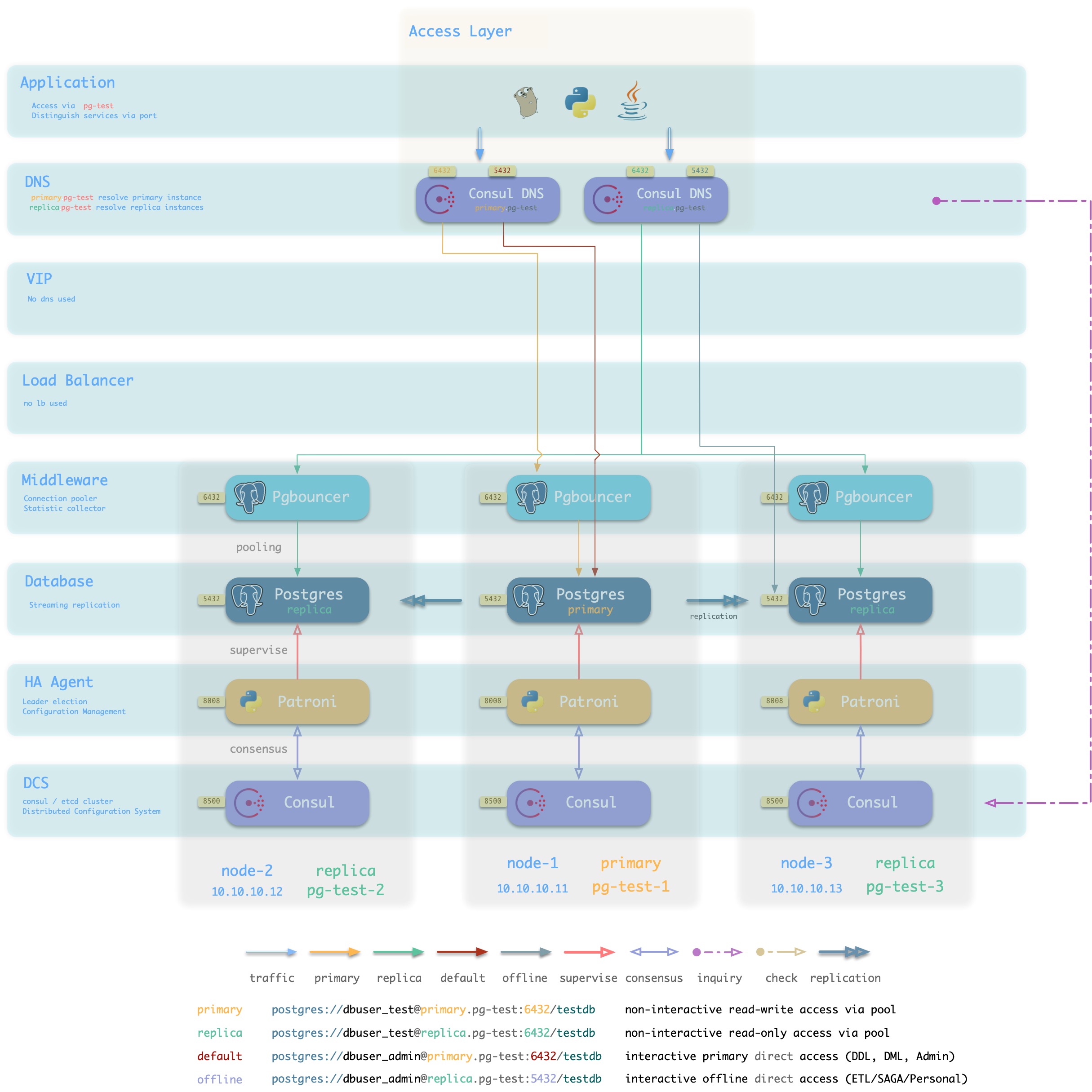

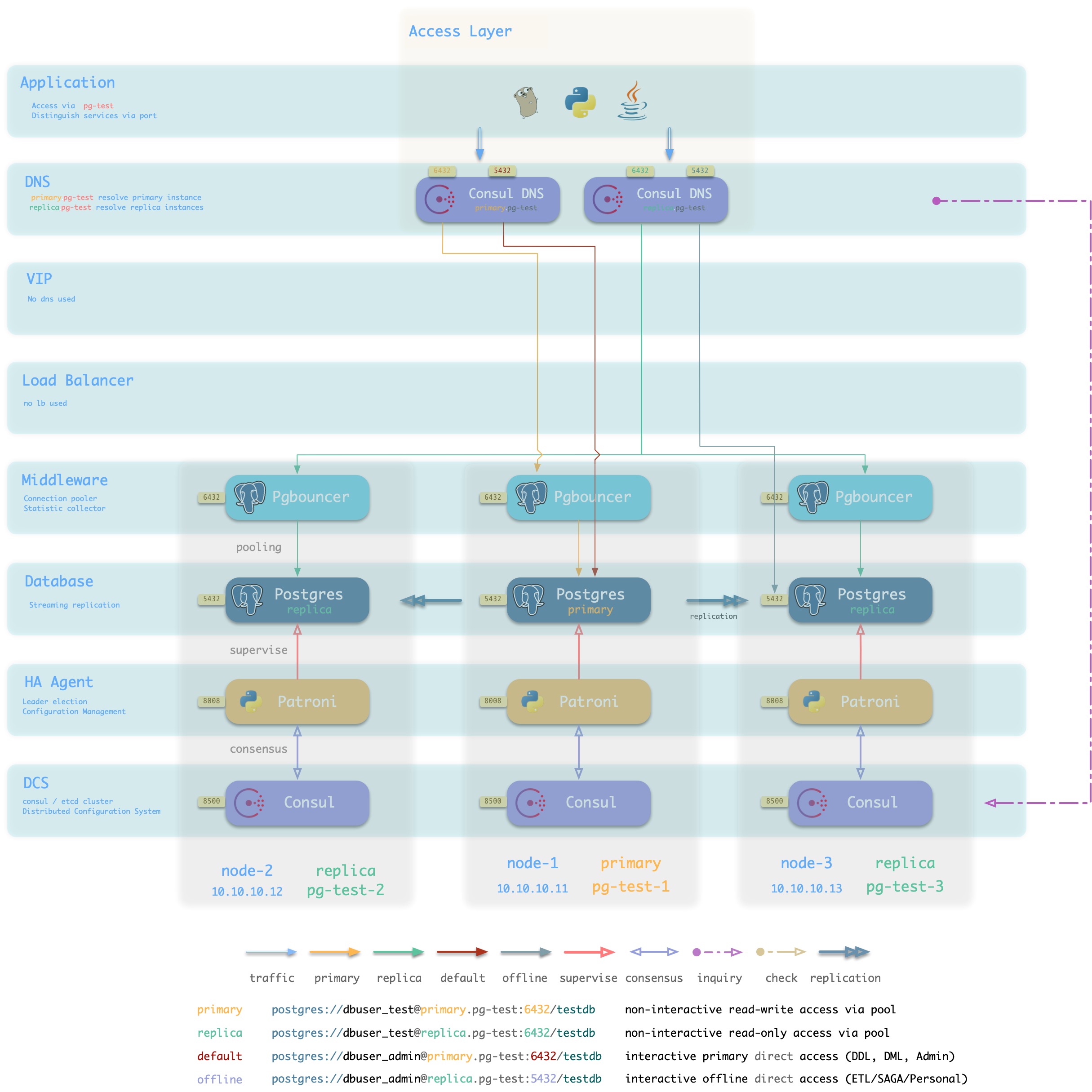

Consul DNS

方案简介

L2 VIP并非总是可用,特别是所有候选主库必须位于同一二层网络的要求可能不一定能满足。

在这种情况下,可以使用DNS解析代替L2 VIP,进行

方案优越性

方案局限性

- 依赖Consul DNS

- 用户需要合理配置DNS缓存策略

方案示意

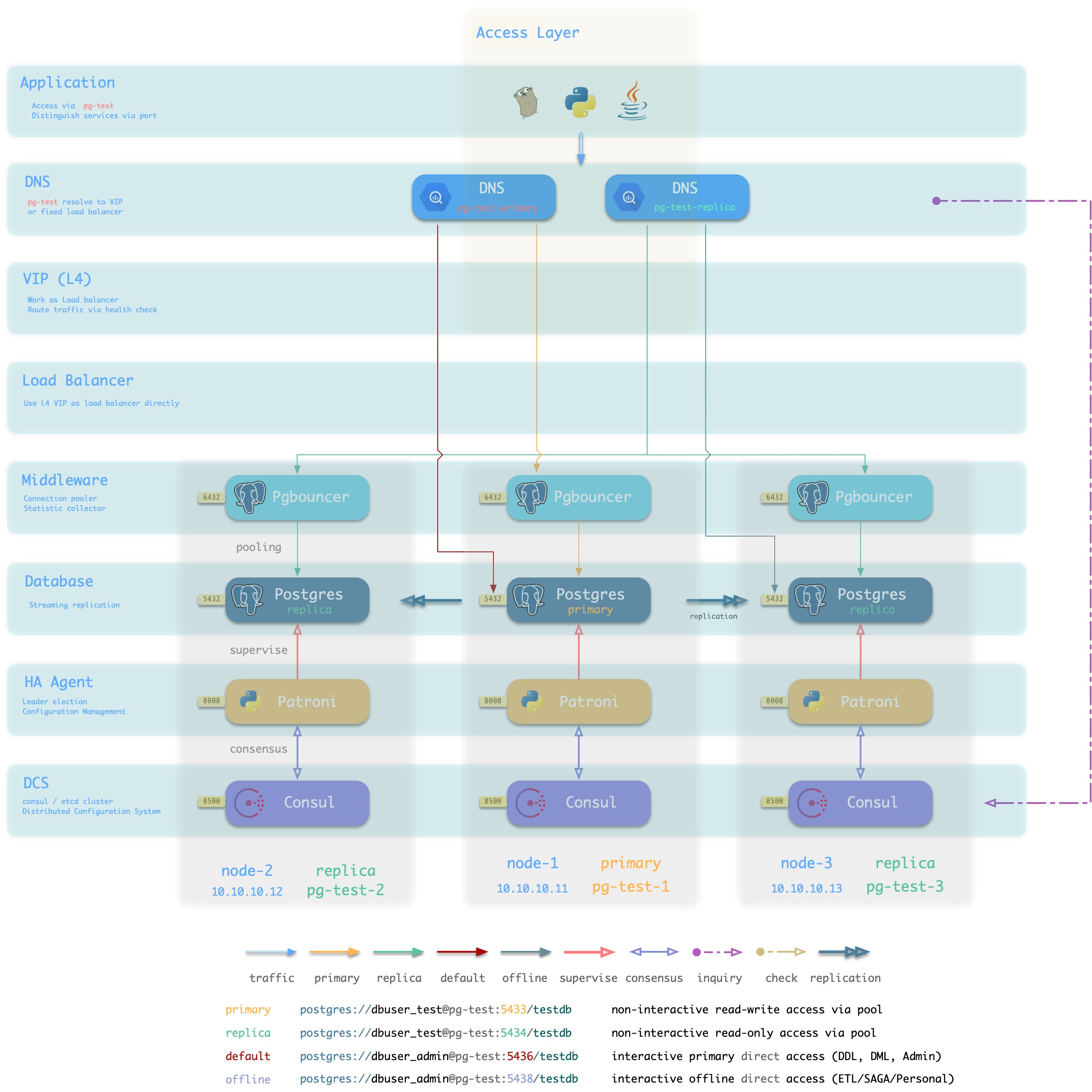

Static DNS

方案简介

传统静态DNS接入方式

方案优越性

方案局限性

方案示意

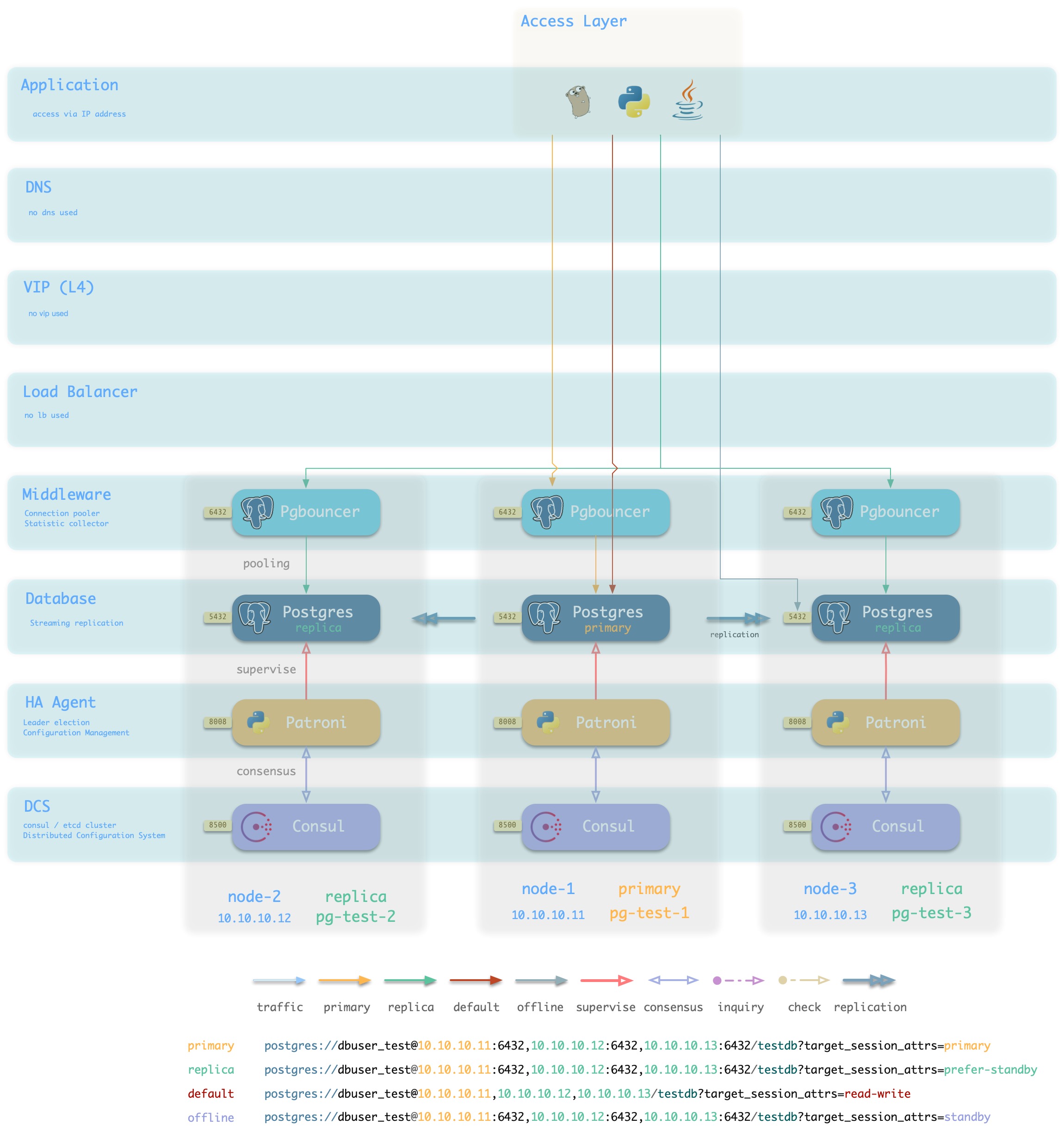

IP

方案简介

采用智能客户端直连数据库IP接入

方案优越性

- 直连数据库/连接池,少一条

- 不依赖额外组件进行主从区分,降低系统复杂性。

方案局限性

方案示意

2 - DB Service

How to access pigsty default service?

Service, the form of functionality that a database cluster provides to the outside world. In general, a database cluster** should provide at least two types of services**.

- read-write service (primary): users can write to the database

- read-only service (replica): users can access read-only copies

In addition, depending on the specific business scenario, there may be other services.

- offline replica service (offline): a dedicated slave that does not take on online read-only traffic, used for ETL and individual user queries.

- synchronous replica service (standby): read-only service with synchronous commit and no replication delay.

- delayed : Allows services to access old data before a fixed time interval.

- default : A service that allows (administrative) users to manage the database directly, bypassing the connection pool

Default Service

Pigsty provides four services outside the default queue: primary, replica, default, offline.

| service |

port |

purpose |

description |

| primary |

5433 |

production read/write |

connect to cluster primary via connection pool |

| replica |

5434 |

production read-only |

connection to cluster slave via connection pool |

| default |

5436 |

management |

direct connection to cluster master |

| offline |

5438 |

ETL/personal user |

connects directly to an available offline instance of the cluster |

| service |

port |

description |

sample |

| primary |

5433 |

Only production users can connect |

postgres://test@pg-test:5433/test |

| replica |

5434 |

Only production users can connect |

postgres://test@pg-test:5434/test |

| default |

5436 |

Administrator and DML executor can connect |

postgres://dbuser_admin@pg-test:5436/test |

| offline |

5438 |

ETL/STATS Individual users can connect |

postgres://dbuser_stats@pg-test-tt:5438/test

postgres://dbp_vonng@pg-test:5438/test |

Primary Service

The Primary service serves online production read and write access, which maps the cluster’s port 5433, to the primary connection pool (default 6432) port.

The Primary service selects all instances in the cluster as its members, but only those with a true health check /primary can actually take on traffic.

There is one and only one instance in the cluster that is the primary, and only its health check is true.

- name: primary # service name {{ pg_cluster }}_primary

src_ip: "*"

src_port: 5433

dst_port: pgbouncer # 5433 route to pgbouncer

check_url: /primary # primary health check, success when instance is primary

selector: "[]" # select all instance as primary service candidate

Replica Service

The Replica service serves online production read-only access, which maps the cluster’s port 5434, to the slave connection pool (default 6432) port.

The Replica service selects all instances in the cluster as its members, but only those with a true health check /read-only can actually take on traffic, and that health check returns success for all instances (including the master) that can take on read-only traffic. So any member of the cluster can carry read-only traffic.

But by default, only slave libraries carry read-only requests. The Replica service defines selector_backup, a selector that adds the cluster’s master library to the Replica service as a backup instance. The master will start taking read-only traffic** only when all other instances in the Replica service, i.e. **all slaves, are down.

# replica service will route {ip|name}:5434 to replica pgbouncer (5434->6432 ro)

- name: replica # service name {{ pg_cluster }}_replica

src_ip: "*"

src_port: 5434

dst_port: pgbouncer

check_url: /read-only # read-only health check. (including primary)

selector: "[]" # select all instance as replica service candidate

selector_backup: "[? pg_role == `primary`]" # primary are used as backup server in replica service

Default Service

The Default service serves the online primary direct connection, which maps the cluster’s port 5436, to the primary Postgres port (default 5432).

The Default service targets interactive read and write access, including: executing administrative commands, executing DDL changes, connecting to the primary library to execute DML, and executing CDC. interactive operations should not be accessed through connection pools, so the Default service forwards traffic directly to Postgres, bypassing the Pgbouncer.

The Default service is similar to the Primary service, using the same configuration options. The Default parameters are filled in explicitly for demonstration purposes.

# default service will route {ip|name}:5436 to primary postgres (5436->5432 primary)

- name: default # service's actual name is {{ pg_cluster }}-{{ service.name }}

src_ip: "*" # service bind ip address, * for all, vip for cluster virtual ip address

src_port: 5436 # bind port, mandatory

dst_port: postgres # target port: postgres|pgbouncer|port_number , pgbouncer(6432) by default

check_method: http # health check method: only http is available for now

check_port: patroni # health check port: patroni|pg_exporter|port_number , patroni by default

check_url: /primary # health check url path, / as default

check_code: 200 # health check http code, 200 as default

selector: "[]" # instance selector

haproxy: # haproxy specific fields

maxconn: 3000 # default front-end connection

balance: roundrobin # load balance algorithm (roundrobin by default)

default_server_options: 'inter 3s fastinter 1s downinter 5s rise 3 fall 3 on-marked-down shutdown-sessions slowstart 30s maxconn 3000 maxqueue 128 weight 100'

Offline Service

The Offline Service is used for offline access and personal queries. It maps the cluster’s 5438 port, to the offline instance Postgres port (default 5432).

The Offline Service is for interactive read-only access, including: ETL, offline large analytics queries, and individual user queries. Interactive operations should not be accessed through connection pools, so the Default service forwards traffic directly to the offline instance of Postgres, bypassing the Pgbouncer.

Offline instances are those with pg_role == offline or with the pg_offline_query flag. Other other slave libraries outside of the Offline instance will act as backup instances for Offline, so that when the Offline instance goes down, the Offline service can still get services from other slave libraries.

# offline service will route {ip|name}:5438 to offline postgres (5438->5432 offline)

- name: offline # service name {{ pg_cluster }}_replica

src_ip: "*"

src_port: 5438

dst_port: postgres

check_url: /replica # offline MUST be a replica

selector: "[? pg_role == `offline` || pg_offline_query ]" # instances with pg_role == 'offline' or instance marked with 'pg_offline_query == true'

selector_backup: "[? pg_role == `replica` && !pg_offline_query]" # replica are used as backup server in offline service

服务定义

Offline service is used for offline access and personal queries. It maps the cluster’s 5438 port, to the offline instance Postgres port (default 5432).

The Offline service is for interactive read-only access, including: ETL, offline large analytics queries, and individual user queries. Interactive operations should not be accessed through connection pools, so the Default service forwards traffic directly to the offline instance of Postgres, bypassing the Pgbouncer.

Offline instances are those with pg_role == offline or with the pg_offline_query flag. Other other slave libraries outside the Offline instance will act as backup instances for Offline, so that when the Offline instance goes down, the Offline service can still get services from other slave libraries.

# primary service will route {ip|name}:5433 to primary pgbouncer (5433->6432 rw)

- name: primary # service name {{ pg_cluster }}_primary

src_ip: "*"

src_port: 5433

dst_port: pgbouncer # 5433 route to pgbouncer

check_url: /primary # primary health check, success when instance is primary

selector: "[]" # select all instance as primary service candidate

# replica service will route {ip|name}:5434 to replica pgbouncer (5434->6432 ro)

- name: replica # service name {{ pg_cluster }}_replica

src_ip: "*"

src_port: 5434

dst_port: pgbouncer

check_url: /read-only # read-only health check. (including primary)

selector: "[]" # select all instance as replica service candidate

selector_backup: "[? pg_role == `primary`]" # primary are used as backup server in replica service

# default service will route {ip|name}:5436 to primary postgres (5436->5432 primary)

- name: default # service's actual name is {{ pg_cluster }}-{{ service.name }}

src_ip: "*" # service bind ip address, * for all, vip for cluster virtual ip address

src_port: 5436 # bind port, mandatory

dst_port: postgres # target port: postgres|pgbouncer|port_number , pgbouncer(6432) by default

check_method: http # health check method: only http is available for now

check_port: patroni # health check port: patroni|pg_exporter|port_number , patroni by default

check_url: /primary # health check url path, / as default

check_code: 200 # health check http code, 200 as default

selector: "[]" # instance selector

haproxy: # haproxy specific fields

maxconn: 3000 # default front-end connection

balance: roundrobin # load balance algorithm (roundrobin by default)

default_server_options: 'inter 3s fastinter 1s downinter 5s rise 3 fall 3 on-marked-down shutdown-sessions slowstart 30s maxconn 3000 maxqueue 128 weight 100'

# offline service will route {ip|name}:5438 to offline postgres (5438->5432 offline)

- name: offline # service name {{ pg_cluster }}_replica

src_ip: "*"

src_port: 5438

dst_port: postgres

check_url: /replica # offline MUST be a replica

selector: "[? pg_role == `offline` || pg_offline_query ]" # instances with pg_role == 'offline' or instance marked with 'pg_offline_query == true'

selector_backup: "[? pg_role == `replica` && !pg_offline_query]" # replica are used as backup server in offline service

Mandatory

-

Name (service.name).

service name, the full name of the service is prefixed by the database cluster name and suffixed by service.name, connected by -. For example, a service with name=primary in the pg-test cluster has the full service name pg-test-primary.

-

Port (service.port).

In Pigsty, services are exposed to the public by default in the form of NodePort, so exposing the port is mandatory. However, if you use an external load balancing service access scheme, you can also distinguish the services in other ways.

-

selector (service.selector).

The selector specifies the instance members of the service, in the form of a JMESPath that filters variables from all cluster instance members. The default [] selector will pick all cluster members.

Optional

-

backup selector (service.selector).

Optional backup selector service.selector_backup will select or mark the list of instances used for service backup, i.e. the backup instance takes over the service only when all other members of the cluster fail. For example, the primary instance can be added to the alternate set of the replica service, so that the master can still carry the read-only traffic of the cluster when all the slaves fail.

-

source_ip (service.src_ip).

Indicates the IP address used externally by the service. The default is *, which is all IP addresses on the local machine. Using vip will use the vip_address variable to take the value, or you can also fill in the specific IP address supported by the NIC.

-

Host port (service.dst_port).

Which port on the target instance will the service’s traffic be directed to? postgres will point to the port that the database listens on, pgbouncer will point to the port that the connection pool listens on, or you can fill in a fixed port number.

-

health check method (service.check_method):

How does the service check the health status of the instance? Currently only HTTP is supported

-

Health check port (service.check_port):

Which port does the service check the instance on to get the health status of the instance? patroni will get it from Patroni (default 8008), pg_exporter will get it from PG Exporter (default 9630), or user can fill in a custom port number.

-

Health check path (service.check_url):

The URL PATH used by the service to perform HTTP checks. / is used by default for health checks, and PG Exporter and Patroni provide a variety of health check methods that can be used to differentiate between master and slave traffic. For example, /primary will only return success for the master, and /replica will only return success for the slave. /read-only, on the other hand, will return success for any instance that supports read-only (including the master).

-

health check code (service.check_code):

The code expected for HTTP health checks, default is 200

-

Haproxy-specific configuration (service.haproxy) :

Proprietary configuration items about the service provisioning software (HAproxy)

3 - HA

Introduction to High Availability

The database cluster created by Pigsty is a distributed, highly available database cluster.

Effectively, as long as any instance in the cluster survives, the cluster can provide complete read and write services and read-only services to the outside world.

Each database instance in the database cluster is idempotent in use, and any instance can provide complete read and write services through the built-in load balancing components.

Database clusters can automatically perform fault detection and master-slave switching, and common failures can self-heal within seconds to tens of seconds, and read-only traffic is not affected during this period.

High Availability

Two core scenarios: Switchover, Failover

Four core issues: Fault detection, Fencing, master selection, traffic switching

For a walkthrough of the core scenarios of high availability, please refer to [High Availability Walkthrough](… /… /… /tasks/ha-drill/) section.

Patroni-based high availability scenarios

The Patroni based high availability solution is simple to deploy, does not require the use of special hardware, and has a large number of real production use cases to back it up.

Pigsty’s high availability solution is based on Patroni, vip-manager, haproxy

Patroni is based on DCS (etcd/consul/zookeeper) to reach a master selection consensus.

Patroni’s failure detection uses heartbeat packet to keep alive, DCS lease mechanism to achieve. The main repository holds the lease, and if Qin loses its deer, the world will fight it.

Patroni’s Fencing is based on the Linux kernel module watchdog.

Patroni provides master-slave health checks for easy integration with external load balancers.

Haproxy and VIP based access layer solutions

Pigsty sandboxes use by default L2 VIP and Haproxy based access layer solutions, Pigsty provides several optional [database access](… /… /… /concept/provision/access/) methods.

!

Haproxy idempotently is deployed on each instance of the cluster, and any one or more Haproxy instances can act as a load balancer for the cluster.

Haproxy uses a Node Port-like approach to expose its services to the public. By default, port 5433 provides read and write services to the cluster, while port 5434 provides read-only services to the cluster.

High availability of Haproxy itself can be achieved in several ways.

- Using a smart client that connects to the database using the DNS or service discovery mechanism provided by Consul.

- Using a smart client that uses the Multi-Host feature to populate all instances in the cluster.

- Use VIPs bound in front of Haproxy (Layer 2 or 4)

- Use external load balancers to guarantee

- Use DNS polling to resolve to multiple Haproxy, clients will re-execute DNS resolution and retry after a disconnect.

Patroni’s behavior in case of failure

| 场景 |

位置 |

Patroni的动作 |

| PG Down |

replica |

尝试重新拉起PG |

| Patroni Down |

replica |

PG随之关闭(维护模式下不变) |

| Patroni Crash |

replica |

PG不会随Patroni一并关闭 |

| DCS Network Partition |

replica |

无事 |

| Promote |

replica |

将PG降为从库并重新挂至主库。 |

| PG Down |

primary |

尝试重启PG

超过master_start_timeout后执行Failover |

| Patroni Down |

primary |

关闭PG并触发Failover |

| Patroni Crash |

primary |

触发Failover,可能触发脑裂。

可通过watchdog fencing避免。 |

| DCS Network Partition |

primary |

主库降级为从库,触发Failover |

| DCS Down |

DCS |

主库降级为从库,集群中没有主库,不可写入。 |

| 同步模式下无可用备选 |

|

临时切换为异步复制。

恢复为同步复制前不会Failover |

The proper configuration of Patroni can handle most failures. However, a scenario like DCS Down (Consul/Etcd down or network unreachable) will render all production database clusters unwritable and requires special attention. **Must ensure that DCS availability is higher than database availability. **

Known Issue

Please try to ensure that the server’s time synchronization service starts before Patroni.

4 - File Structure

Introduction to pigsty file structure hierarchy

The following parameters are related to the Pigsty directory structure

- pg_dbsu_home: home directory of the default user of Postgres, default is

/var/lib/pgsql

- pg_bin_dir: Postgres binary directory, defaults to

/usr/pgsql/bin/

- pg_data: Postgres database directory, defaults to

/pg/data

- pg_fs_main: Postgres main data disk mount point, default is

/export

- pg_fs_bkup: Postgres backup disk mount point, default is

/var/backups (optional, you can also choose to backup to the main data disk)

Overview

#------------------------------------------------------------------------------

# Create Directory

#------------------------------------------------------------------------------

# this assumes that

# /pg is shortcut for postgres home

# {{ pg_fs_main }} contains the main data (MUST ALREADY MOUNTED)

# {{ pg_fs_bkup }} contains archive and backup data (MUST ALREADY MOUNTED)

# cluster-version is the default parent folder for pgdata (e.g pg-test-12)

#------------------------------------------------------------------------------

# default variable:

# pg_fs_main = /export fast ssd

# pg_fs_bkup = /var/backups cheap hdd

#

# /pg -> /export/postgres/pg-test-12

# /pg/data -> /export/postgres/pg-test-12/data

#------------------------------------------------------------------------------

- name: Create postgresql directories

tags: pg_dir

become: yes

block:

- name: Make sure main and backup dir exists

file: path={{ item }} state=directory owner=root mode=0777

with_items:

- "{{ pg_fs_main }}"

- "{{ pg_fs_bkup }}"

# pg_cluster_dir: "{{ pg_fs_main }}/postgres/{{ pg_cluster }}-{{ pg_version }}"

- name: Create postgres directory structure

file: path={{ item }} state=directory owner={{ pg_dbsu }} group=postgres mode=0700

with_items:

- "{{ pg_fs_main }}/postgres"

- "{{ pg_cluster_dir }}"

- "{{ pg_cluster_dir }}/bin"

- "{{ pg_cluster_dir }}/log"

- "{{ pg_cluster_dir }}/tmp"

- "{{ pg_cluster_dir }}/conf"

- "{{ pg_cluster_dir }}/data"

- "{{ pg_cluster_dir }}/meta"

- "{{ pg_cluster_dir }}/stat"

- "{{ pg_cluster_dir }}/change"

- "{{ pg_backup_dir }}/postgres"

- "{{ pg_backup_dir }}/arcwal"

- "{{ pg_backup_dir }}/backup"

- "{{ pg_backup_dir }}/remote"

PG二进制目录结构

在RedHat/CentOS上,默认的Postgres发行版安装位置为

/usr/pgsql-${pg_version}/

安装剧本会自动创建指向当前安装版本的软连接,例如,如果安装了13版本的Postgres,则有:

/usr/pgsql -> /usr/pgsql-13

因此,默认的pg_bin_dir为/usr/pgsql/bin/,该路径会在/etc/profile.d/pgsql.sh中添加至所有用户的PATH环境变量中。

PG数据目录结构

Pigsty假设用于部署数据库实例的单个节点上至少有一块主数据盘(pg_fs_main),以及一块可选的备份数据盘(pg_fs_bkup)。通常主数据盘是高性能SSD,而备份盘是大容量廉价HDD。

#------------------------------------------------------------------------------

# Create Directory

#------------------------------------------------------------------------------

# this assumes that

# /pg is shortcut for postgres home

# {{ pg_fs_main }} contains the main data (MUST ALREADY MOUNTED)

# {{ pg_fs_bkup }} contains archive and backup data (MAYBE ALREADY MOUNTED)

# {{ pg_cluster }}-{{ pg_version }} is the default parent folder

# for pgdata (e.g pg-test-12)

#------------------------------------------------------------------------------

# default variable:

# pg_fs_main = /export fast ssd

# pg_fs_bkup = /var/backups cheap hdd

#

# /pg -> /export/postgres/pg-test-12

# /pg/data -> /export/postgres/pg-test-12/data

PG数据库集簇目录结构

# basic

{{ pg_fs_main }} /export # contains all business data (pg,consul,etc..)

{{ pg_dir_main }} /export/postgres # contains postgres main data

{{ pg_cluster_dir }} /export/postgres/pg-test-13 # contains cluster `pg-test` data (of version 13)

/export/postgres/pg-test-13/bin # binary scripts

/export/postgres/pg-test-13/log # misc logs

/export/postgres/pg-test-13/tmp # tmp, sql files, records

/export/postgres/pg-test-13/conf # configurations

/export/postgres/pg-test-13/data # main data directory

/export/postgres/pg-test-13/meta # identity information

/export/postgres/pg-test-13/stat # stats information

/export/postgres/pg-test-13/change # changing records

{{ pg_fs_bkup }} /var/backups # contains all backup data (pg,consul,etc..)

{{ pg_dir_bkup }} /var/backups/postgres # contains postgres backup data

{{ pg_backup_dir }} /var/backups/postgres/pg-test-13 # contains cluster `pg-test` backup (of version 13)

/var/backups/postgres/pg-test-13/backup # base backup

/var/backups/postgres/pg-test-13/arcwal # WAL archive

/var/backups/postgres/pg-test-13/remote # mount NFS/S3 remote resources here

# links

/pg -> /export/postgres/pg-test-12 # pg root link

/pg/data -> /export/postgres/pg-test-12/data # real data dir

/pg/backup -> /var/backups/postgres/pg-test-13/backup # base backup

/pg/arcwal -> /var/backups/postgres/pg-test-13/arcwal # WAL archive

/pg/remote -> /var/backups/postgres/pg-test-13/remote # mount NFS/S3 remote resources here

Pgbouncer配置文件结构

Pgbouncer使用Postgres用户运行,配置文件位于/etc/pgbouncer。配置文件包括:

pgbouncer.ini,主配置文件userlist.txt:列出连接池中的用户pgb_hba.conf:列出连接池用户的访问权限database.txt:列出连接池中的数据库

5 - Access Control

Introduction to Pigsty ACL models

PostgreSQL提供了两类访问控制机制:认证(Authentication) 与 权限(Privileges)

Pigsty带有基本的访问控制模型,足以覆盖绝大多数应用场景。

用户体系

Pigsty的默认权限系统包含四个默认用户与四类默认角色 。

用户可以通过修改 pg_default_roles 来修改默认用户的名字,但默认角色的名字不建议新用户自行修改。

默认角色

Pigsty带有四个默认角色:

- 只读角色(

dbrole_readonly):只读

- 读写角色(

dbrole_readwrite):读写,继承dbrole_readonly

- 管理角色(

dbrole_admin):�执行DDL变更,继承dbrole_readwrite

- 离线角色(

dbrole_offline):只读,用于执行慢查询/ETL/交互查询,仅允许在特定实例上访问。

默认用户

Pigsty带有四个默认用户:

- 超级用户(

postgres),数据库的拥有者与创建者,与操作系统用户一致

- 复制用户(

replicator),用于主从复制的用户。

- 监控用户(

dbuser_monitor),用于监控数据库指标的用户。

- 管理员(

dbuser_admin),执行日常管理操作与数据库变更。(通常供DBA使用)

| name |

attr |

roles |

desc |

| dbrole_readonly |

Cannot login |

|

role for global readonly access |

| dbrole_readwrite |

Cannot login |

dbrole_readonly |

role for global read-write access |

| dbrole_offline |

Cannot login |

|

role for restricted read-only access (offline instance) |

| dbrole_admin |

Cannot login

Bypass RLS |

pg_monitor

pg_signal_backend

dbrole_readwrite |

role for object creation |

| postgres |

Superuser

Create role

Create DB

Replication

Bypass RLS |

|

system superuser |

| replicator |

Replication

Bypass RLS |

pg_monitor

dbrole_readonly |

system replicator |

| dbuser_monitor |

16 connections |

pg_monitor

dbrole_readonly |

system monitor user |

| dbuser_admin |

Bypass RLS

Superuser |

dbrole_admin |

system admin user |

相关配置

以下是8个默认用户/角色的相关变量

默认用户有专用的用户名与密码配置选项,会覆盖 pg_default_roles中的选项。因此无需在其中为默认用户配置密码。

出于安全考虑,不建议为DBSU配置密码,故pg_dbsu没有专门的密码配置项。如有需要,用户可以在pg_default_roles中为超级用户指定密码。

# - system roles - #

pg_replication_username: replicator # system replication user

pg_replication_password: DBUser.Replicator # system replication password

pg_monitor_username: dbuser_monitor # system monitor user

pg_monitor_password: DBUser.Monitor # system monitor password

pg_admin_username: dbuser_admin # system admin user

pg_admin_password: DBUser.Admin # system admin password

# - default roles - #

# chekc http://pigsty.cc/zh/docs/concepts/provision/acl/ for more detail

pg_default_roles:

# common production readonly user

- name: dbrole_readonly # production read-only roles

login: false

comment: role for global readonly access

# common production read-write user

- name: dbrole_readwrite # production read-write roles

login: false

roles: [dbrole_readonly] # read-write includes read-only access

comment: role for global read-write access

# offline have same privileges as readonly, but with limited hba access on offline instance only

# for the purpose of running slow queries, interactive queries and perform ETL tasks

- name: dbrole_offline

login: false

comment: role for restricted read-only access (offline instance)

# admin have the privileges to issue DDL changes

- name: dbrole_admin

login: false

bypassrls: true

comment: role for object creation

roles: [dbrole_readwrite,pg_monitor,pg_signal_backend]

# dbsu, name is designated by `pg_dbsu`. It's not recommend to set password for dbsu

- name: postgres

superuser: true

comment: system superuser

# default replication user, name is designated by `pg_replication_username`, and password is set by `pg_replication_password`

- name: replicator

replication: true

roles: [pg_monitor, dbrole_readonly]

comment: system replicator

# default replication user, name is designated by `pg_monitor_username`, and password is set by `pg_monitor_password`

- name: dbuser_monitor

connlimit: 16

comment: system monitor user

roles: [pg_monitor, dbrole_readonly]

# default admin user, name is designated by `pg_admin_username`, and password is set by `pg_admin_password`

- name: dbuser_admin

bypassrls: true

comment: system admin user

roles: [dbrole_admin]

# default stats user, for ETL and slow queries

- name: dbuser_stats

password: DBUser.Stats

comment: business offline user for offline queries and ETL

roles: [dbrole_offline]

Pgbouncer用户

Pgbouncer的操作系统用户将与数据库超级用户保持一致,默认都使用postgres。

Pigsty默认会使用Postgres管理用户作为Pgbouncer的管理用户,使用Postgres的监控用户同时作为Pgbouncer的监控用户。

Pgbouncer的用户权限通过/etc/pgbouncer/pgb_hba.conf进行控制。

Pgbounce的用户列表通过/etc/pgbouncer/userlist.txt文件进行控制。

定义用户时,只有显式添加pgbouncer: true 的用户,才会被加入到Pgbouncer的用户列表中。

用户的定义

Pigsty中的用户可以通过以下两个参数进行声明,两者使用同样的形式:

用户的创建

Pigsty的用户可以通过 pgsql-createuser.yml 剧本完成创建

权限模型

默认情况下,角色拥有的权限如下所示:

GRANT USAGE ON SCHEMAS TO dbrole_readonly

GRANT SELECT ON TABLES TO dbrole_readonly

GRANT SELECT ON SEQUENCES TO dbrole_readonly

GRANT EXECUTE ON FUNCTIONS TO dbrole_readonly

GRANT USAGE ON SCHEMAS TO dbrole_offline

GRANT SELECT ON TABLES TO dbrole_offline

GRANT SELECT ON SEQUENCES TO dbrole_offline

GRANT EXECUTE ON FUNCTIONS TO dbrole_readonly

GRANT INSERT, UPDATE, DELETE ON TABLES TO dbrole_readwrite

GRANT USAGE, UPDATE ON SEQUENCES TO dbrole_readwrite

GRANT TRUNCATE, REFERENCES, TRIGGER ON TABLES TO dbrole_admin

GRANT CREATE ON SCHEMAS TO dbrole_admin

GRANT USAGE ON TYPES TO dbrole_admin

其他业务用户默认都应当属于四种默认角色之一:只读,读写,管理员,离线访问。

| Owner |

Schema |

Type |

Access privileges |

| username |

|

function |

=X/postgres |

|

|

|

postgres=X/postgres |

|

|

|

dbrole_readonly=X/postgres |

|

|

|

dbrole_offline=X/postgres |

| username |

|

schema |

postgres=UC/postgres |

|

|

|

dbrole_readonly=U/postgres |

|

|

|

dbrole_offline=U/postgres |

|

|

|

dbrole_admin=C/postgres |

| username |

|

sequence |

postgres=rwU/postgres |

|

|

|

dbrole_readonly=r/postgres |

|

|

|

dbrole_readwrite=wU/postgres |

|

|

|

dbrole_offline=r/postgres |

| username |

|

table |

postgres=arwdDxt/postgres |

|

|

|

dbrole_readonly=r/postgres |

|

|

|

dbrole_readwrite=awd/postgres |

|

|

|

dbrole_offline=r/postgres |

|

|

|

dbrole_admin=Dxt/postgres |

所有用户都可以访问所有模式,只读用户可以读取所有表,读写用户可以对所有表进行DML操作,管理员可以执行DDL变更操作。离线用户与只读用户类似,但只允许访问pg_role == 'offline' 或带有 pg_offline_query = true 的实例。

数据库权限

数据库有三种权限:CONNECT, CREATE, TEMP,以及特殊的属主OWNERSHIP。数据库的定义由参数 pg_database 控制。一个完整的数据库定义如下所示:

pg_databases:

- name: meta # name is the only required field for a database

owner: postgres # optional, database owner

template: template1 # optional, template1 by default

encoding: UTF8 # optional, UTF8 by default

locale: C # optional, C by default

allowconn: true # optional, true by default, false disable connect at all

revokeconn: false # optional, false by default, true revoke connect from public # (only default user and owner have connect privilege on database)

tablespace: pg_default # optional, 'pg_default' is the default tablespace

connlimit: -1 # optional, connection limit, -1 or none disable limit (default)

extensions: # optional, extension name and where to create

- {name: postgis, schema: public}

parameters: # optional, extra parameters with ALTER DATABASE

enable_partitionwise_join: true

pgbouncer: true # optional, add this database to pgbouncer list? true by default

comment: pigsty meta database # optional, comment string for database

默认情况下,如果数据库没有配置属主,那么数据库超级用户dbsu将会作为数据库的默认OWNER,否则将为指定用户。

默认情况下,所有用户都具有对新创建数据库的CONNECT 权限,如果希望回收该权限,设置 revokeconn == true,则该权限会被回收。只有默认用户(dbsu|admin|monitor|replicator)与数据库的属主才会被显式赋予CONNECT权限。同时,admin|owner将会具有CONNECT权限的GRANT OPTION,可以将CONNECT权限转授他人。

如果希望实现不同数据库之间的访问隔离,可以为每一个数据库创建一个相应的业务用户作为owner,并全部设置revokeconn选项。这种配置对于多租户实例尤为实用。

创建新对象

默认情况下,出于安全考虑,Pigsty会撤销PUBLIC用户在数据库下CREATE新模式的权限,同时也会撤销PUBLIC用户在public模式下创建新关系的权限。数据库超级用户与管理员不受此限制,他们总是可以在任何地方执行DDL变更。

Pigsty非常不建议使用业务用户执行DDL变更,因为PostgreSQL的ALTER DEFAULT PRIVILEGE仅针对“由特定用户创建的对象”生效,默认情况下超级用户postgres和dbuser_admin创建的对象拥有默认的权限配置,如果用户希望授予业务用户dbrole_admin,请在使用该业务管理员执行DDL变更时首先执行:

SET ROLE dbrole_admin; -- dbrole_admin 创建的对象具有正确的默认权限

在数据库中创建对象的权限与用户是否为数据库属主无关,这只取决于创建该用户时是否为该用户赋予管理员权限。

pg_users:

- {name: test1, password: xxx , groups: [dbrole_readwrite]} # 不能创建Schema与对象

- {name: test2, password: xxx , groups: [dbrole_admin]} # 可以创建Schema与对象

认证模型

HBA是Host Based Authentication的缩写,可以将其视作IP黑白名单。

HBA配置方式

在Pigsty中,所有实例的HBA都由配置文件生成而来,最终生成的HBA规则取决于实例的角色(pg_role)

Pigsty的HBA由下列变量控制:

pg_hba_rules: 环境统一的HBA规则pg_hba_rules_extra: 特定于实例或集群的HBA规则pgbouncer_hba_rules: 链接池使用的HBA规则pgbouncer_hba_rules_extra: 特定于实例或集群的链接池HBA规则

每个变量都是由下列样式的规则组成的数组:

- title: allow intranet admin password access

role: common

rules:

- host all +dbrole_admin 10.0.0.0/8 md5

- host all +dbrole_admin 172.16.0.0/12 md5

- host all +dbrole_admin 192.168.0.0/16 md5

基于角色的HBA

role = common的HBA规则组会安装到所有的实例上,而其他的取值,例如(role : primary)则只会安装至pg_role = primary的实例上。因此用户可以通过角色体系定义灵活的HBA规则。

作为一个特例,role: offline 的HBA规则,除了会安装至pg_role == 'offline'的实例,也会安装至pg_offline_query == true的实例上。

默认配置

在默认配置下,主库与从库会使用以下的HBA规则:

- 超级用户通过本地操作系统认证访问

- 其他用户可以从本地用密码访问

- 复制用户可以从局域网段通过密码访问

- 监控用户可以通过本地访问

- 所有人都可以在元节点上使用密码访问

- 管理员可以从局域网通过密码访问

- 所有人都可以从内网通过密码访问

- 读写用户(生产业务账号)可以通过本地(链接池)访问

(部分访问控制转交链接池处理)

- 在从库上:只读用户(个人)可以从本地(链接池)访问。

(意味主库上拒绝只读用户连接)

pg_role == 'offline' 或带有pg_offline_query == true的实例上,会添加允许dbrole_offline分组用户访问的HBA规则。

#==============================================================#

# Default HBA

#==============================================================#

# allow local su with ident"

local all postgres ident

local replication postgres ident

# allow local user password access

local all all md5

# allow local/intranet replication with password

local replication replicator md5

host replication replicator 127.0.0.1/32 md5

host all replicator 10.0.0.0/8 md5

host all replicator 172.16.0.0/12 md5

host all replicator 192.168.0.0/16 md5

host replication replicator 10.0.0.0/8 md5

host replication replicator 172.16.0.0/12 md5

host replication replicator 192.168.0.0/16 md5

# allow local role monitor with password

local all dbuser_monitor md5

host all dbuser_monitor 127.0.0.1/32 md5

#==============================================================#

# Extra HBA

#==============================================================#

# add extra hba rules here

#==============================================================#

# primary HBA

#==============================================================#

#==============================================================#

# special HBA for instance marked with 'pg_offline_query = true'

#==============================================================#

#==============================================================#

# Common HBA

#==============================================================#

# allow meta node password access

host all all 10.10.10.10/32 md5

# allow intranet admin password access

host all +dbrole_admin 10.0.0.0/8 md5

host all +dbrole_admin 172.16.0.0/12 md5

host all +dbrole_admin 192.168.0.0/16 md5

# allow intranet password access

host all all 10.0.0.0/8 md5

host all all 172.16.0.0/12 md5

host all all 192.168.0.0/16 md5

# allow local read/write (local production user via pgbouncer)

local all +dbrole_readonly md5

host all +dbrole_readonly 127.0.0.1/32 md5

#==============================================================#

# Ad Hoc HBA

#===========================================================