DB Access

How to access database?

By Provisioning Solution, we mean a system that delivers database services and monitoring systems to users.

Provisioning Solution is not a database, but a database factory.

The user submits a configuration to the provisioning system, and the provisioning system creates the required database cluster in the environment according to the user’s desired specifications.

This is similar to submitting a YAML file to Kubernetes, which then creates the resources the file describes.

For example, the following configuration declares a PostgreSQL database cluster named pg-test.

    #-----------------------------
    # cluster: pg-test
    #-----------------------------
    pg-test: # define cluster named 'pg-test'
      # - cluster members - #
      hosts:
        10.10.10.11: {pg_seq: 1, pg_role: primary, ansible_host: node-1}
        10.10.10.12: {pg_seq: 2, pg_role: replica, ansible_host: node-2}
        10.10.10.13: {pg_seq: 3, pg_role: offline, ansible_host: node-3}
      # - cluster configs - #
      vars:
        # basic settings
        pg_cluster: pg-test # define actual cluster name
        pg_version: 13 # define installed pgsql version
        node_tune: tiny # tune node into oltp|olap|crit|tiny mode
        pg_conf: tiny.yml # tune pgsql into oltp|olap|crit|tiny mode
        # business users, adjust to your own needs
        pg_users:
          - name: test # example production user with read-write access
            password: test # example user's password
            roles: [dbrole_readwrite] # dbrole_admin|dbrole_readwrite|dbrole_readonly|dbrole_offline
            pgbouncer: true # production user that accesses via pgbouncer
            comment: default test user for production usage
        pg_databases: # create a business database 'test'
          - name: test # use the simplest form
        pg_default_database: test # default database will be used as primary monitor target
        # proxy settings
        vip_mode: l2 # enable/disable vip (requires members in the same LAN)
        vip_address: 10.10.10.3 # virtual ip address
        vip_cidrmask: 8 # cidr network mask length
        vip_interface: eth1 # interface to add virtual ip
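To make the idea of a declaration as plain data more concrete, here is a minimal Python sketch that copies the `hosts` entries from the pg-test declaration above, groups the members by role, and runs two sanity checks. The function names and both checks (exactly one primary, unique `pg_seq`) are assumptions made for illustration; they are not Pigsty's actual validation logic.

```python
from collections import Counter

# Cluster members copied from the pg-test declaration above.
PG_TEST_HOSTS = {
    "10.10.10.11": {"pg_seq": 1, "pg_role": "primary", "ansible_host": "node-1"},
    "10.10.10.12": {"pg_seq": 2, "pg_role": "replica", "ansible_host": "node-2"},
    "10.10.10.13": {"pg_seq": 3, "pg_role": "offline", "ansible_host": "node-3"},
}


def check_members(hosts):
    """Return a list of problems found in a hosts mapping.

    Hypothetical checks for illustration only, not Pigsty's own rules.
    """
    problems = []
    roles = Counter(member["pg_role"] for member in hosts.values())
    if roles["primary"] != 1:
        problems.append(f"expected exactly one primary, found {roles['primary']}")
    seqs = [member["pg_seq"] for member in hosts.values()]
    if len(seqs) != len(set(seqs)):
        problems.append("pg_seq values must be unique within a cluster")
    return problems


def members_by_role(hosts):
    """Group member IP addresses by their declared pg_role."""
    grouped = {}
    for ip, member in hosts.items():
        grouped.setdefault(member["pg_role"], []).append(ip)
    return grouped


if __name__ == "__main__":
    print(members_by_role(PG_TEST_HOSTS))
    print(check_members(PG_TEST_HOSTS) or "declaration looks consistent")
```

Treating the declaration as data in this way is what allows a provisioning system to inspect the desired state before it touches any machine.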

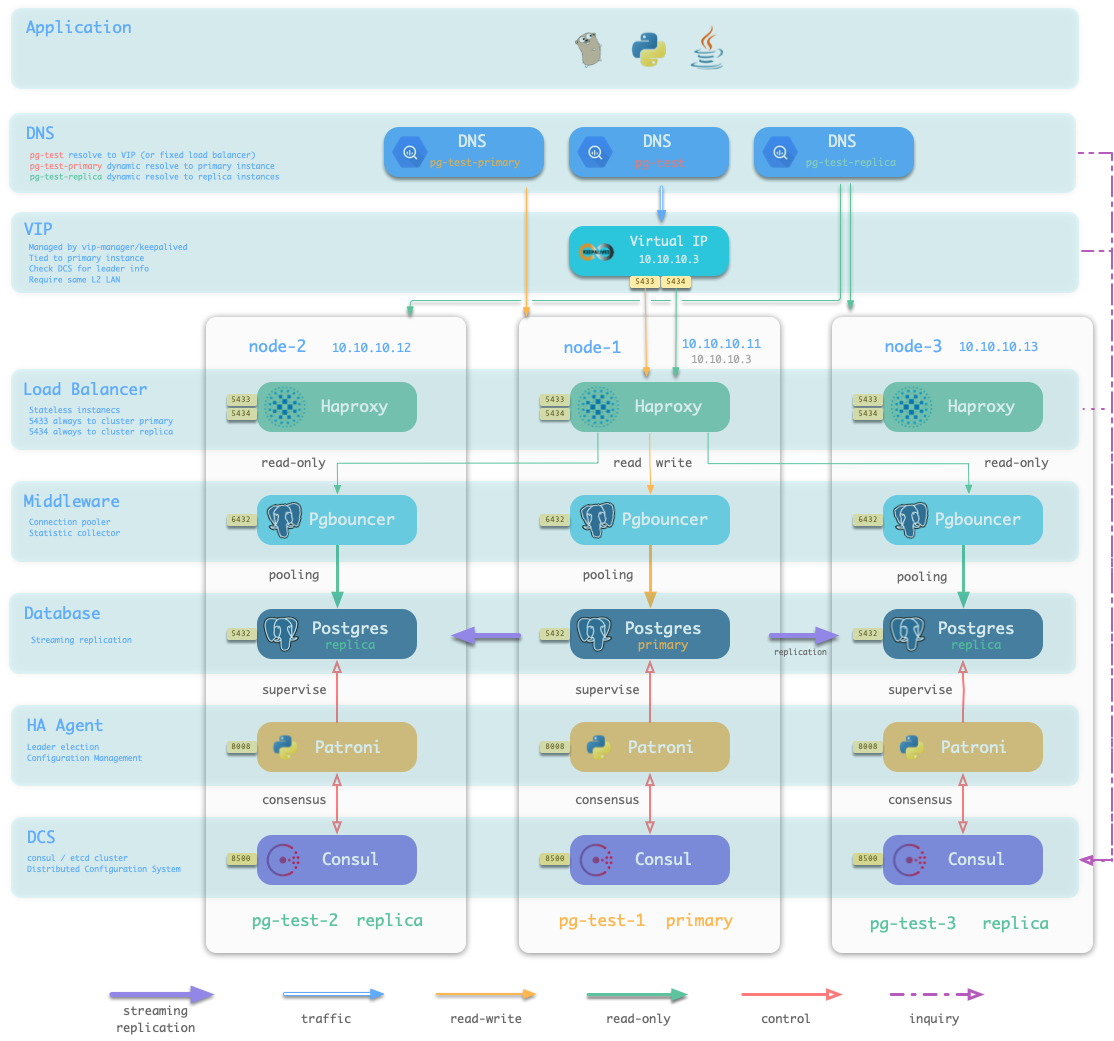

When the database provisioning script ./pgsql.yml is executed, the provisioning system creates the PostgreSQL cluster pg-test, with one primary and two replicas, on the three machines 10.10.10.11, 10.10.10.12, and 10.10.10.13, as defined in the manifest. It also creates a user and a database, both named test. In addition, Pigsty binds the requested VIP 10.10.10.3 to the cluster's primary. The resulting structure is shown in the figure above.
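Once the cluster exists, a client can reach it with an ordinary PostgreSQL connection URL. The sketch below builds such URLs from the values in the manifest (user, password, and database `test`, VIP 10.10.10.3, primary 10.10.10.11). The helper `pg_url` is hypothetical, and the ports are assumptions: 5432 is PostgreSQL's default port, and 6432 is assumed here for pgbouncer. Check both against your own deployment.

```python
from urllib.parse import quote

# Values taken from the pg-test manifest.
VIP_ADDRESS = "10.10.10.3"       # virtual IP bound to the primary
PRIMARY_ADDRESS = "10.10.10.11"  # member declared with pg_role: primary

# Assumed ports, not stated in the manifest.
POSTGRES_PORT = 5432   # PostgreSQL default port
PGBOUNCER_PORT = 6432  # assumed pgbouncer port


def pg_url(host, port, user="test", password="test", database="test"):
    """Build a libpq-style connection URL, percent-encoding each credential."""
    return (
        f"postgres://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(database, safe='')}"
    )


if __name__ == "__main__":
    # Through the VIP, which follows the primary.
    print(pg_url(VIP_ADDRESS, POSTGRES_PORT))
    # Directly to the primary, through the connection pool.
    print(pg_url(PRIMARY_ADDRESS, PGBOUNCER_PORT))
```

The resulting string can be passed to any libpq-based client, for example as the single argument to `psql`.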

The user is able to define not only the database cluster, but also the entire infrastructure.

Pigsty implements a complete representation of the database runtime environment with 154 variables.

For the full list of configurable items, refer to the Configuration Guide.

A provisioning solution is usually responsible only for creating the cluster. Once the cluster exists, day-to-day management should be handled by a control platform.

Pigsty does not currently include a control platform component. Instead, it provides a simple script for reclaiming and destroying resources, which can also be used to update and manage them, although this is not strictly the job of a provisioning solution.
