Architecture

A Pigsty deployment is architecturally divided into two parts.

- Infrastructure: deployed on meta nodes; provides basic services such as monitoring, DNS, NTP, DCS, and Yum repositories.

- [Database Cluster](#Database Cluster) (PgSQL): deployed on database nodes; provides database services to the outside world as a cluster.

Also, the nodes (physical machines, virtual machines, Pods) used for deployment are divided into two types.

- Meta node (Meta): hosts the infrastructure and executes control logic. Each Pigsty deployment requires at least one meta node.

- [Database Node](#Database Node) (Node): used to deploy database clusters/instances. Nodes and database instances correspond one-to-one.

Sample sandbox

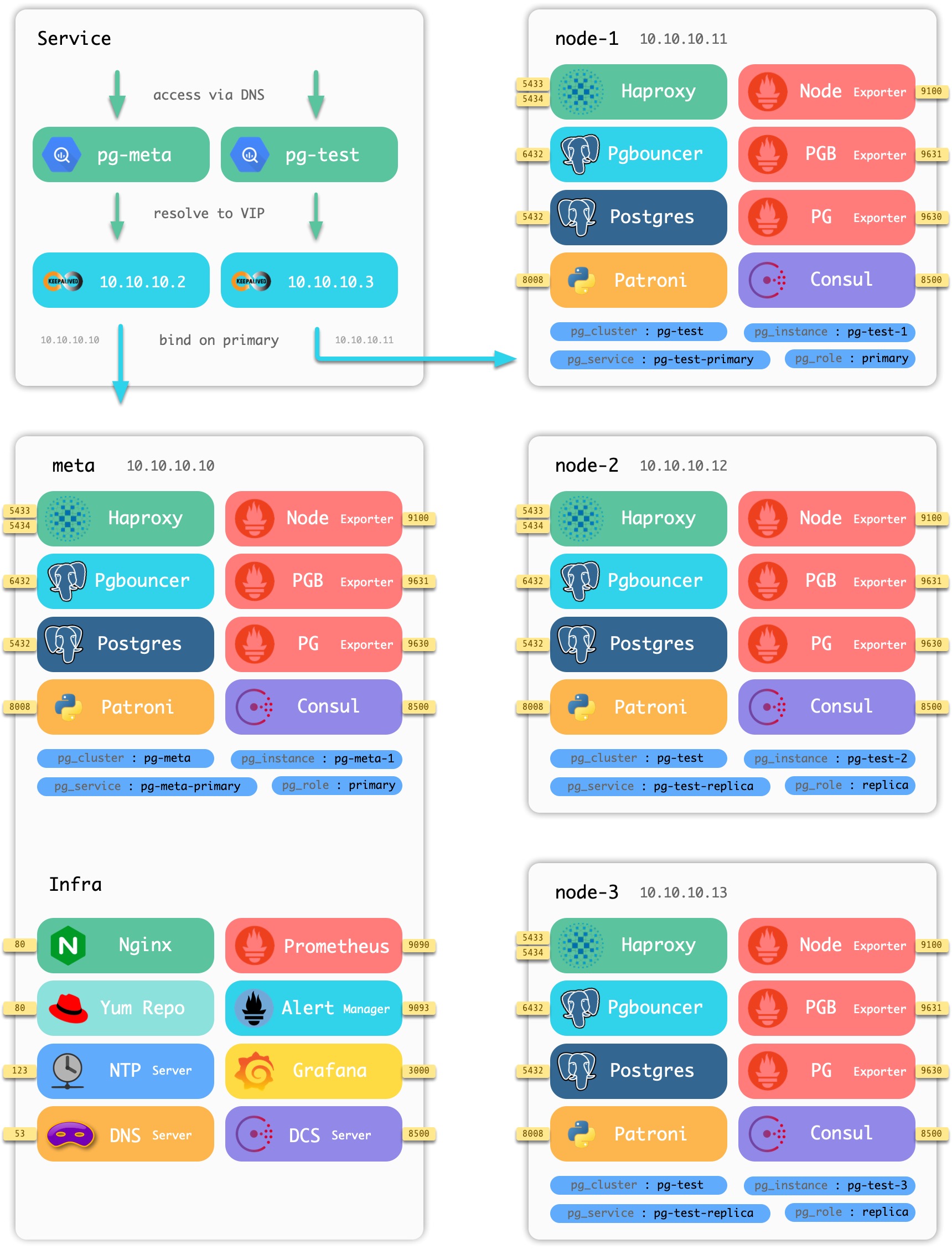

Using the four-node sandbox environment that comes with Pigsty as an example, the distribution of components on the nodes is shown in the following figure.

Figure: Nodes and components contained in the Pigsty sandbox

The sandbox consists of one [meta node](#meta node) and four [database nodes](#database nodes) (the meta node doubles as a database node), hosting one set of infrastructure and two [database clusters](#database clusters). meta is the meta node: it hosts the infrastructure components and also serves as an ordinary database node running the single-primary database cluster pg-meta. node-1, node-2, and node-3 are ordinary database nodes running the database cluster pg-test.
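The sandbox layout described above can be written down as a small data structure. This is only an illustrative sketch: the dictionary shape and the field names `roles` and `cluster` are assumptions made for this example and are not Pigsty's actual configuration schema.

```python
# Hypothetical inventory of the four-node sandbox.
# Field names ("roles", "cluster") are illustrative, not Pigsty's config format.
SANDBOX = {
    "meta":   {"roles": {"meta", "database"}, "cluster": "pg-meta"},
    "node-1": {"roles": {"database"}, "cluster": "pg-test"},
    "node-2": {"roles": {"database"}, "cluster": "pg-test"},
    "node-3": {"roles": {"database"}, "cluster": "pg-test"},
}


def clusters(inventory):
    """Group node names by the database cluster they host."""
    grouped = {}
    for node, spec in inventory.items():
        grouped.setdefault(spec["cluster"], []).append(node)
    return grouped


def meta_nodes(inventory):
    """Return the nodes that carry the meta (infrastructure) role."""
    return [node for node, spec in inventory.items() if "meta" in spec["roles"]]
```

Grouping by cluster yields two clusters (pg-meta with one node, pg-test with three), and exactly one node carries the meta role while still counting as a database node.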

Infrastructure

Each Pigsty [deployment](. /… /deploy/) requires some infrastructure to make the whole system work properly.

Infrastructure is usually handled by a professional Ops team or cloud vendor, but Pigsty, as an out-of-the-box product solution, integrates the basic infrastructure into the provisioning solution.

- DNS infrastructure: Dnsmasq (some requests are forwarded to Consul DNS for processing)

- Time infrastructure: NTP

- Monitoring infrastructure: Prometheus

- Alerting infrastructure: Alertmanager

- Visualization infrastructure: Grafana

- Local repository infrastructure: Yum/Nginx

- Distributed Configuration Store (DCS): etcd/consul

- Pigsty infrastructure: the MetaDB meta-database, the management component Ansible, timed tasks, and other advanced feature components.

The infrastructure is deployed on meta nodes. An environment contains one or more meta nodes for infrastructure deployment.

All infrastructure components except the Distributed Configuration Store (DCS) are deployed as independent replicas. If there are multiple meta nodes, the DCS instances (etcd/consul) on them act together as the DCS server.

Metanodes

In each environment, Pigsty requires at least one meta node, which acts as the control center for the entire environment. The meta node is responsible for various administrative tasks: saving state, managing configuration, initiating tasks, collecting metrics, and so on. The infrastructure components of the entire environment (Nginx, Grafana, Prometheus, Alertmanager, NTP, DNS nameserver, and DCS) are deployed on the meta node.

The meta node is also used to deploy the DCS (Consul or etcd); alternatively, users can use an existing external DCS cluster. If the DCS is deployed on meta nodes, three meta nodes are recommended in production to guarantee the availability of the DCS service. Infrastructure components other than the DCS are deployed as peer replicas on all meta nodes. At least 1 meta node is required; 3 are recommended, and more than 5 are not recommended.
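The recommendation of three meta nodes follows from majority-quorum arithmetic: Consul and etcd both rely on Raft consensus, which needs a majority of servers to stay available. The sketch below only illustrates that arithmetic; the function names are made up for this example.

```python
def quorum(servers: int) -> int:
    """Smallest majority of a DCS server group of the given size."""
    if servers < 1:
        raise ValueError("at least one DCS server is required")
    return servers // 2 + 1


def tolerated_failures(servers: int) -> int:
    """Number of servers that can fail while a majority is still reachable."""
    return servers - quorum(servers)


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(n, "servers: quorum", quorum(n), "tolerates", tolerated_failures(n))
```

A single meta node tolerates no DCS failure, three tolerate one, and five tolerate two. An even count such as four tolerates no more failures than three, which is why odd sizes are the usual choice.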

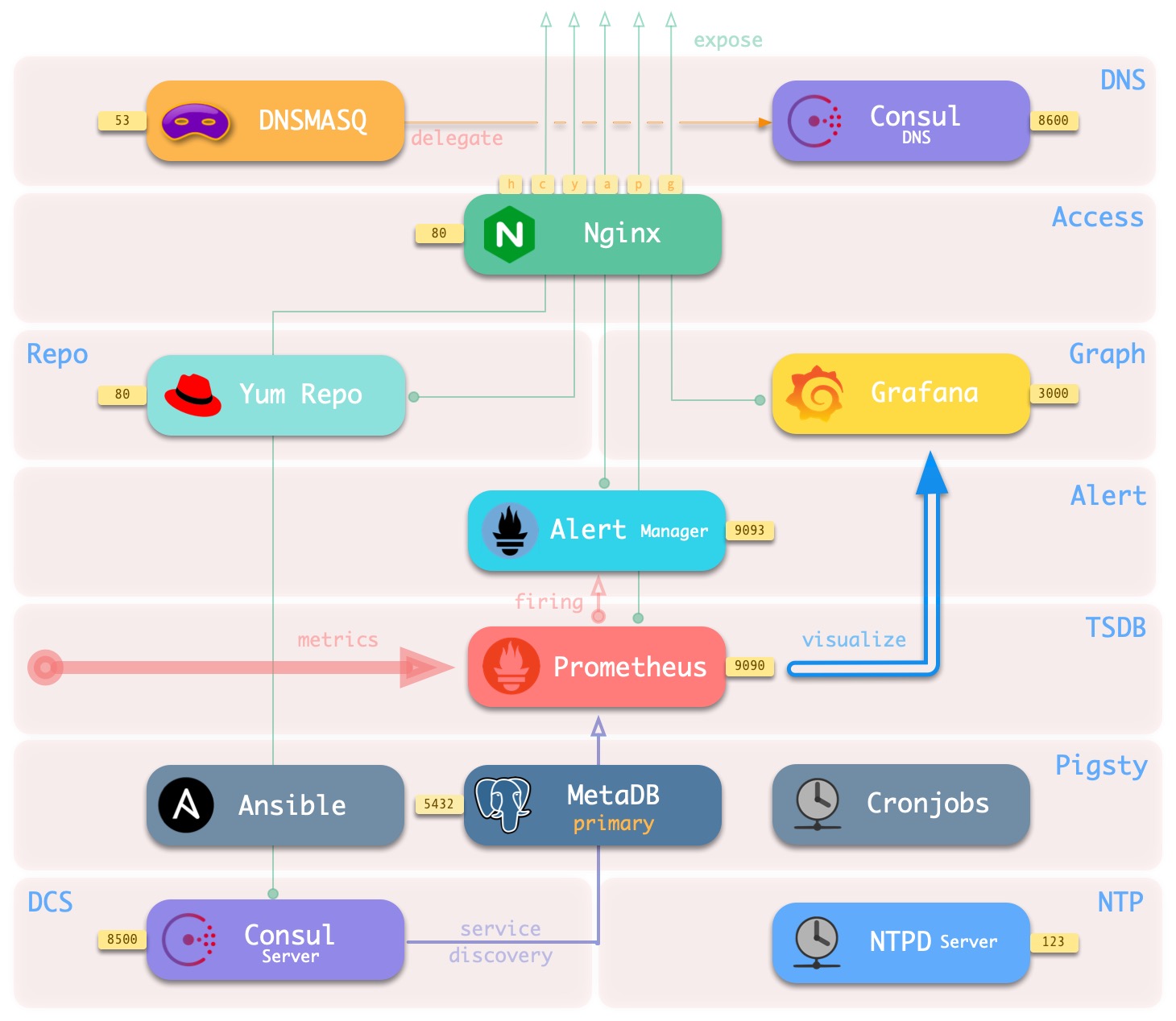

The services running on the meta-nodes are shown below.

| Component | Port | Default domain | Description |
|---|---|---|---|
| Grafana | 3000 | g.pigsty | Pigsty monitoring system GUI |
| Prometheus | 9090 | p.pigsty | Time-series database for monitoring |
| AlertManager | 9093 | a.pigsty | Alert aggregation and management component |
| Consul | 8500 | c.pigsty | Distributed configuration management, service discovery |
| Consul DNS | 8600 | - | DNS service provided by Consul |
| Nginx | 80 | pigsty | Entry proxy for all services |
| Yum Repo | 80 | yum.pigsty | Local Yum repository |
| Haproxy Index | 80 | h.pigsty | Access proxy for all Haproxy admin pages |
| NTP | 123 | n.pigsty | NTP time server used uniformly by the environment |
| Dnsmasq | 53 | - | DNS name resolution server used by the environment |

The infrastructure architecture deployed on the meta node is shown in the following figure.

The main interactions are as follows.

- Dnsmasq provides DNS resolution within the environment (optional; an existing nameserver can be used instead).
  - Some DNS queries are **forwarded** to Consul DNS.

- Nginx exposes all web services externally and routes requests to them by domain name.

- Yum Repo is the default server of Nginx and lets all nodes in the environment install software offline.

- Grafana is the front end of the Pigsty monitoring system, used to visualize data from Prometheus and the CMDB.

- Prometheus is the time-series database for monitoring.
  - By default, Prometheus discovers all exporters to scrape from Consul and attaches identity information to them.
  - Prometheus pulls monitoring metrics from the exporters, precomputes and processes them, and stores them in its own TSDB.
  - Prometheus evaluates alerting rules and sends alert events to Alertmanager for processing.

- Consul Server stores the DCS state, reaches consensus, and serves metadata queries.

- The NTP service synchronizes the time of all nodes in the environment (optionally, an external NTP service can be used).

- Pigsty-related components:
  - Ansible, for executing playbooks and initiating control
  - MetaDB, for supporting various advanced features (it is also a standard database cluster)
  - Timed task controller (backup, cleanup, statistics, inspection; an advanced feature not yet added)
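The domain-based routing performed by Nginx can be pictured as a lookup from the requested host name to a backend, with Yum Repo as the fallback. The sketch below is a plain Python model of that idea using the default domains and ports from the service table; it is not the Nginx configuration Pigsty generates, and the names `UPSTREAMS`, `DEFAULT_SERVER`, and `route` are hypothetical.

```python
# Illustrative model of host-based routing; not an actual Nginx config.
# Domains and ports are the defaults listed in the meta node service table.
UPSTREAMS = {
    "g.pigsty": ("Grafana", 3000),
    "p.pigsty": ("Prometheus", 9090),
    "a.pigsty": ("AlertManager", 9093),
    "c.pigsty": ("Consul", 8500),
}

# Yum Repo is the default server, so unmatched hosts fall through to it.
DEFAULT_SERVER = ("Yum Repo", 80)


def route(host_header: str):
    """Pick a backend from the Host header, ignoring case and any :port suffix."""
    name = host_header.split(":", 1)[0].strip().lower()
    return UPSTREAMS.get(name, DEFAULT_SERVER)
```

For example, a request for `g.pigsty` would be handed to Grafana on port 3000, while a request for an unlisted host name would be served by the local Yum repository.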

Postgres clusters

Databases in production environments are organized into clusters. A cluster is a logical entity consisting of a set of database instances linked by primary-replica replication. Each database cluster is a self-organizing business service unit consisting of at least one database instance.

Clusters are the basic business service units. The following diagram shows the replication topology in the sandbox environment: pg-meta-1 alone constitutes the database cluster pg-meta, while pg-test-1, pg-test-2, and pg-test-3 together constitute another logical cluster, pg-test.

    pg-meta-1
    (primary)

    pg-test-1 -------------> pg-test-2
    (primary)      |         (replica)
                   |
                   ^ -------> pg-test-3
                              (replica)

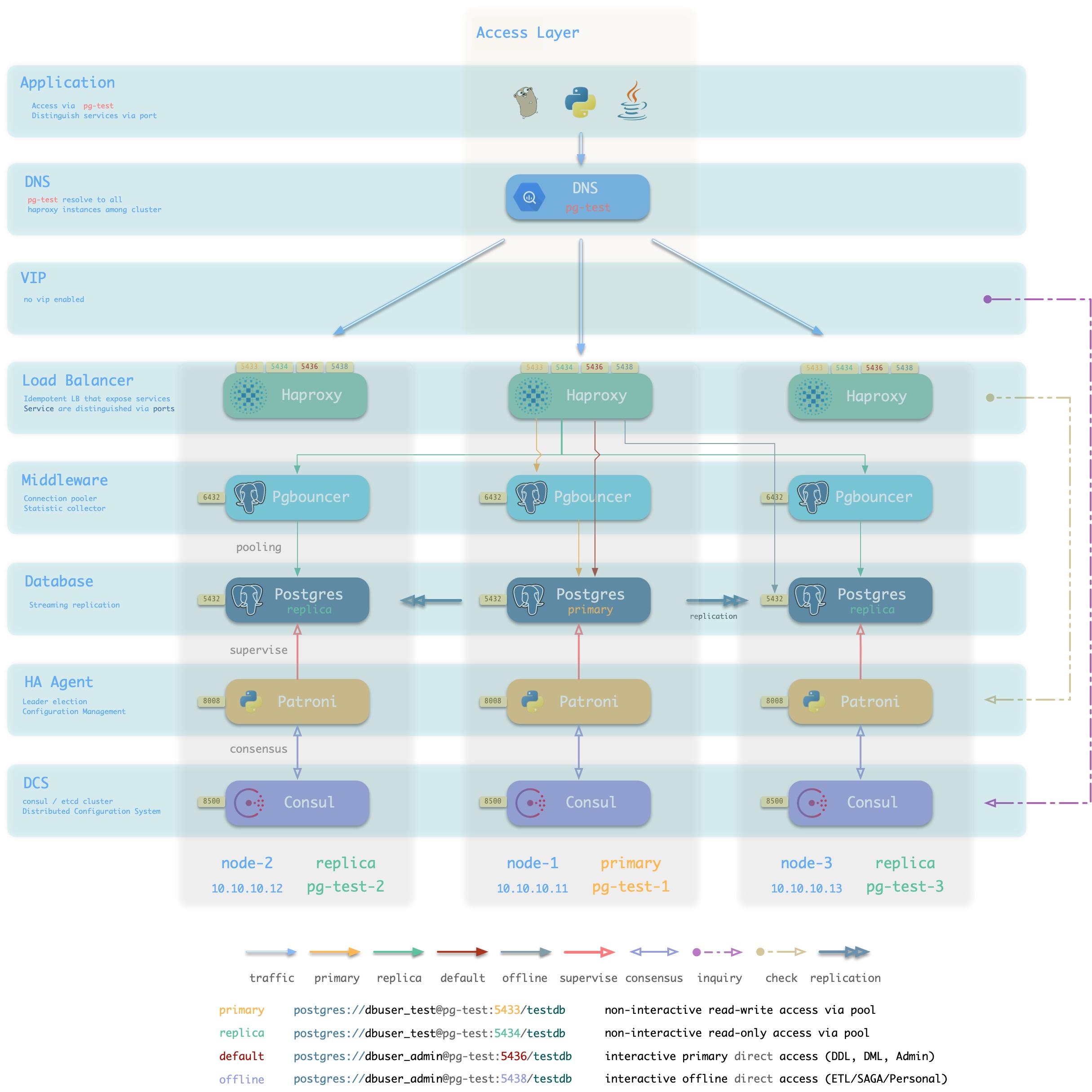

The following figure rearranges the location of related components in the pg-test cluster from the perspective of the database cluster.

Figure: Looking at the architecture from the logical view of a database cluster ([standard access scenario](. /provision/access/#dns–haproxy))

Pigsty is a database provisioning solution that creates highly available database clusters on demand. Pigsty performs failover automatically, leaving business-side read-only traffic unaffected; the impact on read-write traffic usually lasts from a few seconds to tens of seconds, depending on the specific configuration and load.

In Pigsty, database instances are interchangeable ("idempotent") in use, and each [database service](… /provision/service/) is exposed in a NodePort-like manner. By default, connecting to port 5433 on any instance reaches the cluster primary, and connecting to port 5434 on any instance reaches a replica. Users can also combine different access methods at the same time; for details, see [Database Access](. /provision/access).
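Because every instance exposes the same service ports, a client only needs the address of any cluster member plus the port of the service it wants. The sketch below builds a standard PostgreSQL connection URI from that idea. The IP address, user name, and database name in the usage note are placeholders, and `SERVICE_PORTS` and `service_uri` are hypothetical helper names.

```python
# Default service ports described above: 5433 -> primary, 5434 -> replica.
SERVICE_PORTS = {"primary": 5433, "replica": 5434}


def service_uri(any_instance_host: str, service: str,
                dbname: str = "postgres", user: str = "postgres") -> str:
    """Build a PostgreSQL connection URI for a service via any cluster member."""
    try:
        port = SERVICE_PORTS[service]
    except KeyError:
        raise ValueError(f"unknown service: {service!r}") from None
    return f"postgresql://{user}@{any_instance_host}:{port}/{dbname}"
```

With a placeholder member address, `service_uri("10.10.10.11", "primary")` and `service_uri("10.10.10.12", "primary")` differ only in the host part, yet under the default setup both would lead to the same cluster primary.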

Database Nodes

A database node is responsible for running a database instance. Pigsty always uses exclusive deployment: each node hosts exactly one database instance, so a node and its instance uniquely identify each other (by IP address and instance name).

The services typically running on a database node are shown below.

| Component | Port | Description |
|---|---|---|
| Postgres | 5432 | Postgres database service |
| Pgbouncer | 6432 | Pgbouncer connection pooling service |
| Patroni | 8008 | Patroni high-availability component |
| Consul | 8500 | Local agent of Consul, the distributed configuration management and service discovery component |
| Haproxy Primary | 5433 | Cluster read-write service proxy (primary connection pool) |
| Haproxy Replica | 5434 | Cluster read-only service proxy (replica connection pool) |
| Haproxy Default | 5436 | Cluster primary direct-connection service (for management and DDL/DML changes) |
| Haproxy Offline | 5438 | Cluster offline read service (direct connection to offline instances, for ETL and interactive queries) |
| Haproxy `<Service>` | 543x | Additional custom services provided by the cluster are assigned ports in sequence |
| Haproxy Admin | 9101 | Haproxy monitoring metrics and administration page |
| PG Exporter | 9630 | Postgres monitoring metrics exporter |
| PGBouncer Exporter | 9631 | Pgbouncer monitoring metrics exporter |
| Node Exporter | 9100 | Machine node monitoring metrics exporter |
| Consul DNS | 8600 | DNS service provided by Consul |
| vip-manager | - | Binds the VIP to the cluster primary |

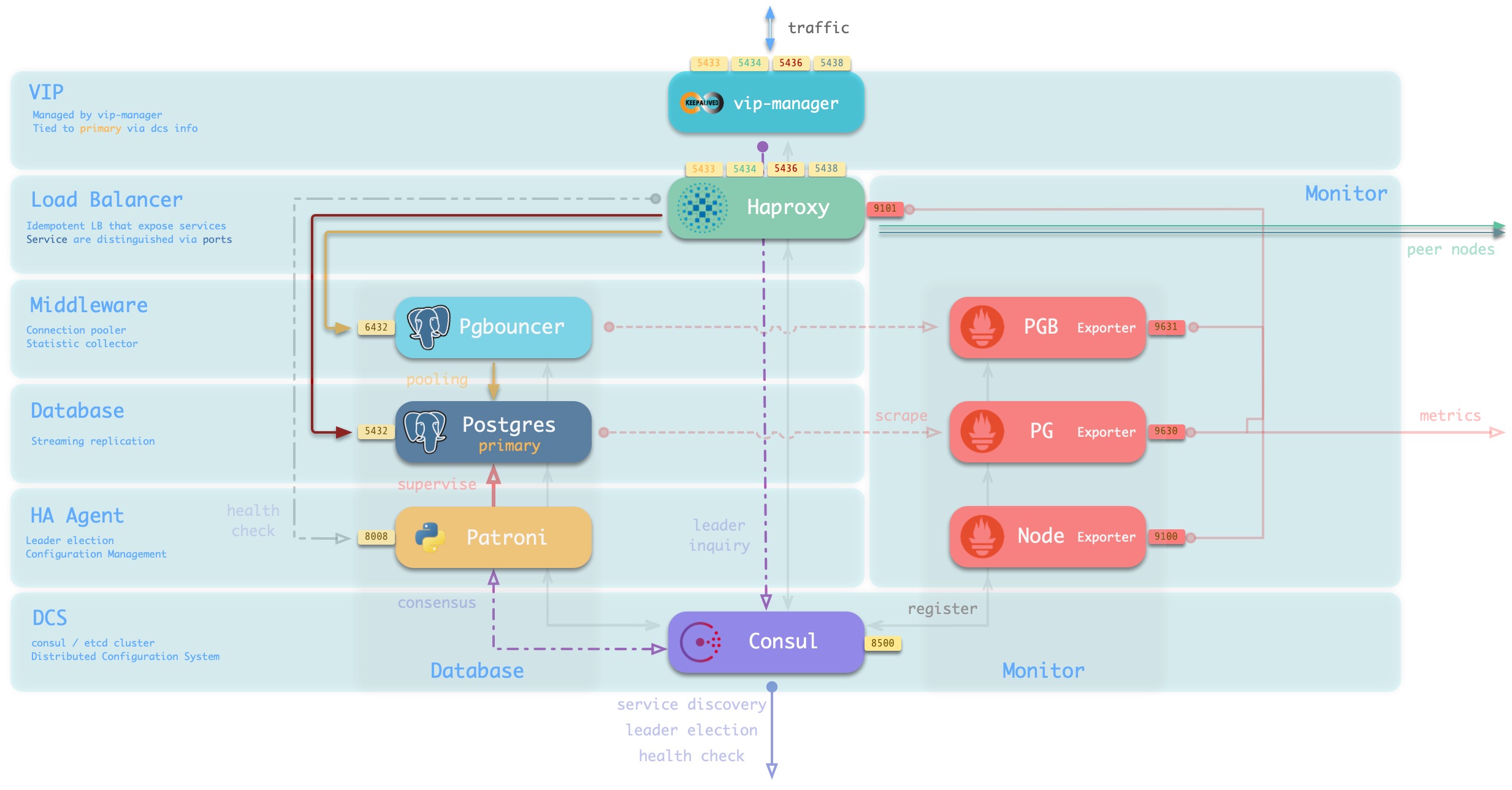

The main interactions are as follows.

- vip-manager obtains cluster primary information by querying Consul and binds the cluster-specific L2 VIP to the primary node (default access scheme).

- Haproxy is the database traffic entry point. It exposes services to the outside world and uses different ports (543x) to distinguish between services.
  - Haproxy port 9101 exposes Haproxy's internal monitoring metrics and provides an Admin interface for controlling traffic.
  - Haproxy port 5433 points to the connection pool port 6432 of the cluster primary by default.
  - Haproxy port 5434 points to the connection pool port 6432 of the cluster replicas by default.
  - Haproxy port 5436 points directly to port 5432 of the cluster primary by default.
  - Haproxy port 5438 points directly to port 5432 of the cluster offline instance by default.

- Pgbouncer pools database connections, buffers failure shocks, and exposes additional metrics.
  - Production services (high-frequency, non-interactive; 5433/5434) must be accessed through Pgbouncer.
  - Directly connected services (management and ETL; 5436/5438) must bypass Pgbouncer and connect directly.

- Postgres provides the actual database service and forms a primary-replica database cluster via streaming replication.

- Patroni supervises the Postgres service and is responsible for primary-replica election and switchover, health checks, and configuration management.
  - Patroni uses Consul to reach consensus as the basis for cluster leader election.

- Consul Agent distributes configuration, accepts service registrations, performs service discovery, and serves DNS queries.
  - All process services that use a port are registered in Consul.

- PG Exporter, PGB Exporter, and Node Exporter **expose** database, connection pool, and node monitoring metrics, respectively.
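The default Haproxy port mapping listed above can be summarized as a small routing table. The sketch below is only a model of those four defaults, useful for checking which services pass through Pgbouncer; the names `Route`, `DEFAULT_ROUTES`, and `uses_pgbouncer` are hypothetical, and a real deployment may define additional 543x services.

```python
from typing import NamedTuple

PGBOUNCER_PORT = 6432  # connection pool port on the database node
POSTGRES_PORT = 5432   # Postgres port on the database node


class Route(NamedTuple):
    target_role: str   # which instance(s) receive the traffic
    backend_port: int  # port on the target instance


# Default Haproxy service ports and where they point, as described above.
DEFAULT_ROUTES = {
    5433: Route("primary", PGBOUNCER_PORT),
    5434: Route("replica", PGBOUNCER_PORT),
    5436: Route("primary", POSTGRES_PORT),
    5438: Route("offline", POSTGRES_PORT),
}


def uses_pgbouncer(haproxy_port: int) -> bool:
    """True if traffic on this Haproxy port goes through the connection pool."""
    return DEFAULT_ROUTES[haproxy_port].backend_port == PGBOUNCER_PORT
```

In this model the production services (5433/5434) are pooled, while the management and ETL services (5436/5438) connect to Postgres directly, matching the rule stated for Pgbouncer.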

Nodes interact with meta-nodes

Taking an environment consisting of a single [meta-node](# meta-node) and a single [database node](# database node) as an example, the architecture is shown in the following figure.

Figure: Single meta-node and single database node

The interactions between meta nodes and database nodes mainly include the following.

- The domain names of database clusters and nodes rely on the meta node's nameserver for resolution.

- Software installation on database nodes uses the Yum Repo on the meta node.

- Monitoring metrics of database clusters and nodes are collected by Prometheus on the meta node.

- Pigsty initiates management of the database nodes from the meta node:
  - cluster creation, scaling out and in; user, service, and HBA changes; log collection, garbage cleanup, backup, inspection, etc.

- The Consul agent on the database node synchronizes locally registered services to the DCS on the meta node and proxies state reads and writes.

- Database nodes synchronize time from the meta node (or another NTP server).