QCloud VPC Deployment

Deployment with cloud provider VPS

This example deploys Pigsty on a Tencent Cloud VPC.

Resource Preparation

Provision Virtual Machines

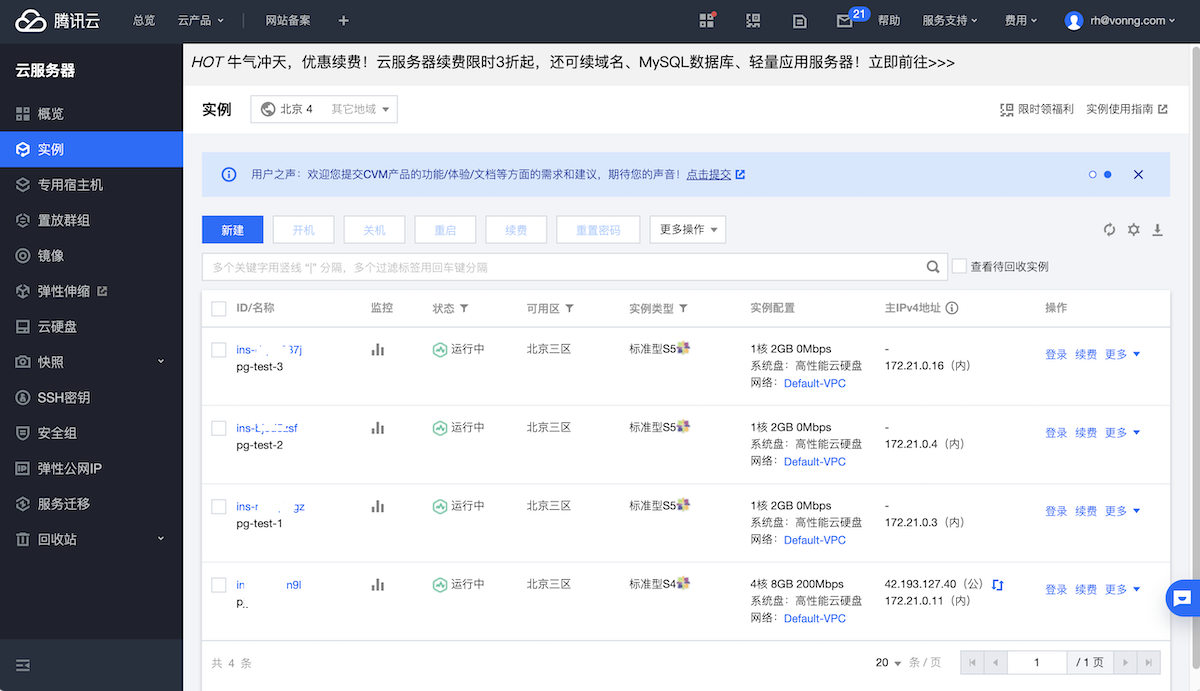

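Buy a few virtual machines, as shown in the figure above. The one at 172.21.0.11 serves as the meta node and has a public IP address; the other three are database nodes. Ordinary 1-core, 1 GB instances are sufficient.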

Configure SSH Remote Login

Assume our admin user is vonng (that's me!). First, set up passwordless SSH access from the meta node to the other three nodes.

```bash
# vonng@172.21.0.11 # meta
ssh-copy-id root@172.21.0.3   # pg-test-1
ssh-copy-id root@172.21.0.4   # pg-test-2
ssh-copy-id root@172.21.0.16  # pg-test-3
scp ~/.ssh/id_rsa.pub root@172.21.0.3:/tmp/
scp ~/.ssh/id_rsa.pub root@172.21.0.4:/tmp/
scp ~/.ssh/id_rsa.pub root@172.21.0.16:/tmp/
ssh root@172.21.0.3 'useradd vonng; mkdir -m 700 -p /home/vonng/.ssh; mv /tmp/id_rsa.pub /home/vonng/.ssh/authorized_keys; chown -R vonng /home/vonng; chmod 0600 /home/vonng/.ssh/authorized_keys;'
ssh root@172.21.0.4 'useradd vonng; mkdir -m 700 -p /home/vonng/.ssh; mv /tmp/id_rsa.pub /home/vonng/.ssh/authorized_keys; chown -R vonng /home/vonng; chmod 0600 /home/vonng/.ssh/authorized_keys;'
ssh root@172.21.0.16 'useradd vonng; mkdir -m 700 -p /home/vonng/.ssh; mv /tmp/id_rsa.pub /home/vonng/.ssh/authorized_keys; chown -R vonng /home/vonng; chmod 0600 /home/vonng/.ssh/authorized_keys;'
```
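The per-node commands above repeat the same three steps, so they can be folded into a loop. This is a minimal sketch, assuming the meta node already has a key pair at `~/.ssh/id_rsa.pub`; `ADMIN_USER`, `DB_NODES`, `bootstrap_node` and `bootstrap_all` are hypothetical names introduced here, with values copied from this example.

```bash
# Sketch: create the admin user on every database node and install the meta
# node's public key for it. ADMIN_USER and DB_NODES are assumptions taken from
# this example (hypothetical variable names); adjust them to your environment.
ADMIN_USER="vonng"
DB_NODES=(172.21.0.3 172.21.0.4 172.21.0.16)   # pg-test-1, pg-test-2, pg-test-3

# Run the three bootstrap steps against one node.
bootstrap_node() {
  local ip="$1"
  # One-time root login with the root password, so later steps need no prompt.
  ssh-copy-id "root@${ip}" || return 1
  # Ship the public key, then create the user and install the key for it.
  scp ~/.ssh/id_rsa.pub "root@${ip}:/tmp/" || return 1
  ssh "root@${ip}" "useradd ${ADMIN_USER}; \
mkdir -m 700 -p /home/${ADMIN_USER}/.ssh; \
mv /tmp/id_rsa.pub /home/${ADMIN_USER}/.ssh/authorized_keys; \
chown -R ${ADMIN_USER} /home/${ADMIN_USER}; \
chmod 0600 /home/${ADMIN_USER}/.ssh/authorized_keys"
}

# Bootstrap all nodes, stopping at the first failure.
bootstrap_all() {
  local ip
  for ip in "${DB_NODES[@]}"; do
    bootstrap_node "$ip" || { echo "bootstrap failed on ${ip}" >&2; return 1; }
  done
}
```

Paste the functions into a shell on the meta node and run `bootstrap_all`. Stopping at the first failure keeps a half-configured node from going unnoticed.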

Then grant this user passwordless sudo:

```bash
ssh root@172.21.0.3 "echo '%vonng ALL=(ALL) NOPASSWD: ALL' > /etc/sudoers.d/vonng"
ssh root@172.21.0.4 "echo '%vonng ALL=(ALL) NOPASSWD: ALL' > /etc/sudoers.d/vonng"
ssh root@172.21.0.16 "echo '%vonng ALL=(ALL) NOPASSWD: ALL' > /etc/sudoers.d/vonng"
```
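A syntax error in a file under `/etc/sudoers.d` can leave sudo unusable, so it is safer to check the rule with `visudo -cf` before installing it, and to give the drop-in the conventional read-only mode 0440. The sketch below does that on each node. The `%` prefix grants the rule to the group `vonng`, which `useradd` creates by default on CentOS. `ADMIN_USER`, `DB_NODES`, `sudoers_cmd`, `grant_sudo_all` and the `/tmp/sudoers.<user>` staging path are hypothetical choices for illustration.

```bash
# Sketch: stage the sudoers rule, validate it, and only then install it with
# mode 0440. Names and the /tmp staging path are hypothetical.
ADMIN_USER="vonng"
DB_NODES=(172.21.0.3 172.21.0.4 172.21.0.16)

# Print the remote command for one user. The remote shell exits with the
# status of the write/validate/install chain, after cleaning up the temp file.
sudoers_cmd() {
  local user="$1"
  local tmp="/tmp/sudoers.${user}"
  printf "echo '%%%s ALL=(ALL) NOPASSWD: ALL' > %s && visudo -cf %s && install -m 0440 -o root -g root %s /etc/sudoers.d/%s; rc=\$?; rm -f %s; exit \$rc" \
    "$user" "$tmp" "$tmp" "$tmp" "$user" "$tmp"
}

# Apply the rule on every node as root, stopping at the first failure.
grant_sudo_all() {
  local ip
  for ip in "${DB_NODES[@]}"; do
    ssh "root@${ip}" "$(sudoers_cmd "$ADMIN_USER")" || return 1
  done
}
```

Running `sudoers_cmd vonng` on its own prints the exact command that would be sent, which is a cheap way to review it before calling `grant_sudo_all`.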

```bash
# Verify that the configuration works
ssh 172.21.0.3 'sudo ls'
ssh 172.21.0.4 'sudo ls'
ssh 172.21.0.16 'sudo ls'
```
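If key login is not working, ssh falls back to asking for a password, which stalls a scripted check. A non-interactive variant can use `ssh -o BatchMode=yes` (fail instead of prompting for a password) together with `sudo -n` (fail instead of prompting for a sudo password). The sketch below reports OK or FAIL per node and returns non-zero if any node fails; `DB_NODES` and `check_nodes` are hypothetical names, and the 5-second connect timeout is an arbitrary choice.

```bash
# Sketch: verify key-based login and passwordless sudo on every node without
# ever blocking on a prompt. DB_NODES and check_nodes are hypothetical names.
DB_NODES=(172.21.0.3 172.21.0.4 172.21.0.16)

check_nodes() {
  local ip failed=0
  for ip in "${DB_NODES[@]}"; do
    # BatchMode=yes: no password prompt; sudo -n: no sudo password prompt.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$ip" 'sudo -n true'; then
      echo "OK   ${ip}"
    else
      echo "FAIL ${ip}"
      failed=1
    fi
  done
  return "$failed"
}
```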

Download the Project

```bash
# Clone the code from GitHub
git clone https://github.com/Vonng/pigsty && cd pigsty
# If you cannot reach GitHub, download the source tarball from the Pigsty CDN instead
curl http://pigsty-1304147732.cos.accelerate.myqcloud.com/latest/pigsty.tar.gz -o pigsty.tgz && tar -xf pigsty.tgz && cd pigsty
```

Download the Offline Package

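```bash
# Download pkg.tgz from the GitHub Release page:
# https://github.com/Vonng/pigsty
# If you cannot reach GitHub, download the offline package from the Pigsty CDN instead
curl http://pigsty-1304147732.cos.accelerate.myqcloud.com/latest/pkg.tgz -o files/pkg.tgz
# Extract the offline package to the designated location on the meta node (may require sudo),
# backing up any existing /www/pigsty first
[ -d /www/pigsty ] && mv -f /www/pigsty /www/pigsty-backup
mkdir -p /www/pigsty
tar -xf files/pkg.tgz --strip-components=1 -C /www/pigsty/
```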
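The backup-and-extract steps can be wrapped in a function that refuses to run when the package is missing and never overwrites an earlier backup. This is a minimal sketch; `extract_pkg` and the timestamped backup suffix are hypothetical choices, and it assumes the tarball has a single top-level directory, which is what `--strip-components=1` implies.

```bash
# Sketch: unpack the offline package into the local repo directory, keeping a
# timestamped backup of any previous content. extract_pkg is a hypothetical name.
extract_pkg() {
  local pkg="${1:-files/pkg.tgz}" dest="${2:-/www/pigsty}"
  [ -f "$pkg" ] || { echo "package not found: $pkg" >&2; return 1; }
  if [ -d "$dest" ]; then
    # Move the old repo aside instead of deleting it.
    mv "$dest" "${dest}-backup-$(date +%Y%m%d%H%M%S)" || return 1
  fi
  mkdir -p "$dest" || return 1
  # Drop the tarball's single top-level directory so files land in $dest.
  tar -xf "$pkg" --strip-components=1 -C "$dest"
}
```

Run it as a user that can write to `/www`, for example `sudo bash -c "$(declare -f extract_pkg); extract_pkg $PWD/files/pkg.tgz /www/pigsty"`; passing an absolute package path avoids surprises if the working directory changes.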

Adjust the Configuration

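We can start from the Pigsty sandbox configuration file. Since all machines are ordinary low-spec VMs, no substantive configuration changes are needed; only the connection parameters and node information have to change. In short, just change the IP addresses!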

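Now replace every sandbox IP address with the actual IP address in the cloud environment. (If you use L2 VIPs, the VIPs must also be replaced with suitable addresses in the VPC subnet.)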

| Role | Sandbox IP | VM IP |
|---|---|---|
| Meta node | 10.10.10.10 | 172.21.0.11 |
| Database node 1 (pg-test-1) | 10.10.10.11 | 172.21.0.3 |
| Database node 2 (pg-test-2) | 10.10.10.12 | 172.21.0.4 |
| Database node 3 (pg-test-3) | 10.10.10.13 | 172.21.0.16 |
| pg-meta VIP | 10.10.10.2 | 172.21.0.8 |
| pg-test VIP | 10.10.10.3 | 172.21.0.9 |

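Edit the configuration file pigsty.yml. If the VMs all have similar specs, you usually only need to change the IP addresses. Note in particular that the sandbox connects through SSH aliases (such as meta and node-1): remove every ansible_host setting so that Ansible connects to the target nodes directly by IP address. The same pass also switches the VIP interface from eth1 to eth0 and the VIP netmask from /8 to /24 to match the VPC network.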

```bash
# Replace sandbox addresses with VPC addresses, drop the SSH aliases, and
# switch the VIP interface/netmask (GNU sed; dots escaped to match literally)
sed -e 's/10\.10\.10\.10/172.21.0.11/g' \
    -e 's/10\.10\.10\.11/172.21.0.3/g' \
    -e 's/10\.10\.10\.12/172.21.0.4/g' \
    -e 's/10\.10\.10\.13/172.21.0.16/g' \
    -e 's/10\.10\.10\.2\b/172.21.0.8/g' \
    -e 's/10\.10\.10\.3\b/172.21.0.9/g' \
    -e 's/, ansible_host: meta//g' \
    -e 's/ansible_host: meta//g' \
    -e 's/, ansible_host: node-[123]//g' \
    -e 's/vip_interface: eth1/vip_interface: eth0/g' \
    -e 's/vip_cidrmask: 8/vip_cidrmask: 24/g' \
    pigsty.yml > pigsty2.yml
mv pigsty.yml pigsty-backup.yml; mv pigsty2.yml pigsty.yml
```
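The substitutions can also be driven by the address mapping from the table, so that the table stays the single source of truth. In this sketch `IP_MAP`, `ip_sed_program` and `rewrite_inventory` are hypothetical names. Dots are written as `[.]` so they match literally, and `\b` word boundaries keep `10.10.10.2` from matching inside an address such as `10.10.10.20`; `\b`, `\|` and `\+` are GNU sed extensions, which CentOS provides.

```bash
# Sketch: keep the table's address mapping as data and generate the sed
# program from it. IP_MAP, ip_sed_program and rewrite_inventory are hypothetical.
IP_MAP=(
  "10.10.10.10 172.21.0.11"   # meta node
  "10.10.10.11 172.21.0.3"    # pg-test-1
  "10.10.10.12 172.21.0.4"    # pg-test-2
  "10.10.10.13 172.21.0.16"   # pg-test-3
  "10.10.10.2 172.21.0.8"     # pg-meta VIP
  "10.10.10.3 172.21.0.9"     # pg-test VIP
)

# Emit one substitution per mapping: literal dots and GNU \b word boundaries.
ip_sed_program() {
  local pair old new
  for pair in "${IP_MAP[@]}"; do
    read -r old new <<< "$pair"
    printf 's/\\b%s\\b/%s/g\n' "${old//./[.]}" "$new"
  done
}

# Rewrite SRC into DST: swap addresses, drop SSH aliases, and switch the
# VIP interface and netmask to the values used in this VPC example.
rewrite_inventory() {
  local src="$1" dst="$2"
  sed -e "$(ip_sed_program)" \
      -e 's/, ansible_host: \(meta\|node-[0-9]\+\)//g' \
      -e 's/ansible_host: meta//g' \
      -e 's/vip_interface: eth1/vip_interface: eth0/g' \
      -e 's/vip_cidrmask: 8/vip_cidrmask: 24/g' \
      "$src" > "$dst"
}
```

Usage: `rewrite_inventory pigsty.yml pigsty2.yml && mv pigsty.yml pigsty-backup.yml && mv pigsty2.yml pigsty.yml`.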

That's it?

Yes, the configuration file is done! Let's see exactly what changed:

```text
$ diff pigsty.yml pigsty-backup.yml
38c38
< hosts: {172.21.0.11: {}}
---
> hosts: {10.10.10.10: {ansible_host: meta}}
46c46
< 172.21.0.11: {pg_seq: 1, pg_role: primary}
---
> 10.10.10.10: {pg_seq: 1, pg_role: primary, ansible_host: meta}
109,111c109,111
< vip_address: 172.21.0.8 # virtual ip address
< vip_cidrmask: 24 # cidr network mask length
< vip_interface: eth0 # interface to add virtual ip
---
> vip_address: 10.10.10.2 # virtual ip address
> vip_cidrmask: 8 # cidr network mask length
> vip_interface: eth1 # interface to add virtual ip
120,122c120,122
< 172.21.0.3: {pg_seq: 1, pg_role: primary}
< 172.21.0.4: {pg_seq: 2, pg_role: replica}
< 172.21.0.16: {pg_seq: 3, pg_role: offline}
---
> 10.10.10.11: {pg_seq: 1, pg_role: primary, ansible_host: node-1}
> 10.10.10.12: {pg_seq: 2, pg_role: replica, ansible_host: node-2}
> 10.10.10.13: {pg_seq: 3, pg_role: offline, ansible_host: node-3}
147,149c147,149
< vip_address: 172.21.0.9 # virtual ip address
< vip_cidrmask: 24 # cidr network mask length
< vip_interface: eth0 # interface to add virtual ip
---
> vip_address: 10.10.10.3 # virtual ip address
> vip_cidrmask: 8 # cidr network mask length
> vip_interface: eth1 # interface to add virtual ip
326c326
< - 172.21.0.11 yum.pigsty
---
> - 10.10.10.10 yum.pigsty
329c329
< - 172.21.0.11
---
> - 10.10.10.10
393c393
< - server 172.21.0.11 iburst
---
> - server 10.10.10.10 iburst
417,430c417,430
< - 172.21.0.8 pg-meta # sandbox vip for pg-meta
< - 172.21.0.9 pg-test # sandbox vip for pg-test
< - 172.21.0.11 meta-1 # sandbox node meta-1 (node-0)
< - 172.21.0.3 node-1 # sandbox node node-1
< - 172.21.0.4 node-2 # sandbox node node-2
< - 172.21.0.16 node-3 # sandbox node node-3
< - 172.21.0.11 pigsty
< - 172.21.0.11 y.pigsty yum.pigsty
< - 172.21.0.11 c.pigsty consul.pigsty
< - 172.21.0.11 g.pigsty grafana.pigsty
< - 172.21.0.11 p.pigsty prometheus.pigsty
< - 172.21.0.11 a.pigsty alertmanager.pigsty
< - 172.21.0.11 n.pigsty ntp.pigsty
< - 172.21.0.11 h.pigsty haproxy.pigsty
---
> - 10.10.10.2 pg-meta # sandbox vip for pg-meta
> - 10.10.10.3 pg-test # sandbox vip for pg-test
> - 10.10.10.10 meta-1 # sandbox node meta-1 (node-0)
> - 10.10.10.11 node-1 # sandbox node node-1
> - 10.10.10.12 node-2 # sandbox node node-2
> - 10.10.10.13 node-3 # sandbox node node-3
> - 10.10.10.10 pigsty
> - 10.10.10.10 y.pigsty yum.pigsty
> - 10.10.10.10 c.pigsty consul.pigsty
> - 10.10.10.10 g.pigsty grafana.pigsty
> - 10.10.10.10 p.pigsty prometheus.pigsty
> - 10.10.10.10 a.pigsty alertmanager.pigsty
> - 10.10.10.10 n.pigsty ntp.pigsty
> - 10.10.10.10 h.pigsty haproxy.pigsty
442c442
< grafana_url: http://admin:admin@172.21.0.11:3000 # grafana url
---
> grafana_url: http://admin:admin@10.10.10.10:3000 # grafana url
478,480c478,480
< meta-1: 172.21.0.11 # you could use existing dcs cluster
< # meta-2: 172.21.0.3 # host which have their IP listed here will be init as server
< # meta-3: 172.21.0.4 # 3 or 5 dcs nodes are recommend for production environment
---
> meta-1: 10.10.10.10 # you could use existing dcs cluster
> # meta-2: 10.10.10.11 # host which have their IP listed here will be init as server
> # meta-3: 10.10.10.12 # 3 or 5 dcs nodes are recommend for production environment
692c692
< - host all all 172.21.0.11/32 md5
---
> - host all all 10.10.10.10/32 md5
```
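Reading the diff by eye works, but a scripted check catches anything a substitution missed. The sketch below fails if any `10.10.10.x` address or `ansible_host` alias from the sandbox is still present, printing the offending lines with their line numbers. `check_leftovers` is a hypothetical name, and the patterns mirror the sandbox values in the diff; `\b` in `grep -E` is a GNU grep extension.

```bash
# Sketch: make sure no sandbox addresses or SSH aliases remain in the inventory.
# check_leftovers is a hypothetical name; patterns mirror the sandbox values.
check_leftovers() {
  local file="${1:-pigsty.yml}"
  if grep -nE '\b10\.10\.10\.[0-9]+\b|ansible_host: (meta|node-[0-9]+)' "$file"; then
    echo "sandbox values remain in ${file}" >&2
    return 1
  fi
  echo "no sandbox values left in ${file}"
}
```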

Run the Playbooks

You can use the same sandbox initialization procedure to initialize the infrastructure and the database clusters.

Apart from the IP addresses, the output is identical to the sandbox's. See the reference output.
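Now that the ansible_host aliases are gone, it can help to confirm that Ansible reaches every host in the inventory by IP before running the initialization. A minimal sketch, assuming Ansible is installed on the meta node and pigsty.yml is used as the inventory; `preflight` is a hypothetical name. Ansible's `ping` module is not ICMP ping: it logs in over SSH and checks for a usable Python, and `-b` additionally exercises privilege escalation through sudo.

```bash
# Sketch: pre-flight reachability check before running the playbooks.
# preflight is a hypothetical name; run it from the pigsty directory.
preflight() {
  local inventory="${1:-pigsty.yml}"
  command -v ansible > /dev/null || { echo "ansible not found" >&2; return 1; }
  # Built-in ping module on all hosts, with become (sudo) enabled.
  ansible all -i "$inventory" -b -m ping
}
```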

Access the Demo

You can now reach the services on the meta node through its public IP! Make sure to take appropriate security measures.

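Unlike the sandbox environment, if you want to reach the Pigsty admin interface from the Internet, you must add the configured domain names to /etc/hosts on your own machine, or use a domain name you have actually registered.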

Otherwise, you can only connect directly by IP and port, for example: http://<meta_node_public_ip>:3000.
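To use the domain names from your own workstation, the hosts entries can be generated for the meta node's public IP. In this sketch the name list is copied from the hosts entries in pigsty.yml, leaving out n.pigsty/ntp.pigsty because NTP is not served over HTTP; whether each remaining name is actually proxied depends on your nginx_upstream settings. `pigsty_hosts_entries` is a hypothetical name, and 203.0.113.10 in the usage line is a documentation placeholder for your real public IP.

```bash
# Sketch: print /etc/hosts lines pointing Pigsty's domain names at the meta
# node's public IP. pigsty_hosts_entries is a hypothetical name.
pigsty_hosts_entries() {
  local ip="$1"
  [ -n "$ip" ] || { echo "usage: pigsty_hosts_entries <meta_node_public_ip>" >&2; return 1; }
  local names=(
    "pigsty"
    "y.pigsty yum.pigsty"
    "c.pigsty consul.pigsty"
    "g.pigsty grafana.pigsty"
    "p.pigsty prometheus.pigsty"
    "a.pigsty alertmanager.pigsty"
    "h.pigsty haproxy.pigsty"
  )
  local n
  for n in "${names[@]}"; do
    printf '%s %s\n' "$ip" "$n"
  done
}
```

Usage on your workstation: `pigsty_hosts_entries 203.0.113.10 | sudo tee -a /etc/hosts`. Printing first and appending separately lets you inspect the lines before touching /etc/hosts.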

The domain names Nginx listens on are configured with the nginx_upstream option, for example:

```yaml
nginx_upstream:
  - { name: home, host: pigsty.cc, url: "127.0.0.1:3000" }
  - { name: consul, host: c.pigsty.cc, url: "127.0.0.1:8500" }
  - { name: grafana, host: g.pigsty.cc, url: "127.0.0.1:3000" }
  - { name: prometheus, host: p.pigsty.cc, url: "127.0.0.1:9090" }
  - { name: alertmanager, host: a.pigsty.cc, url: "127.0.0.1:9093" }
  - { name: haproxy, host: h.pigsty.cc, url: "127.0.0.1:9091" }
```
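Before changing DNS or /etc/hosts, the virtual hosts can be checked from your workstation with `curl --resolve`, which pins a host name to a given address so Nginx still receives the right Host header. In this sketch `check_vhosts` is a hypothetical name, port 80 is an assumption about where Nginx listens, and the host names in the usage line mirror the nginx_upstream example. curl reports HTTP code 000 when no response arrives, which the function treats as a failure.

```bash
# Sketch: probe each Nginx virtual host on the meta node's public IP without
# DNS. check_vhosts is a hypothetical name; port 80 is an assumption.
check_vhosts() {
  local ip="$1"; shift
  local host code rc=0
  for host in "$@"; do
    code="$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 \
      --resolve "${host}:80:${ip}" "http://${host}/")"
    echo "${host} -> HTTP ${code}"
    # 000 means curl got no HTTP response at all.
    [ "$code" != "000" ] || rc=1
  done
  return "$rc"
}
```

Usage: `check_vhosts 203.0.113.10 pigsty.cc g.pigsty.cc p.pigsty.cc`, with your meta node's public IP in place of the placeholder.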

Last modified 2021-03-28: update en docs (f994b54)